
Computer

RESURRECTION

The Bulletin of the Computer Conservation Society

ISSN 0958-7403

Number 37

Spring 2006


This year's Annual General Meeting will take place at the Science Museum on Thursday 4 May.

-101010101-

The week before, the Society is hosting a one-day seminar on best practice in computer conservation at the BCS headquarters in Southampton Street, London. The objective is to assist in formalising the procedures used for establishing and running working parties. Those wishing to attend need to register. For more information go to (dead hyperlink - http://www.ccs-miw.org).

-101010101-

Members interested in studying historical computing artefacts may like to know that, as well as visiting the Kensington museum itself, they can attend tours of the Science Museum's facilities at Wroughton, near Swindon, and at Blythe House in London.

Many of the Museum's largest objects are stored at Wroughton, a site which consists of 11 large hangars. They include early computing devices such as the Powers-Samas PCC and the Russian BESM supercomputer, as well as non-computing objects such as a Lockheed Constellation airliner.

Wroughton opens on the first weekend of each month excluding September, starting in March. Entry is free, and guided tours of the collection are held on these days. For more focussed requirements, private tours are available on request at a cost of £5 per head (£3 for concessions). Tours that include a visit to Hangar L1 offer an opportunity to see some of the Museum's larger computing objects.

Blythe House contains some 170,000 objects, more than 10 times the number on display in the Kensington museum. There is a regular programme of tours there covering many different types of scientific object, on Wednesdays starting at 1245 and 1445, at a cost of £10 per head.

Tours at both sites have to be booked in advance. For further information go to www.sciencemuseum.org.uk/wroughton or (dead hyperlink - www.sciencemuseum.org.uk/collections/ingenioustours.asp).

-101010101-

From next year there will also be a National Computer Museum to go to. Agreement was reached in January between the Bletchley Park Trust and the Codes and Ciphers Heritage Trust that Bletchley Park's H Block will become the home of the new enterprise, opening in early 2007.

The Museum will allow visitors to follow the development of computing from Colossus (which H Block was originally built to house) through the mainframe era into the PC/Internet world of today. Many of the exhibits will be provided by the Society, which has a team of about 20 volunteers working at Bletchley Park.

For further information, go to www.nationalcomputermuseum.org.

-101010101-

Jack Kilby, inventor of the integrated circuit, died in June 2005 aged 81. Kilby, employed by Texas Instruments, demonstrated his invention for the first time in 1958.

-101010101-

Brian Spoor, a contributor to Resurrection issue 36, is developing a Web site for ICL 1900 machines and the George 3 operating system. It can be found at (dead hyperlink - http://www.fcs.eu.com/index.html).

-101010101-

The Annals of the History of Computing

We are now entering the fifth year of an agreement under which members of the CCS can subscribe to the always interesting IEEE Annals at a concessionary rate. For 2006 the cost is likely to be £30, which will cover four quarterly issues and the associated UK postage. Existing subscriptions are being renewed automatically, as in previous years. Any other member wishing to join the scheme should contact Hamish Carmichael at .

There are still a few copies available of the "Reconstructions" issue, covering Thomas Fowler's ternary calculating machine, Konrad Zuse's Z3, the restoration of the IBM 1620, replicating the Manchester Baby, the rebuilding of Colossus, and the construction of Babbage's Difference Engine - all fascinating stories. Contact Hamish Carmichael. The one-off price is £10: first come, first served.


Arthur Rowles

The powering-up sequence continues faultlessly at practically every initiation. We have removed the precautionary resistors which shunt the 12E1 controller of the drum motor field current, and have replaced suspect potentiometers in the Clock and Address track head amplifiers.

After contriving to have the drum motor turning at the specified 4600 rpm, we took the opportunity of tuning the primary and secondary windings of the input transformer to the Foster-Seeley discriminator, which regulates the field winding current. As a result, we have seen some evidence of the motor being regulated at this speed, and are just beginning to employ the correct 333 kHz clocking pulses to explore the progress of the Address Track signals via the logic elements in Cabinet 5.

From time to time progress has been arrested by a spurious signal with a 6 microsecond period: this overwhelms other signals, and is probably the result of self-oscillation somewhere. This is not the only puzzle; some or all of them may be traceable to irrational head-lead screen earthing policies adopted in different sections of Cabinet 6. We press on.

After almost four days' work per month for nearly two years, it has to be admitted that recent progress has been somewhat disappointing. In some months the log reveals no discernible forward movement.

Contact Arthur Rowles at .

David Holdsworth

The problem with the resurrected Algol 68-R system has now been resolved. There is a beta-test version at the blind URL: www.bcs.org.uk/sg/ccs/a68demo.zip

Please note this is not yet available to the general public owing to questions of copyright, so please do not put any links to it other than your own personal bookmark.

You need some experience of George 3 in order to use it. The "readme" file will be enhanced in due (overdue) course.

Contact David Holdsworth at .

John Harper

As I write this report, the machine is in a state of hibernation, partly because of the Christmas and New Year break but also because a planned short loss of building heating turned into a major problem. However, we hope to be back working on the machine in early February. Meanwhile the machine is closed up with an oil-filled electric radiator inside to make sure that there isn't any damp and resulting corrosion. Progress has been delayed slightly, but not enough to give us any concern. We have a target to be running real jobs by the end of 2006, with a full complement of external parts if possible, and we are on course to achieve this. At the same time we are about to enter a period of system commissioning: here we feel less able to estimate timescales.

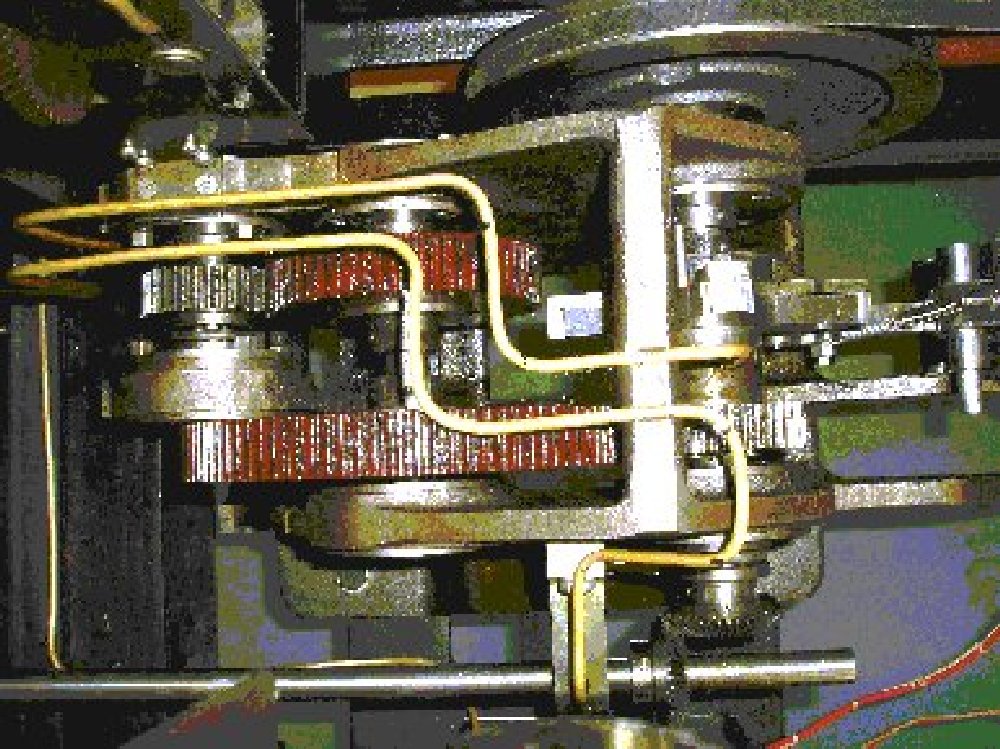

Some work has resumed off site since the Christmas break. Drum and Menu Cable construction continues at its planned steady rate, and still with no expectation that this will delay our overall programme. One particularly interesting activity has been the production of a very detailed punch and die needed to make the special Beryllium Copper contact brushes for the Checking Machine drums. As we need over 1000 of these brushes to complete the Checking Machine, a slick manufacturing process is required. We have to admit that we had some problems with the first example of this punch, but we now know what is needed to correct this and a second example should not take very long to produce.

Before Christmas and since our last report we have been fitting relays and carrying out simple tests whilst in parallel completing the commissioning of the mechanical parts of the machine. The big mechanical exercise was to set up the medium and slow drum ratchet drives so that drum brushes in all 72 cases lined up accurately with commutator studs after they had been advanced one place. This exercise went well with just one part being outside tolerance. This was quickly replaced with a spare assembly. The fast drums have now to be accurately centred so that their brushes are exactly in the centre of the commutator studs when a sense pulse is applied. We are ready to start this exercise and when complete this will virtually finalise all mechanical commissioning.

A big step forward has been achieved with all the Siemens Sense Relays completed and with their unit testing about to commence. As relays pass their tests they will be fitted to the machine. When 26 or more are fitted a simple test menu can be created and an attempt at a full system test tried. We have available from the 1940s what would today be called acceptance tests. These we will run soon but how well or quickly we will progress is, for us, uncharted territory.

When we started these reports we did not have the ability to include photos. Therefore I will backtrack a little and include a couple of examples of earlier work. Note that everything that can be seen, other than the ball bearings, was made by our team from bare metal.

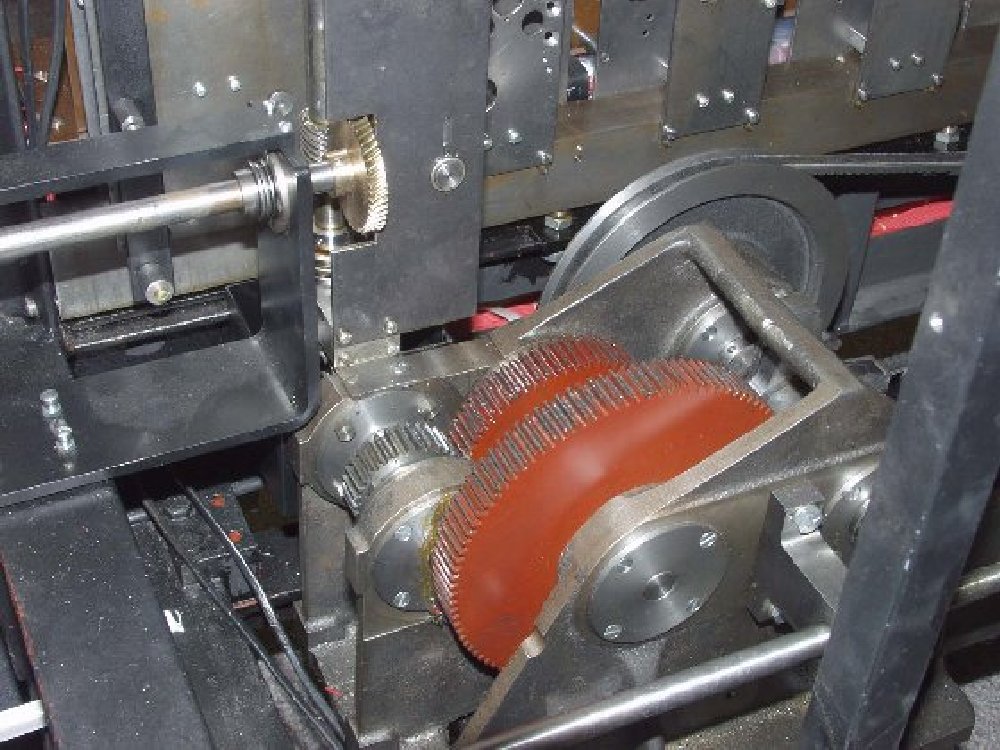

Below is a view into the rear of the machine, showing the main gearbox.

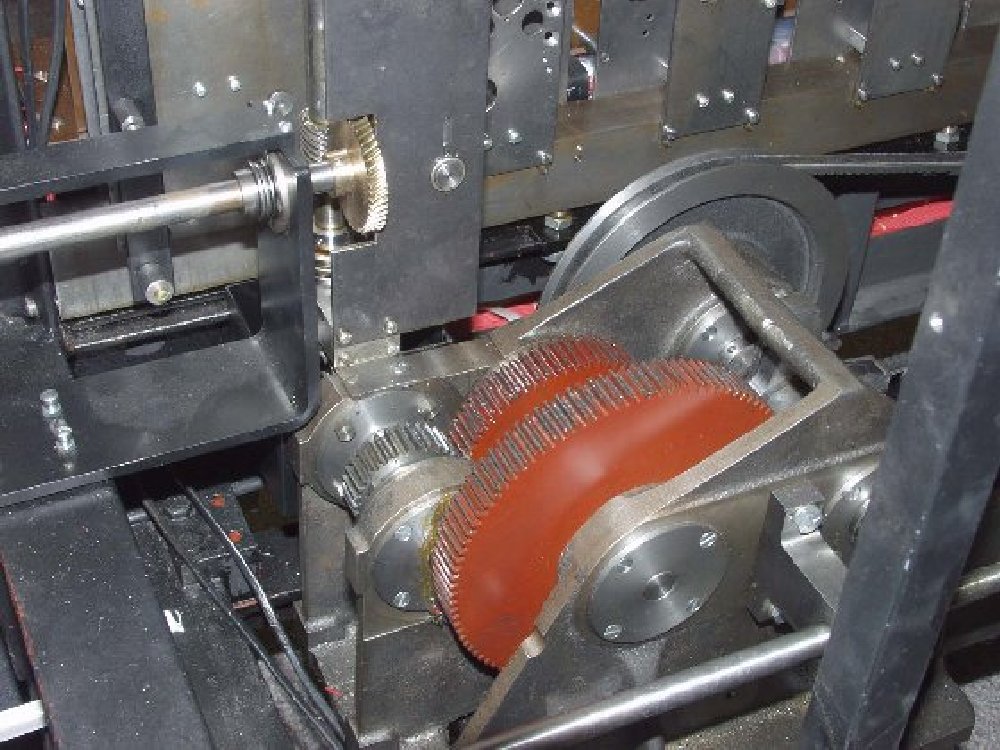

Below is a view from above looking down into the main gearbox.

Our Web site, though not very up to date, can be accessed at https://www.bombe.org.uk/.

Len Hewitt & Peter Holland

The Pegasus continues to be demonstrated working, usually every two weeks, but we still have no facilities for tape preparation, since the replacement motor we obtained for the Trend 700 was also found to be faulty. However, we now hope to obtain a replacement from the manufacturers; as this will not be identical to the original, it will need some modification to fit. This will have to await Len Hewitt's return in April.

Contact Peter Holland at .


Konrad Zuse was one of the earliest computer designers, and unlike most he intended from the start to build small machines for use by individuals. The author runs over the main features of Zuse's range of four machines, and describes efforts made in Germany to build reconstructions of them.

The machines built by Konrad Zuse ranged from the Z1 to the Z4, with two intermediate machines.

The Z1 was a very interesting piece of equipment, first of all because, although it was mechanical, it was not a machine working with gears. Numbers were represented not in the decimal system but in binary. It was built in Berlin between 1936 and 1938.

The Z2 was a prototype, just a desktop concept for the Z3. The Z3 was the second real machine. It was a relay machine, and was built between 1940 and 1941. It was destroyed during the war in a bombing raid. Then there were two intermediate machines - the S1 and the S2; they were like the Z3, but they were not programmable, unlike the Z1, Z3 and Z4.

With the Z3 the program was not stored in the machine but on punched tape, which could be used repetitively. The Z4, built in 1945, was the final concept, the machine that he wanted to sell. He established a company in Berlin, because he thought that he could really sell these machines.

The Z1 was a mechanical design, with the program stored on punched tape. It had the basic arithmetical operations - addition, subtraction, multiplication, division. It was completely binary. It was not a fixed point machine like Eniac or the other American machines; it was a true floating-point machine - probably the first floating-point machine in the world.

The Z3 had the same architecture as the Z1: it was a move from a mechanical machine to a machine built with relays. It was logically equivalent to the Z1 - if you look at the architecture, the input/output, the way the machine works, and the program, it's the same machine, realised in a different technology.

The Z1 was about 2 metres by 2 metres, and was built inside the apartment of Zuse's parents. After it was built nobody could get it out. That's why it stayed in that apartment until 1943 or so, when the machine was destroyed in the bombing. In a street in that part of Berlin there's a plaque identifying where the machine was built.

But the Z1 was not reliable. Zuse could build a machine with relays to show some operations working, but the machine would never work for long: the mechanics would get stuck.

At this time Zuse was also thinking about vacuum tubes. He made a proposal to build a machine using vacuum tubes, and was asked how many tubes he needed. He estimated around 1200, and was told that that was impossible to build. A few years later the Americans built the Eniac with 18,000 tubes!

That is why Zuse decided to use relays instead of tubes, and also because he was happier with mechanics. Zuse was a civil engineer by training; he was better with mechanical things than with electronics.

In the architecture of the Z1 and the Z3, the program was stored on punched tape in binary. There was a control unit which read from the tape, interpreted each instruction and communicated with different parts of the machine. There was a memory which was separate from the processor - something which Eniac, the Mark 1 and the other early American machines didn't have: in those machines memory and processor were integral. With Zuse's computers the memory was separated from the processor, as in a von Neumann architecture. It consisted of 64 words.

The processor was floating-point, as I have said. It had separate circuitry for the exponent and the mantissa. Input was through a numerical keyboard; output was shown on a numerical decimal display.

The main part of the machine was wholly binary; the only decimal part was the input/output. The most difficult part of the machine was the conversion from decimal to binary and from binary to decimal. These were the two operations which required most logical elements in the machine.

This machine as a whole was very similar to what we are used to today. The only difference is that the program was outside the machine rather than in the memory. Zuse thought about putting the program into memory, but he only had 64 words, and that would mean that the problems it could handle would be very small, so he decided to leave the program outside the machine.

How could you build a binary machine using mechanics? It was like playing three-dimensional chess, because you had a lot of parts which were acting on other elements, and then the machine was built in several layers, in fact many layers of mechanics. When you were acting on a given element, sometimes you had to go from one layer to another, and then come back. There were four directions of movement in this machine, so it was very complex.

Actually, nobody in the world understands the Z1! The machine itself was destroyed in the war. Zuse made a reconstruction of it in the 1980s, when he was in his seventies. He never documented it: that's why some people say he was the first computer scientist - he never documented anything! The reconstruction is now in the Deutsches Technikmuseum in Berlin. If you want to understand the machine you have to go there and take it apart. That is what we did.

The Z1/Z3 was a very interesting concept. I think it was the only large-scale binary machine which was built using mechanics in the 20th century.

The machine worked like this: you put a punched tape on the tape reader. Then you started the machine, and when it needed an input it would just pause. So then the operator would enter a number via the keyboard, and press the Continue button. The machine would resume processing. When the machine was ready to show a result it would stop and show the result on the decimal display.

One good feature was the internal format of the numbers. Each number was stored with the exponent using seven bits and the mantissa using 14 bits, plus one bit for the sign of the number. Modern computers work with floating-point numbers using a very similar format, though the number of bits is different: in the common single-precision format, eight bits for the exponent and 23 bits for the mantissa, plus again one bit for the sign. Neither format, Zuse's nor the modern one, stores the leading '1' of the mantissa.

All floating-point numbers were held in such a way that they did not need the '1' at the beginning. When you unpacked the number from the memory to the processor, you had to add the leading '1' so that the processor could work with it. This form means that you need a special representation for zero, because zero has no leading '1'.

Zuse represented zero by using the minimum exponent, the smallest exponent that could be held in the machine. This is not exactly the convention used in modern computers, but it is very similar.
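To make the format concrete, here is a minimal Python sketch of packing and unpacking a number in a Zuse-style 22-bit word: one sign bit, a seven-bit exponent and 14 stored mantissa bits, with the leading '1' left implicit and the minimum exponent reserved for zero. The two's-complement exponent encoding and the truncation of surplus mantissa bits are assumptions made for illustration, not details given in the talk. For comparison, `ieee_single_fields` uses Python's standard `struct` module to expose the 1 + 8 + 23 bit layout of a modern single-precision number.

```python
import math
import struct

EXP_BITS, FRAC_BITS = 7, 14
EXP_MIN = -(1 << (EXP_BITS - 1))      # -64, reserved here to mean zero
EXP_MAX = (1 << (EXP_BITS - 1)) - 1   # 63

def zuse_pack(x):
    """Split x into (sign, exponent, stored fraction) with a hidden leading 1."""
    if x == 0.0:
        return (0, EXP_MIN, 0)                  # zero: the minimum exponent
    sign = 1 if x < 0 else 0
    m, e = math.frexp(abs(x))                   # abs(x) == m * 2**e, 0.5 <= m < 1
    m, e = m * 2.0, e - 1                       # renormalise so that 1 <= m < 2
    if not EXP_MIN < e <= EXP_MAX:
        raise OverflowError("exponent does not fit in 7 bits")
    frac = int((m - 1.0) * (1 << FRAC_BITS))    # drop the leading 1, truncate
    return (sign, e, frac)

def zuse_unpack(sign, e, frac):
    """Rebuild the value, putting the hidden leading 1 back."""
    if e == EXP_MIN:
        return 0.0
    m = 1.0 + frac / (1 << FRAC_BITS)
    return (-m if sign else m) * 2.0 ** e

def zuse_word(sign, e, frac):
    # 1 + 7 + 14 = 22 bits; exponent as 7-bit two's complement (an assumption)
    return (sign << 21) | ((e & 0x7F) << 14) | frac

def ieee_single_fields(x):
    """(sign, biased exponent, stored fraction) of x as an IEEE single: 1 + 8 + 23 bits."""
    bits = struct.unpack(">I", struct.pack(">f", x))[0]
    return (bits >> 31, (bits >> 23) & 0xFF, bits & 0x7FFFFF)
```

For example, 3.0 is 1.5 × 2¹, so it is stored as sign 0, exponent 1 and fraction 0.5 × 2¹⁴ = 8192; the leading '1' of 1.5 never appears in the word.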

The Z2 was a fixed point processor. It was the same in principle as the Z4, but it was a kind of hybrid machine. The memory was mechanical, but the processor was built using relays. This highlights a very important point about Zuse.

Zuse didn't want to build mainframes for big institutions. He wanted to build small machines for individuals. That was the market he had in mind. He was quite sure that mechanics could be miniaturised, but he was not so sure about relays. So he thought that by hiring some Swiss guys who were used to building clocks he could shrink the memory to a few cubic centimetres, and then put a version on the desk of every engineer.

That was his vision, and there are some papers showing that the Z2 was the desktop computer that he wanted to produce. It used a mechanical memory because it was cheaper and smaller, and a processor built out of relays.

About the Z3 instruction set I've already said something. There were the arithmetic operations - addition, subtraction, multiplication, division and square root. He had a way of writing these operations down like what we would today call assembler code. Then the code was punched onto tape.

There were two instructions for handling numbers, one for loading numbers from the memory into the processor and the other for storing numbers in the memory. There were also two instructions for input and output, with decimal-to-binary conversion on input and binary-to-decimal conversion on output. So there were only nine instructions for the whole machine.
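The nine instructions can be illustrated with a toy interpreter. This is a hypothetical sketch, not a description of the real Z3's wiring: the instruction names are invented, Python floats stand in for the 22-bit floating-point words, and the register convention (the first operand fetched goes into R1, the second into R2, and results are left in R1) is an assumption. The tape is simply a list executed from start to finish, since there is no jump instruction.

```python
import math
import operator

# Four of the nine instructions share one pattern: R1 = R1 op R2.
ARITHMETIC = {"add": operator.add, "sub": operator.sub,
              "mul": operator.mul, "div": operator.truediv}

class ToyZ3:
    """Hypothetical nine-instruction machine in the spirit of the Z3."""

    def __init__(self, keyboard):
        self.memory = [0.0] * 64        # 64-word store, separate from the processor
        self.r1 = 0.0
        self.r2 = 0.0
        self.r1_loaded = False
        self.keyboard = list(keyboard)  # numbers the operator will key in
        self.display = []               # results shown on the decimal display

    def _fetch(self, value):
        # Assumed convention: the first operand goes to R1, the next to R2.
        if not self.r1_loaded:
            self.r1, self.r1_loaded = value, True
        else:
            self.r2 = value

    def run(self, tape):
        for instruction in tape:        # no jumps: every instruction runs in order
            op = instruction[0]
            if op == "input":           # machine pauses until a number is keyed in
                self._fetch(float(self.keyboard.pop(0)))
            elif op == "output":        # machine stops and shows R1
                self.display.append(self.r1)
                self.r1_loaded = False
            elif op == "load":
                self._fetch(self.memory[instruction[1]])
            elif op == "store":
                self.memory[instruction[1]] = self.r1
                self.r1_loaded = False
            elif op == "sqrt":
                self.r1 = math.sqrt(self.r1)
            elif op in ARITHMETIC:
                self.r1 = ARITHMETIC[op](self.r1, self.r2)
            else:
                raise ValueError(f"unknown instruction: {op!r}")
        return self.display

# Example tape: key in a, b and c, store them, then display (a + b) * c.
EXAMPLE_TAPE = [("input",), ("store", 0), ("input",), ("store", 1),
                ("input",), ("store", 2), ("load", 0), ("load", 1), ("add",),
                ("load", 2), ("mul",), ("output",)]
```

Running `ToyZ3([2, 3, 4]).run(EXAMPLE_TAPE)` keys in 2, 3 and 4 and displays 20.0. Input, output, load, store, add, subtract, multiply, divide and square root make up the whole instruction set.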

There was no branching instruction. But one thing you could do was to put both ends of the tape together to make a loop. There was also a way of branching using arithmetic, allowing you to reduce a positive number to 1 and any other number to zero; using this trick you could do 'branching', not in reality but by simulation. The machine had no branching as such. The first machine with branching was the Z4.
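The effect of 'branching by arithmetic' can be sketched as follows: since the tape always runs straight through, both alternatives are computed and a 0-or-1 flag decides which one survives. The way the flag is produced here (x plus the square root of x squared, divided by twice that root) is one possible construction using only the machine's operations, assumed for illustration; the talk does not say which trick Zuse used, and unlike the description in the talk this version fails for x equal to zero.

```python
import math

def positive_flag(x):
    """Return 1.0 for x > 0 and 0.0 for x < 0, using only *, +, / and sqrt.
    Hypothetical construction; it divides by zero when x == 0."""
    magnitude = math.sqrt(x * x)                 # |x| without any comparison
    return (x + magnitude) / (magnitude + magnitude)

def select(flag, if_true, if_false):
    """Branch-free choice between two values that have both been computed."""
    return flag * if_true + (1.0 - flag) * if_false

def tape_program(x):
    # "if x > 0 then 2x else x squared", with no jump: every step always runs
    doubled = x * 2.0
    squared = x * x
    return select(positive_flag(x), doubled, squared)
```

The price of this style is that both `doubled` and `squared` are always computed; one of them is simply multiplied by zero and thrown away.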

The processor was built using only two internal registers, each with an exponent part and a mantissa part. There were two adding units, one for the exponents and one for the mantissas, so you could do multiplication by adding the exponents, and addition and subtraction by handling the mantissas. It is very similar to the way we handle floating point in today's machines, where we separate the handling of the exponent and the mantissa.
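Handling exponent and mantissa separately can be shown with a small sketch for positive numbers. Each value is an (exponent, mantissa) pair, with the mantissa held as an integer scaled by 2¹⁴ so that the leading '1' is explicit inside the processor. Python's integer `*` stands in for whatever sequence of additions the mantissa unit actually performed, and the truncating shifts are an assumption.

```python
FRAC_BITS = 14
ONE = 1 << FRAC_BITS    # integer mantissa representing 1.0
TWO = ONE << 1          # integer mantissa representing 2.0

def normalise(e, m):
    """Shift the mantissa back into [1, 2), adjusting the exponent to match."""
    while m >= TWO:
        m >>= 1
        e += 1
    while m and m < ONE:
        m <<= 1
        e -= 1
    return e, m

def fmul(a, b):
    (ea, ma), (eb, mb) = a, b
    # Exponent unit: add the exponents. Mantissa unit: multiply the mantissas.
    return normalise(ea + eb, (ma * mb) >> FRAC_BITS)

def fadd(a, b):
    (ea, ma), (eb, mb) = a, b
    if ea < eb:                              # make a the larger operand
        (ea, ma), (eb, mb) = (eb, mb), (ea, ma)
    # Exponent unit: difference of exponents. Mantissa unit: align, then add.
    return normalise(ea, ma + (mb >> (ea - eb)))

def to_float(x):
    e, m = x
    return m / ONE * 2.0 ** e
```

With 3.0 written as `(1, 24576)` (1.5 × 2¹) and 1.0 as `(0, 16384)`, `fmul` gives `(3, 18432)`, which is 1.125 × 2³ = 9.0, and `fadd` gives `(2, 16384)`, which is 4.0.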

I think the most important part of the machine for making it small was the microsequencer. The instructions were hardwired up to a point, but then there was sequencing between micro-instructions for what the machine had to do. Executing one instruction meant activating several micro-instructions, and for that there was a microsequencer, which was a row which could move in every cycle. So you could run through the micro-instructions for step one, and then the micro-instructions for step two, and so on. This allowed you to have quite complicated instructions. Multiplication, for example, was an instruction that used 18 steps.

This meant that the machine could be made very simple, because any time you needed a new instruction you just put in another micro-sequencer. You didn't have to hardwire the machine again. Thus you could expand the machine and make it more powerful.
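The microsequencer idea can be sketched in a few lines: each instruction is nothing more than a list of micro-operations, and a sequencer executes one per cycle. Multiplication is done here by binary shift-and-add, one step per multiplier bit, and a new 'square' instruction is created simply by adding another list. The 8-bit width, the register names and the micro-operations are all hypothetical, and the step counts have no connection with the 18 steps of the real machine.

```python
BITS = 8  # hypothetical operand width; operands must fit in this many bits

# Each micro-operation does one small thing to the machine state.
def clear_acc(s):
    s["acc"] = 0

def copy_md_to_mq(s):
    s["mq"] = s["md"]

def shift_add_step(s):
    # Examine the lowest multiplier bit, add if it is set, then shift both operands.
    if s["mq"] & 1:
        s["acc"] += s["md"]
    s["md"] <<= 1
    s["mq"] >>= 1

# The "microcode": each instruction is just a sequence for the sequencer to step through.
MICROCODE = {
    "mul": [clear_acc] + [shift_add_step] * BITS,
    # A new instruction made only by adding a new sequence, with no rewiring.
    "square": [clear_acc, copy_md_to_mq] + [shift_add_step] * BITS,
}

def execute(instruction, state):
    """Run the instruction's micro-operations one per cycle; return the cycle count."""
    cycles = 0
    for micro_op in MICROCODE[instruction]:
        micro_op(state)
        cycles += 1
    return cycles
```

For example, with `state = {"md": 13, "mq": 11, "acc": 0}`, `execute("mul", state)` takes 9 cycles and leaves 143 in `state["acc"]`. The multiplicand register is shifted in place, so the operands are consumed by the operation.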

That principle was carried through from the Z3 to the Z4. The Z4 was actually just a Z3 with a lot of extra micro-sequencers giving many more instructions and so many more possibilities for the machine.

We have done some reconstruction in the Berlin Computer Science Institute. Our Z3 is not a one-to-one replica of the original Z3. The design and the logical operations are exactly the same, but we did not use the original relays, preferring modern relays which are much smaller.

Again, we didn't try to do a one-to-one reconstruction of the memory that exists in the Deutsches Museum in Munich, because first we didn't want to copy the Museum's machine, and second we wanted to reconstruct the machine as a logical instrument. So we have put a lot of lamps on every logic element.

The machine can be run in the original 'beat' of the Z3; it can also be run single-step, and it can be run very slowly. So you can follow the flow of information through the machine, watching the exponent part of the machine and the mantissa part, and you can follow the individual instructions. You can also see what has been stored in the memory.

We did not put a single mechanical component on the machine, because we want it to run for decades. That is the problem with the original machine - it doesn't run any more. There used to be an old man in Munich who repaired the machine. Then he left the Museum, and the machine failed, and nobody has been able to repair it since. The whole of our machine is documented! The lack of documentation was part of the problem with the other machine.

Let me tell you what we are doing in this work at the University. We have reconstructed the internals of the machine, and documented them. We published a book in 1998 with the full schematics of the machine, so that anybody who wants to can reconstruct it: the circuits are known.

The other problem is that we are a computer science institute, and I work with computer science students, and I have been giving them computer history seminars for many years. My experience has been that students in computer science tend not to care very much about history. They want to know the latest about machines; they want to play with the latest operating system and the latest programming language. So every time I gave a computer history seminar there were very few students!

So what I started doing was not just reviewing the history of computing, but doing simulation of the machines I was discussing. Now when I have a seminar the students have to write a simulation in Java (or any other computer language) to illustrate how the machine worked. So we have produced for example the first working simulation of the Eniac, as well as working simulations of parts of the Z3 and the Z1.

We also have a simulation of the mechanical components of the Z1. This machine was built with the help of many students, who collaborated in this programme, debugging the electronics and doing much of the work that was necessary in order to get the reconstruction working.

So that's why my approach to historical reconstruction is: Get the logic right. Get to know how the machine worked. Document the machine, so that in the future people can marvel at how much intelligence was put into the design of the machine. And reconstruct the thing in such a way that when people go and look at the machine they understand what is going on inside it.

That's our approach, and in the case of the Z3 I think it has paid off.

Editor's note: This is an edited transcript of the talk given by the author to the Society at the Computing Before Computers seminar at the Science Museum on 12 May 2005. The Editor acknowledges with gratitude the work of Hamish Carmichael in creating the transcript. Professor Rojas can be contacted at .


Information Technology did not begin to appear until the second half of the 20th century, but information processing as we understand it started at the beginning of the 19th. The author describes some of the major UK data processing systems in the days of the horse-drawn cab.

The classic picture of the Victorian clerk is essentially of a solitary worker with a tall stool and a sloping desk, filling in details in a bound ledger.

Indeed an insurance office in the 1820s typically had fewer than a dozen clerks. Those clerks were all multi-skilled and multi-talented; they would each take an insurance application and pursue it through all the various processes of underwriting. But by 1890, the Post Office Savings Bank had a workforce of 1800 clerical staff. The picture had changed radically. And it all seemed to take off round about the 1850s.

When I talk about Victorian data processing I'm talking about information processing in the way that we mean it today - very high volume, very efficient, low cost. The difference in Victorian times was that it was done without machinery; it was done purely by human activity and by pushing pieces of paper around.

Those systems were extremely robust. They were sophisticated in the way that today's information processing systems are sophisticated, and there might actually be some things that we can learn from them. Particularly their robustness: there never was a systems crash in the Post Office Savings Bank.

One of the first large scale processing systems was developed at the Bankers' Clearing House. It originally started in the 1830s, when there were a number of banks in London.

At that time, if you drew a cheque, eventually that cheque would find its way back to your home issuing bank, and they would exchange that cheque for money. That was how settlement was done.

What actually happened was that a clerk would walk from your bank and make a complete tour of all the other banks in London, exchanging cheques for money. There was one walk clerk from each bank. It was a highly unsafe occupation. They'd all meet in the Five Bells public house on Lombard Street, and they'd exchange all the cash there. In the 1830s the banks formalised this arrangement with the Bankers' Clearing House, also in Lombard Street.

Charles Babbage was one of the first people to realise what a significant innovation this was. He wrote the first account of the clearing house concept; in fact it's about the only one in the literature, because the Clearing House was a highly secretive organisation for security reasons, and Babbage was about the only person who ever got access to it. He described it in his book On the Economy of Machinery and Manufactures, published in 1832.

Over the following decades the level of activity greatly increased. By 1868 the Bankers' Clearing House was accommodating about 200 clerks, so each individual bank was sending several. What they had at last realised is that you don't need to exchange money, as they had been doing for years; all you need to do is exchange the difference. So they evolved an elaborate information processing mechanism, in which you registered all the money owed to you at a central desk, and all the money you owed at the same desk. The clerk then collected or paid the difference.

The banks then realised that only relatively small amounts of money needed to change hands. Instead of exchanging hundreds of thousands of pounds in the day, they found that on balance most of the sums cancelled each other out.

Not long after that they realised that if all the banks had an account at the Bank of England, then they did not need to have any money at the Clearing House at all. So the idea of moving money bags was transformed into an information processing task, and they were simply moving numbers around on pieces of paper.
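The clearing principle, that only the differences need to be settled, amounts to what is now called multilateral netting. A minimal sketch, with invented banks A, B and C and invented amounts in pounds: each cheque debits the bank it is drawn on and credits the bank presenting it, and the net positions always sum to zero.

```python
from collections import defaultdict

def net_positions(cheques):
    """cheques: (bank_drawn_on, bank_presenting, amount) triples.
    Returns each bank's net position: positive means the bank is owed money."""
    net = defaultdict(int)
    for drawn_on, presenting, amount in cheques:
        net[drawn_on] -= amount     # the issuing bank must pay
        net[presenting] += amount   # the bank holding the cheque is owed
    return dict(net)

# A hypothetical day's cheques, in pounds.
cheques = [("A", "B", 500), ("B", "A", 450), ("B", "C", 300),
           ("C", "A", 320), ("A", "C", 40)]
net = net_positions(cheques)
gross = sum(amount for _, _, amount in cheques)    # total value of cheques cleared
to_settle = sum(v for v in net.values() if v > 0)  # money that must actually move
```

Here £1610 of cheques are cleared, but the net positions are A +230, B -250 and C +20, so only £250 has to change hands; with accounts at the Bank of England even that becomes a transfer between book entries.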

I've studied a number of these large organisations, and the question is: why did they all suddenly take off in the middle of the 19th century? I believe it was the effect of the Industrial Revolution, when for the first time, instead of an affluent upper class and an impoverished working class, you had a relatively prosperous working class. There were suddenly millions of consumers. They hadn't got very much money, but they were mobile. The railways, the post and the telegraph had arrived, and people were starting to move around and send holiday postcards.

Previously people only ever had just enough to live on. Now suddenly they were saving for bank holidays and furniture. So you now needed clearing banks for the cheap consumer goods that they were buying, and credit enforcing agencies. People were also being encouraged to save, and there emerged the Thrift Movement, savings banks, life insurance, and the whole idea of registration to keep tabs on this newly mobile population. The common theme that connects all of these activities is the processing of millions and millions of transactions in a way that never happened before.

The General Register Office, founded in 1837, maintains a record of every vital event - births, marriages and deaths, "hatches, matches and dispatches". There is a huge information load there, and it's cumulative. It started off quite small, but after 50 years of births, deaths and marriages the information had become substantial, and was growing all the time.

One of its main information processing tasks was the Census. The Census Office was used to create an age profile of the population. You can imagine all kinds of planning activities that needed to know that.

The GRO had one set of clerks, called the abstracting clerks, who took the census forms and recorded all the data. They calculated totals as well. The clerks created a sheet of paper for each district, and as there were several thousand districts in the UK, you got several thousand sheets of paper that another group of clerks then had to deal with. It cascaded down through three levels of data concentration in order to generate the final tabulation. It was an entirely manual system.
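The cascade of abstracting clerks is, in modern terms, a hierarchical aggregation of tallies. The sketch below assumes ten-year age bands and a district, county and national hierarchy purely for illustration; the talk does not say how the three levels were organised, and all the names and data are invented.

```python
from collections import Counter

def abstract_district(ages):
    """Level 1: one abstracting clerk's sheet, a tally of people by ten-year age band."""
    return Counter(age // 10 * 10 for age in ages)

def consolidate(sheets):
    """Higher levels: add several sheets together into one summary sheet."""
    return sum(sheets, Counter())

# Hypothetical returns for three districts in two counties.
district_sheets = {
    "District 1": abstract_district([3, 15, 17, 42]),
    "District 2": abstract_district([8, 71]),
    "District 3": abstract_district([25, 29, 33]),
}
county_sheets = [
    consolidate([district_sheets["District 1"], district_sheets["District 2"]]),
    consolidate([district_sheets["District 3"]]),
]
national_table = consolidate(county_sheets)   # final tabulation of the age profile
```

Each clerk only ever handles sheets from the level below, which is what let the work be divided among many people with no single person seeing all the forms.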

The Railway Clearing House was a fascinating organisation, because it was a response to the problems that arose when the railways were not integrated. That is exactly the problem that we're dealing with at the moment, and today we're tackling it with American software. The way it was done before nationalisation of the railways, indeed from the 1840s, was by means of the Railway Clearing House. You can see the analogy with the Bankers' Clearing House.

One of the problems they had was the 'through traffic problem', as they called it. The small railway companies were connected, but say for example that you wanted to go from London to Aberdeen, you had to change trains nine times as you moved through the different company areas. What was obviously needed was integration of the system, so that you didn't have this business of changing from one train to the next. That is what the Railway Clearing House achieved.

Train operators could now take any piece of rolling stock that happened to be lying around, whoever owned it, and attach it to their train. At every intersection on the railways, and particularly at the joins between companies, there were people called 'number-takers', who wrote down the numbers of the engine and of all the individual pieces of rolling stock as the trains rushed by. That information was then transmitted to the Railway Clearing House. So they were building up a picture of where every piece of rolling stock was on the line.
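The number-takers' reports allowed the Clearing House to keep what we would now call a location table. A hypothetical sketch: each report is a (time, place, vehicle numbers) record, the latest sighting of each vehicle gives its last known whereabouts, and the full list of sightings is the paper trail that clerks could search when something went missing. The vehicle numbers, junction names and simple integer times are invented.

```python
def last_seen(reports):
    """reports: (time, place, vehicle_numbers) records from number-takers.
    Returns {vehicle_number: (time, place)} for each vehicle's latest sighting."""
    where = {}
    for time, place, vehicles in sorted(reports, key=lambda r: r[0]):
        for number in vehicles:
            where[number] = (time, place)   # a later sighting overwrites an earlier one
    return where

def trail(reports, number):
    """The 'paper trail' for one vehicle: every sighting, in time order."""
    return [(time, place)
            for time, place, vehicles in sorted(reports, key=lambda r: r[0])
            if number in vehicles]

# Hypothetical reports from two junctions.
reports = [(2, "Junction Y", ["W17", "C4"]),
           (1, "Junction X", ["W17", "W22"])]
```

Here `last_seen(reports)["W17"]` is `(2, "Junction Y")`, and `trail(reports, "W17")` lists both sightings, which is where a search for a missing wagon would start.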

When you bought a ticket, say from London to Aberdeen, it was agreed that the ticket price would be divided in various proportions between the railway operators. So when that ticket was collected, it was in effect an open cheque. That is, in fact, why tickets were collected. The physical tickets went to the Clearing House to be converted into money, and that money was allocated among all the operators who had contributed to the journey.
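Dividing a through fare is an apportionment problem. As an illustration only, the sketch below splits a fare held in pence in proportion to each company's share (mileage, say) and hands out the odd pence by largest remainder, so that the parts always add up to the whole fare. The talk does not say which rule the Clearing House actually used, and the company names and figures are hypothetical.

```python
def apportion(fare_pence, shares):
    """Split an integer fare among companies in proportion to their shares.

    Each company first gets the whole-penny part of its exact share; the pence
    left over go to the companies with the largest remainders.
    """
    total = sum(shares.values())
    parts = {co: fare_pence * s // total for co, s in shares.items()}
    remainders = {co: fare_pence * s % total for co, s in shares.items()}
    leftover = fare_pence - sum(parts.values())
    for co in sorted(shares, key=remainders.get, reverse=True)[:leftover]:
        parts[co] += 1
    return parts

# Hypothetical through journey over three companies' lines (shares = miles run).
split = apportion(1001, {"Company A": 100, "Company B": 120, "Company C": 180})
```

Integer arithmetic is used throughout so that no fraction of a penny is lost or created; here the single odd penny goes to Company C, giving 250, 300 and 451 pence.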

More than that, railway proprietors had to decide whether to buy rolling stock or not. If there was a temporary shortage they would just buy new rolling stock and enter it into the system. If somebody else used one of their carriages, that was OK because they got a rental for it through the clearing system.

Again it was an extremely robust system: it was very rare that an item of rolling stock actually got lost in the system. They had some very experienced clerks, so that if something did go missing, they could go through the paper trail and find out where the thing could have got to.

The office they used is still there, up Eversholt Street alongside Euston Station in London. It was said to be the largest office in the world at one time, and there were something like 200 clerks there shuffling pieces of paper around and so actually achieving this amazing feat of integrating the whole railway system.

In the 1870s the telegraph system started to take off. The Central Telegraph Office, an enormous building in St Martin's Le Grand, was the heart of the system. It was effectively a switch for routing telegrams, equivalent to a present-day Internet hub.

If you wanted to send a telegram from, say, Oxford to Cambridge, those two cities were not connected by a direct line. So someone in Oxford would send a telegram to the Central Telegraph Office, and then the Central Telegraph Office would send it on to Cambridge.

Predominantly female telegraphists received messages in serried ranks. There were messenger boys constantly circulating the room. Whenever a new message came in a messenger boy would pick it up and take it to the desk at the front. There was a set of sorting boxes there, each of which was for one city. So when a message came in from Oxford destined for Cambridge, it was popped into the Cambridge box.

Another set of messenger boys collected telegrams from those boxes: one would have taken our Oxford message to the Cambridge transmission station. There was a delay of a few minutes, but it was a real store-and-forward messaging service.
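The sorting-box arrangement is a store-and-forward queue keyed by destination. The toy model below is only a sketch of that idea: the class and method names are hypothetical, a `deque` stands in for each sorting box, and the "messenger boys" are simply the calls to `receive` and `collect`.

```python
from collections import defaultdict, deque


class TelegraphHub:
    """Toy model of the Central Telegraph Office's sorting boxes (hypothetical names)."""

    def __init__(self):
        # One sorting box per destination city, created on first use.
        self.boxes = defaultdict(deque)

    def receive(self, origin, destination, text):
        # A messenger boy carries the incoming form to the box for its destination.
        self.boxes[destination].append((origin, text))

    def collect(self, destination):
        # Another messenger empties the box and takes its contents to the outgoing station.
        box = self.boxes[destination]
        messages = list(box)
        box.clear()
        return messages


hub = TelegraphHub()
hub.receive("Oxford", "Cambridge", "ARRIVING FRIDAY")
print(hub.collect("Cambridge"))
```

Messages wait in the box until collected, which is where the delay of a few minutes came from.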

By 1930, people were using different telegraph instruments, based on paper tape, but the principle was the same. This is what I call the persistence of systems - they got the basic idea right in the 1870s, and they just carried on till the 1960s, though there were constant technical improvements, laying new technology on top of the old.

We've forgotten about the Thrift Movement today, but people used to save for what they wanted to buy. It was a huge movement at the time, and Samuel Smiles' Self-Help and Thrift were two best-selling books that promoted the idea.

The Prudential Insurance Company was a key part of this movement. The company realised that the newly affluent working class wanted burial insurance, and the average policy value was about eight guineas (£8.40). But the hard-pressed working class was not capable of saving in shillings, and needed to pay tiny premiums weekly.

Compare this with the situation at the beginning of the century, when the Equitable Insurance Office had eight clerks, and the average annual premium was £5, paid once. Half a century later the average premium was one or two pence, paid weekly, so there was an enormous difference in the volume of transactions.

The Prudential of the 1880s was much more like a modern insurance company than the Equitable of the 1820s. There were clerks who specialised in a particular function: ledger clerks, correspondence clerks, claims-handling clerks and so on, trained in a narrow niche of the business.

Most interesting, I always think, was the Transcript Division, which consisted of 110 clerks, half the office's labour force. What they did was document copying: they were a giant copying machine. They employed low-grade clerks, often 14-year-old school leavers, and their job was to make copperplate copies of documents that were sent down for copying. By 1880 they had something like a million policies in force, and several hundred clerks. This had become a truly enormous operation.

My conclusion is that data processing doesn't necessarily involve machinery. I think what information technology does is to enable you to increase the granularity of information processing. So today we can write cheques that cost next to nothing to process. That's really what computers are doing. The underlying information systems aren't changing.

If you look at BACS - the descendant of the Bankers' Clearing House - in the 1980s, it had then an ICL mainframe, but the underlying information system had not really changed from a century earlier. The social historian EP Thompson once wrote about what he terms "the condescension of posterity". Sometimes we look back on early technology in a condescending way, as quaint and charming and not quite real. But we need to avoid this temptation. We should walk past the old Bankers' Clearing House building in Lombard Street as we would walk past an Atlas computer in a museum, and stand in awe. Though you can now get an Atlas on a microchip, that doesn't negate the achievement. So next time you go to Holborn and walk past the Prudential building, think of that as a mainframe of the 1870s.

Editor's note: This is an edited transcript of the talk given by the author to the Society at the Computing Before Computers seminar at the Science Museum on 12 May 2005. The Editor acknowledges with gratitude the work of Hamish Carmichael in creating the transcript. Martin Campbell-Kelly can be contacted at .


The Society has its own Web site, located at www.bcs.org.uk/sg/ccs. It contains electronic copies of all past issues of Resurrection, in both HTML and PDF formats, which can be downloaded for printing. We also have an FTP site at ftp.cs.man.ac.uk/pub/CCS-Archive, where there is other material for downloading including simulators for historic machines. Please note that this latter URL is case-sensitive.


The computer manager at Littlewoods recalls the difficulties involved in running his late 1950s computer installation. Much ingenuity went into developing techniques to overcome the chronic unreliability of the hardware and the impossibility of ensuring error-free data. But despite all the inefficiencies and difficulties, the computer more than paid for itself by allowing a reduction in stock levels.

In March 1958 Littlewoods Mail Order Stores installed an Elliott 405 computer. Littlewoods was an aggressively commercial company driven by its owner John Moores. The brothers John and Cecil Moores had made their fortune with Littlewoods Football Pools, but by 1958 John Moores was devoting his time to the mail order and chain store business and to Everton FC. There was no starry-eyed vision of the future in the ordering of the Elliott 405. It was bought for stock control. It was bought to make money and it did make money, but not perhaps in the way expected.

A back-of-an-envelope calculation, years later, suggests that such a claim of profitability was not unreasonable. The purchase price of the computer was £110,000, representing an annual cost of between £10,000 and £20,000. The Computer Department had a staff of about 60. It is unlikely that staffing costs of the Computer Department exceeded £50,000.

The mail order business alone had a turnover of £75 million, and stock levels were generally about six to eight weeks' worth of sales. Reducing stock by a single week would have saved about £70,000 a year in financing costs (at 5%). It was generally accepted that savings greater than this were made. Added to that was the contribution from the Chain Store business, though that was less impressive.
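The arithmetic behind the claim can be checked in a few lines. This sketch assumes, as the text implies, that one week's stock is worth turnover/52 and that the 5% is an annual interest (financing) rate; the upper figures of £20,000 a year for the machine and £50,000 for staff are the ones given above. The function name is illustrative.

```python
def weekly_stock_saving(turnover, interest_rate, weeks_saved=1):
    """Annual financing cost avoided by holding `weeks_saved` fewer weeks of stock.

    Assumes stock valued at turnover and a 52-week year.
    """
    one_week_of_stock = turnover / 52
    return one_week_of_stock * weeks_saved * interest_rate


saving = weekly_stock_saving(75_000_000, 0.05)
computer_cost = 20_000 + 50_000  # upper estimates: machine plus Computer Department staff
print(f"one week's stock saved: £{saving:,.0f} a year")
print(f"upper estimate of running costs: £{computer_cost:,.0f} a year")
```

On these assumptions a single week's reduction (about £72,000) roughly matches the upper estimate of the computer's annual cost, so any larger saving put the machine in profit.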

At the time the Elliott 405 at Littlewoods was considered top of the range. It had a magnetic disc of 16,384 words of 32 bits, four times the capacity of the normal magnetic drum of other machines, and a double-sized main memory of 512 words. The nominal add time was 1/2000 sec, which was rather pompously described as 500 microseconds. When the order was placed for this fantastic machine no one was so naïve as to expect that this meant that stock control of a department of 2000 items could be achieved in a few seconds. But it took many months before it was accepted that it would take several, if not many, hours. Fortunately those of us who were responsible for delivery of results had not been party to the decision to buy when the order had been placed 18 months beforehand!

Comparing history with the present is intriguing even if not relevant to the issues of the time. The computer and print room occupied about 300 square yards. To have achieved the power of a modern laptop would have required 10-storey buildings throughout the square mile of the City of London. But knowing what was to come would have been of no concern. It would not even have been demoralising. It was irrelevant. There was work to do.

The main files were held on magnetic films. These were also used for sorting input. They were standard 35mm films as used in the cinematic industry with a magnetic coating, and performed the same function as magnetic tapes on other machines. One film held 2048 blocks of 64 words on both a forward and reverse track. At four to five blocks per second it took 15 minutes to read a whole reel at full speed.
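The quoted reading time is easiest to reconcile if each of the two tracks held 2048 blocks, 4096 blocks in all; that reading of the sentence is an assumption made here, not something the article states outright. The sketch below simply divides blocks by the stated rate of four to five blocks per second.

```python
BLOCKS_PER_TRACK = 2048  # assumption: 2048 blocks on each of the forward and reverse tracks


def reel_read_minutes(blocks, blocks_per_second):
    """Minutes needed to read `blocks` blocks at a steady rate."""
    return blocks / blocks_per_second / 60


for rate in (4, 5):
    one_track = reel_read_minutes(BLOCKS_PER_TRACK, rate)
    both_tracks = reel_read_minutes(2 * BLOCKS_PER_TRACK, rate)
    print(f"{rate} blocks/s: one track {one_track:.1f} min, both tracks {both_tracks:.1f} min")
```

Reading both tracks comes out at roughly 14 to 17 minutes, in line with the 15 minutes quoted; a single track would take only 7 to 9 minutes.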

Keeping the film running at full speed was important, as the time penalty for missing a block was large. There was no inter-block gap to allow the tape to stop and then accelerate. If the start of a block was missed, the film halted, powered into reverse for a short distance and then set off again in forward gear. In practice there was time to transfer a string of blocks from the disc but little else.

Data was input on five-channel paper tape. The results were written to film, converted offline to paper tape and printed on a battery of typewriters. It was all very primitive.

Liaison between programmers and engineers was close. There was no Microsoft empire of software to come between them. Programmers numbered the bits of a word (0 to 31) from the most significant end, relating them to the significance within a binary fraction. But the engineers knew them as pulses at digit times (1 to 32) arriving at intervals of 16 microseconds. These differences were trivial. When things went wrong, and they did quite often, the problem would be handled together.
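The two numbering schemes can be related by a small mapping. The article does not say in which order the pulses arrived, so this sketch assumes that the least significant bit came first, as is usual for serial arithmetic. On that assumption the programmer's bit 31 is the engineer's digit time 1. The function names are hypothetical.

```python
WORD_BITS = 32
DIGIT_PERIOD_US = 16  # interval between digit pulses, from the text


def digit_time(programmer_bit):
    """Engineer's digit time (1-32) for a programmer's bit number (0-31, 0 = most significant).

    Assumption: bits travel least significant first, so bit 31 arrives at digit time 1.
    """
    if not 0 <= programmer_bit < WORD_BITS:
        raise ValueError("bit number out of range")
    return WORD_BITS - programmer_bit


def arrival_us(programmer_bit):
    """Microseconds from the start of a word time until this bit's pulse (LSB-first assumed)."""
    return (digit_time(programmer_bit) - 1) * DIGIT_PERIOD_US


WORD_TIME_US = WORD_BITS * DIGIT_PERIOD_US  # one whole word passes in 512 microseconds
```

Whatever the pulse order, 32 digit times at 16 microseconds give a 512-microsecond word time, close to the nominal 500-microsecond add time quoted earlier.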

Programming did not require a lot of knowledge, but it did require much ingenuity and care. The number of functions was severely limited to the basic read, write, add, shift, jump, conditional test and so on that could be described by the four bits available to represent them within a 16-bit instruction. Two single-address instructions were stored in a 32-bit word, with the classical three machine cycles to process them (load and modify the first, obey the first and modify the second, obey the second). To speed access, sequentially addressed words were staggered three apart around a 16-word nickel delay line. Optimum timing was important, but it soon became obvious that this was only practical within the innermost loops. An extra cabinet of short delay lines provided 16 immediately accessible words that could be used to improve the timing.
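Staggering can be pictured as a fixed mapping from logical address to position in the circulating delay line. This sketch assumes that address a sits at position 3a mod 16; the article gives the spacing but not the exact mapping. The three-word spacing matches the three machine cycles needed for each instruction word, so the next word comes round just as it is wanted. The function names are hypothetical.

```python
SLOTS = 16   # words circulating in one nickel delay line (from the text)
STAGGER = 3  # consecutive addresses placed three word positions apart


def slot_for(address):
    """Physical position (0-15) in the delay line for a logical word address.

    Because 3 and 16 have no common factor, addresses 0-15 fill every slot exactly once.
    """
    return (address * STAGGER) % SLOTS


def wait_for_next(address):
    """Word times from one word's slot until the next sequential word comes round."""
    return (slot_for(address + 1) - slot_for(address)) % SLOTS


print([slot_for(a) for a in range(SLOTS)])
```

With this layout, addresses 0 to 15 occupy positions 0, 3, 6, 9, 12, 15, 2, 5, 8, 11, 14, 1, 4, 7, 10, 13, and the gap between consecutive words is always three word times.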

Other functions in a different format processed disc, film and input/output. Yet another special cabinet provided enhanced compiler functions to aid and speed the processing of input/output. These were of limited use. Checking the individual characters and assembling a decimal number was too sophisticated a process for a single machine command. Using a single command to find a specified character was fast but fraught: too easily could a paper tape or film be set running out of control.

Elliotts provided a small library of utility programs and subroutines, but only the two programs for translating input of programs were regularly used. Known affectionately as Albert and Roger after the authors, these set the form in which programs were written. Hidden within the other routines were intricate snippets of code that were extensively copied: the routines themselves were not used.

Programmers were on their own. They had half a sheet of machine code instructions and ready access to the engineers. Who could ask for anything more?

Having a computer on site and not having to rely upon a bureau machine increased dramatically the pace of development. A number of factors soon emerged that affected the strategies and techniques adopted:

producing error free input paper tapes was nigh on impossible

the computer itself was unlikely to run for more than 15 minutes without stumbling

the output would be riddled with errors.

Fortunately formal accountancy was not involved, so techniques could be used that would have been forbidden by auditors. A determination grew to achieve success whatever the difficulties, and it soon became obvious that, with so much to gain, expensive solutions could be justified. The saving grace was the length of the normal non-working week: three quarters of a week is outside normal working hours. It would have been unthinkable to employ clerical workers for a substantial part of this time, but running a computer with a few staff was perfectly feasible. Production was consequently scheduled for nights during the week and for days and nights throughout the weekends.

Probably the most accurate form of data preparation at the time was offered by punched cards, particularly the Powers-Samas method of punching followed by offset punching and then auto-verification. Cards in error were rejected and punched again. To emulate this two-stage process, five-channel paper tape produced by one operator was checked by another, with the machine locking if a difference was detected. A second tape was produced at the same time that was supposed to be error-free. Unfortunately it was not. A further check was introduced, with a third operator keying the data yet again. If errors were then found, a length of tape (usually about 500 characters) would be rejected and the process started from the beginning.
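The verification stages amount to comparing two independently keyed streams character by character. This sketch models only the comparisons: the verifier "locks" at the first difference, and the final check flags whole segments for repunching. How an operator cleared a lock is not described and is not modelled. Cutting the rejected lengths at fixed 500-character offsets is an assumption, and the function names are hypothetical.

```python
SEGMENT = 500  # approximate length of tape rejected after a failed final check


def first_difference(reference, keyed):
    """Index at which the keyed characters stop matching the reference, or None.

    Models the verifier locking as soon as the two streams disagree.
    """
    for i, (a, b) in enumerate(zip(reference, keyed)):
        if a != b:
            return i
    if len(reference) != len(keyed):
        return min(len(reference), len(keyed))  # one stream ran out early
    return None


def segments_to_repunch(second_tape, third_keying, segment=SEGMENT):
    """Start offsets of the segments whose final check fails and must be punched again."""
    bad = []
    for start in range(0, max(len(second_tape), len(third_keying)), segment):
        a = second_tape[start:start + segment]
        b = third_keying[start:start + segment]
        if first_difference(a, b) is not None:
            bad.append(start)
    return bad
```

A single miskeyed character at position 600 in a 1200-character tape, for example, would send the segment starting at 500 back to be punched again.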

The resultant input tapes were then much fragmented and disordered, so they had to be indexed and checked for completeness when the tapes eventually reached the computer. This could be several hours later, when the data preparation staff had departed. However unsatisfactory, the operator processing a tape with an error had to correct it somehow or other. Correcting fluid, Sellotape and unipunch were all brought into play. Waiting until the following day was not an option.

Paper tape was notoriously difficult to handle. Special boxes were designed to hold the variety of lengths awaiting processing. Rewinding was soon seen to be a useless chore, so the loose tapes were stored in large paper sacks to be rewound and used again if needed.

There must have been better ways of using paper tape, but they were not obvious at the time. Several years later, I was surprised to find (at Boulton Paul in Norwich) that an unattended Stantec Zebra would slowly read a 2-foot diameter reel of tape all through the night.

We very soon developed procedures to cope with a computer that was liable to malfunction at any time. The traditional format of 'grandfather', 'father' and 'son' files was of course adopted from the start. The later techniques of 'write rescue' and 'restore' were inappropriate. There was neither the time nor the capacity to make a full copy of the work-in-progress at any time.
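The grandfather-father-son scheme amounts to a simple rotation of reels. In this sketch a run reads the father and writes a son. Only after the run succeeds is the son promoted, the father kept as grandfather, and the old grandfather reel freed for reuse. A failed run just discards its son and starts again from the father. The class, method and reel names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MasterFiles:
    father: str                        # current master reel, read by the next run
    grandfather: Optional[str] = None  # previous master, kept in case the father is damaged

    def accept_run(self, son: str) -> Optional[str]:
        """Promote a successful run's output (the son); return the reel freed for reuse."""
        freed = self.grandfather
        self.grandfather, self.father = self.father, son
        return freed


files = MasterFiles("reel-1")
files.accept_run("reel-2")          # nothing freed yet
print(files.accept_run("reel-3"))   # reel-1 can now be cleared and reused
```

Keeping the previous week's input alongside the grandfather means a damaged father can be regenerated by rerunning that update.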

Rather, a typical process would be designed with a short 'Guides' block identifying the state of progress.

A typical Process for a file update would then be:

Set the 'Guides' to the initial settings

**Position all Files (Old, In and New) according to the 'Guides'

(Start of Item)

Set the 'Guides' to the present setting

Copy next Item from Old-File to Disc as Old-Data

Copy next Item from In-File to Disc as In-Data

Merge In-Data and Old-Data to create New-Data

Output results from New-Data

Write New-Data to New-File

(Go to Start of Next Item)
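The steps above can be sketched as a sequential master-file update. This is an illustrative model, not the Littlewoods program. Files are Python lists, and each record is assumed to be an (item code, quantity) pair sorted by item code. The "merge" is assumed to add the week's movements to the stock figure, and the `Guides` fields are hypothetical. What it shows is the key idea: the Guides are reset at the start of every item, so a failed item can be rerun by repositioning all three files from them.

```python
from dataclasses import dataclass


@dataclass
class Guides:
    """Hypothetical 'Guides' block: file positions at the start of the current item."""
    old_pos: int = 0
    in_pos: int = 0
    new_pos: int = 0


def run_update(old_file, in_file, new_file, guides):
    """Create a new master file from the old one plus the week's movements.

    old_file: [(item_code, stock), ...] sorted by item_code
    in_file:  [(item_code, movement), ...] sorted by item_code
    new_file: list receiving output; may hold records from an interrupted run
    guides:   a Guides instance; Guides() starts from the beginning
    Returns the lines 'printed' by this run.
    """
    # ** Position all files according to the Guides (discard output beyond the checkpoint).
    del new_file[guides.new_pos:]
    old_i, in_i = guides.old_pos, guides.in_pos
    printed = []
    while old_i < len(old_file):
        # Start of Item: set the Guides to the present setting.
        guides.old_pos, guides.in_pos, guides.new_pos = old_i, in_i, len(new_file)
        code, stock = old_file[old_i]  # Old-Data
        old_i += 1
        # Skip movements with no master record (a real run would report them).
        while in_i < len(in_file) and in_file[in_i][0] < code:
            in_i += 1
        movements = []
        while in_i < len(in_file) and in_file[in_i][0] == code:
            movements.append(in_file[in_i][1])  # In-Data
            in_i += 1
        new_stock = stock + sum(movements)  # merge In-Data and Old-Data into New-Data
        printed.append(f"{code} {stock} -> {new_stock}")  # output results
        new_file.append((code, new_stock))  # write New-Data to New-File
    # Leave the Guides pointing past the last item.
    guides.old_pos, guides.in_pos, guides.new_pos = old_i, in_i, len(new_file)
    return printed
```

If a stop occurs part-way through an item, calling `run_update` again with the same `Guides` discards the partial output and repeats only that item onwards.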

Any error detected resulted in a dynamic stop with an error number being displayed. The merge routine was more complicated than the others and was more prone to errors. The instantaneous action by the operator was to restart the routine three times. If no progress was made the Item was restarted with the files being positioned (see ** above). If this failed the whole process would be restarted. The corrective procedures were carried out by an operator with a maintenance engineer in attendance who took control if the fault persisted.
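The escalating drill can be expressed as a short control routine. In this sketch each level of recovery is a callable standing in for an operator action, and a `MachineStop` exception stands in for a dynamic stop with its displayed error number. The names, and the error number 7 used by the helper, are hypothetical.

```python
class MachineStop(Exception):
    """A dynamic stop, with the error number the machine displayed."""

    def __init__(self, error_number):
        super().__init__(f"dynamic stop, error {error_number}")
        self.error_number = error_number


def recover(restart_routine, restart_item, restart_process, routine_attempts=3):
    """Escalating restart drill after a dynamic stop.

    Each argument is a callable that raises MachineStop if the fault recurs.
    Returns the level at which work got going again.
    """
    for _ in range(routine_attempts):
        try:
            restart_routine()
            return "routine"
        except MachineStop:
            pass
    try:
        restart_item()  # reposition Old, In and New files from the Guides
        return "item"
    except MachineStop:
        pass
    try:
        restart_process()  # start the whole process again
        return "process"
    except MachineStop:
        return "engineer"  # fault persists: the maintenance engineer takes control


def flaky(failures):
    """Helper: a step that raises MachineStop `failures` times, then succeeds."""
    remaining = [failures]

    def step():
        if remaining[0] > 0:
            remaining[0] -= 1
            raise MachineStop(7)

    return step


print(recover(flaky(5), flaky(0), flaky(0)))  # routine fails three times; item restart succeeds
```

Treating every stop as routine and handling it at the cheapest level first is what let a machine that rarely ran 15 minutes without stumbling still finish its work overnight.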

The whole operating procedure was designed on the assumption that errors were normal and facts of life that had to be overcome using well regulated corrective measures.

Printed results were produced on sprocket fed stationery by 'Compuprinters' - electric typewriters with moving carriages driven by paper tape.

The computer wrote the information on to magnetic films. An offline film-to-tape converter punched four streams of paper tape that were then used to drive a battery of six Compuprinters (or as many as were operational at any time).

The magnetic films used for this purpose did not have any block structure. A film was cleared completely before use by rotating it several times on an eraser that produced a very strong magnetic field - strong enough to damage the wrist watch of an unwary operator. Failure to perform this complicated procedure correctly was clearly evident an hour or so later when gibberish was produced and the computer process had to be rerun.

Occasionally characters were punched incorrectly but more often a character would be missed completely or a spurious character inserted.

Even after almost 50 years the short length of paper tape printed on the cover of Resurrection is clearly intelligible (when viewed with the page inverted):

<letter shift> THE QUICK BROWN FOX.......<cr lf><figure shift> 1234567.........

The character with all holes punched at the start of the extract is <letter shift> and was the character most commonly inserted in error. In consequence lines of numeric information would be printed on the wrong shift. This was so common that the stock controllers would routinely interpret

PMP PMP PMP

as 0-0 0-0 0-0

showing zero dozens and zero singles.
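A small decoder that remembers the current shift, as the Compuprinters did, shows why one spurious character spoiled a whole line. Only two facts come from the article: letter shift is the all-holes character, and P and M share their codes with 0 and "-" (hence "PMP" for "0-0"). The other code values, including figure shift and space, are invented for this sketch.

```python
LETTER_SHIFT = 0b11111  # all five holes punched, as the article states

# Hypothetical code table: only the pairings P/0 and M/- are implied by the article.
FIGURE_SHIFT = 0b11011
SPACE = 0b00100
P_CODE = 0b01101
M_CODE = 0b00111
CODES = {P_CODE: ("P", "0"), M_CODE: ("M", "-")}


def decode(tape, figures=False):
    """Print a run of 5-bit codes, tracking the current shift as the typewriter would."""
    out = []
    for code in tape:
        if code == LETTER_SHIFT:
            figures = False
        elif code == FIGURE_SHIFT:
            figures = True
        elif code == SPACE:
            out.append(" ")
        else:
            letter, figure = CODES[code]
            out.append(figure if figures else letter)
    return "".join(out)


stock_line = [FIGURE_SHIFT, P_CODE, M_CODE, P_CODE, SPACE, P_CODE, M_CODE, P_CODE]
print(decode(stock_line))                                      # 0-0 0-0
print(decode([FIGURE_SHIFT, LETTER_SHIFT] + stock_line[1:]))   # PMP PMP
```

Because the shift persists until the next shift character, one inserted letter shift turned every following figure on the line into its letter equivalent.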

As all attempts to make the equipment more reliable proved ineffective, it was decided to detect and correct manually the errors on the printed sheets. Two ex-service officers were prepared to do this unusual job in the middle of the night. They became so adept that they also corrected stock-taking errors. These occurred particularly in the Chain Store Division. Before the Saturday rush the counters were replenished, stock levels recorded and the stockroom locked. But the counter- and under-stocks were not tallied until the selling peak had passed on Monday. If emergency issues had to be made from the stockroom, they were unlikely in the rush to be recorded. The apparent high sales one week would be welcomed, but the compensating low or even negative sales in another week would be discounted as anomalies.

Drills were developed for all the common operating tasks. Changing a magnetic film was not intrinsically difficult but it was easy to leave the track switch in the wrong position and for the film when activated to run off in reverse. Operators were taught to change films robot-like and did not make mistakes.

The computer day started at 0600 when the engineers carried out repairs and ran diagnostic tests for four hours. Most program development took place during the day from 1000 and production started in the early evening. The day finished at 3000 hrs (0600 the next day) when the engineers took over again.

Stock control for the Chain Stores was processed over night. If a serious fault developed there was little that could be done except continue into the day shift and perhaps reschedule the following night's work.

For Mail Order the bin cards arrived and were punched during Friday. There were then about 60 hours before the results had to be on the stock controllers' desks. At the start of the season early results were vital; at other times less so. Each Friday the Chief Stock Controller would agree some delay parameters. When production fell behind schedule by the agreed amount, a warning would be issued to a standby programmer, operator and company driver. If the delay reached the next level, they set off for the standby computer at Borehamwood. This procedure was invoked twice in three years but came close to being invoked on many other occasions. It did result in a restful night for the rest of the staff.
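The two-level standby arrangement is a simple threshold rule. In this sketch `warn_after` and `depart_after` stand for the delay parameters agreed each Friday; the article does not give their values, so the numbers used below are hypothetical, as is the function name.

```python
def standby_action(hours_behind, warn_after, depart_after):
    """Action called for when production is `hours_behind` schedule.

    warn_after and depart_after represent the weekly agreed delay parameters
    (values supplied by the caller; none are given in the article).
    """
    if hours_behind >= depart_after:
        return "standby team sets off for Borehamwood"
    if hours_behind >= warn_after:
        return "warn standby programmer, operator and driver"
    return "carry on"


print(standby_action(4, warn_after=3, depart_after=6))
```

Agreeing the thresholds afresh each week let the margin be tight at the start of the season and more relaxed later.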

A less intractable problem was keeping the operators profitably occupied when all went well.

The Computer Department had a staff of about 60. This included about 15 managers and programmers, 20 key operators and a few clerical staff including the night-time checkers. Running a full 168 hours required five shifts to allow for holidays, sickness and standby for emergencies. Consequently there were 10 operators, five computer engineers (on contract) and five printer mechanics. No one had an annual salary over £2000 and most earned less than £1000 per year.
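The case for five shifts can be checked roughly. This sketch assumes a basic working week of about 40 hours per person (not stated in the article) and two operators on duty at a time, which is what 10 operators across five shifts implies. It is only a plausibility check, not a reconstruction of the actual rota.

```python
import math

HOURS_PER_WEEK = 7 * 24   # round-the-clock running: 168 hours
WORKING_WEEK = 40         # assumption: basic weekly hours for one person
OPERATORS_PER_SHIFT = 2   # inferred: 10 operators spread over five shifts


def crews_needed(hours_to_cover=HOURS_PER_WEEK, hours_per_crew=WORKING_WEEK):
    """Smallest whole number of crews whose basic hours cover the week."""
    return math.ceil(hours_to_cover / hours_per_crew)


crews = crews_needed()                                   # 168 / 40 = 4.2, rounded up to 5
slack_hours = crews * WORKING_WEEK - HOURS_PER_WEEK      # spare crew-hours for holidays, sickness, standby
outside_hours_share = 1 - WORKING_WEEK / HOURS_PER_WEEK  # share of the week outside normal hours
operators = crews * OPERATORS_PER_SHIFT
print(crews, slack_hours, round(outside_hours_share, 2), operators)
```

On these assumptions five crews cover the week with about 32 crew-hours to spare, and roughly three quarters of the week lies outside a 40-hour working week.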

When Littlewoods came to replace the Elliott 405 (much sooner than originally envisaged) it was in a much better position to judge where it should go. But this alone would not have been sufficient justification for the large expenditure that had been incurred.

Controlling stock was not a straightforward process. Even if a controller knew what was going on his scope for action was often limited. Deliveries could be diverted or even cancelled but getting extra supplies quickly could be impossible.

Previously a stock controller would have been faced on a Monday morning with a pile of bin cards showing recent sales. He could search for what he thought were important items and make some urgent phone calls. He would then start on preparing a detailed list. Perhaps it was easier to add up figures than to take a difficult decision. By Thursday or Friday he might be ready to discuss with his chief further possible actions and priorities.

When the computer system was running, however inefficiently, he started Monday morning with a completed stock and sales list, albeit with scrawled changes. At the same time his boss had a summary and a weak stock list ready for the scheduled afternoon grilling. If that wasn't enough, their chief knew what was going on.

There was a saving in processing time and also a saving in stock levels because of the confidence that came from greater control.

But the greatest saving probably resulted not from the power of the computer, nor the enthusiasm and competence of the staff but from the fact that the computer could churn away whilst the rest of the world slept.

Editor's note: Gerald Everitt worked at Littlewoods Mail Order Stores from 1957 to 1961, and was the Computer Manager responsible for Development and Production during the last two years. His later career included a period as Director of the Computer Centre at Hatfield Polytechnic, now the University of Hertfordshire. Gerald can be contacted at .


Dear Editor,

When one of my friends and old colleagues passes away, I feel it is right to try to make some record of his achievements. John Clews found a niche in the computer field which he filled single-handedly and with distinction.

Most of his career was spent in the headquarters of the British Library at Harrogate during the period of computerising their catalogue. This is an enormous task for the major libraries. The data may be multilingual or even multi-script, and the records have to be sufficiently precise so search engines can pick out any item. The highly evolved Marc system permits libraries worldwide to share out the task, exchanging records electronically.

For Marc to function at all, characters must be coded unambiguously. Bibliographers made individual standards for this. At the same time computer workers were developing character coding standards. It is only a little exaggeration to say that the computer people regarded the bibliographers as myopic, and the librarians found computer workers arrogant.

Clews worked to form bridges between these two groups, to establish trust and respect. All the work he did was extremely diligent, thorough and reliable. Many years later the results of this excellent bibliographic work were built into the definitive Universal Character Set standard ISO 10646/Unicode.

He continued to advise libraries in many countries as an independent consultant, especially on the complex subject of writing Arabic with computers. He worked at this until shortly before his death at 55, on 16 November 2005.

Very sincerely,

Hugh McGregor Ross

Painswick, Gloucestershire

14 December 2005

Dear Editor,

Hugh McGregor Ross' piece¹ regarding the paucity of computer applications in the first six years or so was most interesting. I wonder, however, if I might take up two related points.

The article says the Manchester Baby represented the invention of a stored-program electronic digital computer. I believe this should be the invention of a stored-program (read/write) electronic digital computer.

This is because the Eniac was converted during 1947/1948 (certainly before June 1948) by using an additional Function Table to store the program and in fact this was the mode in which Eniac was used for the Monte Carlo work. But of course the Function Tables were read only.

In this mode Eniac had storage for 1800 instructions and I think that there were 19 read/write stores of 10 decimal digits plus sign available for data. Additionally, of course, the Eniac had the advantage of good card reading and punching facilities so that cards could be used for certain types of calculation as intermediate storage.

So for many purposes Eniac was a very much more powerful computer than the Manchester Baby. The central step which this latter took was the introduction of a program where the instructions could themselves be altered during the course of computation. This advantage was vital for the long term future of computing, but does not take away from the fact that as a practical machine the Manchester Baby lagged far behind its predecessor. So dismissal of the Eniac seems rather unfair.

Secondly, both Colossus (wartime code breaking) and Eniac (a number of applications, including the Monte Carlo thermonuclear calculations of Metropolis and Frankel) performed extremely important work. Indeed one could argue that the work done by Colossus should rank quite high among all the tasks undertaken by computers since 1943. So when it comes to usefulness, Colossus and Eniac between them certainly outrank the Manchester Baby and probably all the other early machines with von Neumann architecture as well.

Yours sincerely,

Crispin Rope

Suffolk

13 January 2006

by email from

¹ "Finding the Necessity for Invention" by Hugh McGregor Ross, Resurrection 36, pp7-16.

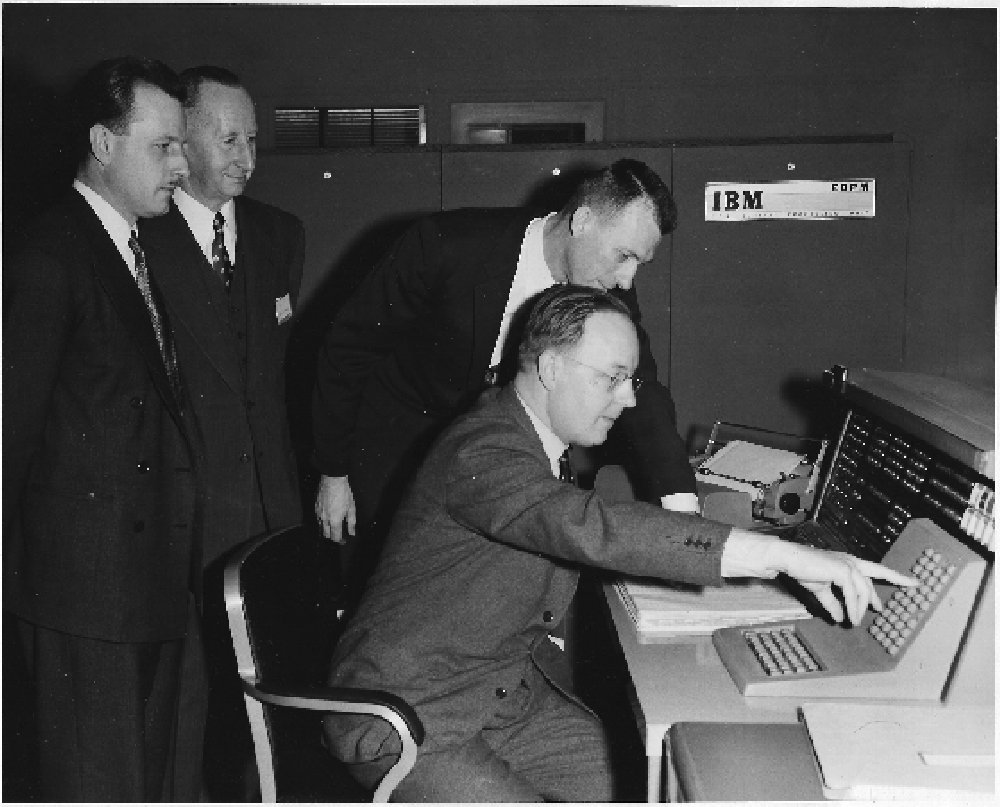

This picture, supplied by John Gearing, shows his late father Harold Gearing at the console of an IBM 702 at Poughkeepsie in New York state in 1956. The other people are identified on the back of the original photo as (from left to right) HA Smith of Metal Box Company; GJ Rebsamen, assistant secretary of Metal Box Company; and Mr Rayduois, IBM 702 Supervisor.

Editorial contact details

Readers wishing to contact the Editor may do so by email to . Photos of early computers are very welcome!


Every Tuesday at 1200 and 1400: demonstrations of the replica Small-Scale Experimental Machine at Manchester Museum of Science and Industry.

9 March 2006 London seminar on "The Scientific Data Systems Story".

21 March 2006 NWG seminar on "Digital Circuit Design Styles". Speakers Chris Burton and John Vernon.

29 April 2006 All day London seminar on best practices in computer conservation at BCS headquarters in Southampton Street. Registration needed: see (dead hyperlink - http://www.ccs-miw.org).

4 May 2006 AGM, followed by presentation on "My mis-spent youth" by Iann Barron.

Details are subject to change. Members wishing to attend any meeting are advised to check in the Diary section of the BCS Web site, or in the Events Diary columns of Computing and Computer Weekly, where accurate final details will be published nearer the time. London meetings usually take place in the Director's Suite of the Science Museum, starting at 1430. North West Group meetings take place in the Conference room at the Manchester Museum of Science and Industry, starting usually at 1730; tea is served from 1700.

Queries about London meetings should be addressed to David Anderson, and about Manchester meetings to William Gunn.

North West Group contact details

Chairman Tom Hinchliffe: Tel: 01663 765040.


[The printed version carries contact details of committee members]

Chairman Dr Roger Johnson FBCS

Vice-Chairman Tony Sale Hon FBCS

Secretary and Chairman, DEC Working Party Kevin Murrell

Treasurer Dan Hayton

Science Museum representative Tilly Blyth

Museum of Science & Industry in Manchester representative Jenny Wetton

National Archives representative David Glover

Computer Museum at Bletchley Park representative Michelle Moore

Chairman, Elliott 803 Working Party John Sinclair

Chairman, Elliott 401 Working Party Arthur Rowles

Chairman, Pegasus Working Party Len Hewitt MBCS

Chairman, Bombe Rebuild Project John Harper CEng, MIEE, MBCS

Chairman, Software Conservation Dr Dave Holdsworth CEng Hon FBCS

Digital Archivist & Chairman, Our Computer Heritage Working Party Professor Simon Lavington FBCS FIEE CEng

Editor, Resurrection Nicholas Enticknap

Archivist Hamish Carmichael FBCS

Meetings Secretary Dr David Anderson

Chairman, North West Group Tom Hinchliffe

Peter Barnes FBCS

Chris Burton CEng FIEE FBCS

Dr Martin Campbell-Kelly

George Davis CEng Hon FBCS

Peter Holland

Eric Jukes

Ernest Morris

Dr Doron Swade CEng FBCS

Readers who have general queries to put to the Society should address them to the Secretary: contact details are given elsewhere.

Members who move house should notify Kevin Murrell of their new address to ensure that they continue to receive copies of Resurrection. Those who are also members of the BCS should note that the CCS membership is different from the BCS list and so needs to be maintained separately.


The Computer Conservation Society (CCS) is a co-operative venture between the British Computer Society, the Science Museum of London and the Museum of Science and Industry in Manchester.

The CCS was constituted in September 1989 as a Specialist Group of the British Computer Society (BCS). It thus is covered by the Royal Charter and charitable status of the BCS.

The aims of the CCS are to

Membership is open to anyone interested in computer conservation and the history of computing.

The CCS is funded and supported by voluntary subscriptions from members, a grant from the BCS, fees from corporate membership, donations, and by the free use of Science Museum facilities. Some charges may be made for publications and attendance at seminars and conferences.

There are a number of active Working Parties on specific computer restorations and early computer technologies and software. Younger people are especially encouraged to take part in order to achieve skills transfer.
