David Hartley


Computer

RESURRECTION

The Bulletin of the Computer Conservation Society

ISSN 0958-7403

Number 52

Autumn 2010

| Chairman’s Report | David Hartley |

| Society Activity | |

| News Round-Up | |

| The Bloomsbury Group | Dik Leatherdale |

| More Recollections of ALGOL 60 | Tim Denvir |

| The Multi Variate Counter (MVC) | Andrew Colin |

| MVC - Life After Death | Dik Leatherdale |

| Letter to the Editor | Alan Thomson |

| Obituary: John Aris | John Aeberhard |

| Forthcoming Events | |

| Committee of the Society | |

| Aims and Objectives |


David Hartley

A new CCS Constitution was approved at an Extraordinary General Meeting on 15 October 2009. The Society’s year now runs from October to September. This report covers the extended period May 2009 to September 2010.

Technical progress in the various CCS projects is reported regularly in Resurrection.

The Turing Bombe and Colossus continue to be major attractions at the Cryptography Museum and the National Museum of Computing respectively at Bletchley Park. Both rebuilds have been maintained and further developed under their respective leaders who, with their teams of volunteers, have done much to uphold the objectives of the CCS.

The SSEM (The Baby) at the Manchester Museum of Science & Industry (MOSI) has spent much of the year in storage while the museum undertakes major rebuilding. The Baby will be back on display to the public before the end of 2010. In the meantime, the volunteers who support the rebuild have turned their attention to restoring the Museum’s Hartree Differential Analyser. This new CCS project is being led by Charles Lindsey.

The Pegasus at the Science Museum has had a less fortunate year. In July 2009 a small fire broke out in the power supply unit causing major concern both to the project and to the Museum authorities. Health and safety considerations required a prolonged and major effort to report on the state of the machine, including consideration of the viability of Pegasus as a working exhibit. Given that Pegasus is the oldest working computer in the world, and noting that another static Pegasus is preserved at MOSI, it was eventually agreed that the machine should be re-restored. Fortunately, this did not prevent us from celebrating the 50th birthday at a special seminar in May 2010. We are optimistic that Pegasus will be back in steam in 2010-11.

After five years’ sterling service, Arthur Rowles stepped down as leader of the Elliott 401 project, his position being taken over by Chris Burton. Unfortunately further progress has been inhibited by fall-out from the Pegasus problem, and the need for the Science Museum to review the health and safety implications of both machines. We hope for resumption of restoration work in 2010-11.

The ICT 1301 project located at Pluckley in Kent continues to make steady progress, despite an attempt by the local authority to classify the machine’s location as qualifying for business rates. A sustained campaign supported by both the BCS and the BBC won the day.

Following many years’ research by CCS Secretary Kevin Murrell, agreement was reached with the Birmingham Science Museum to move the Harwell Dekatron Computer to the National Museum of Computing to facilitate its restoration. A new CCS project, the work is being led by Tony Frazer and is progressing at an impressive rate. On completion, it is likely to oust the Science Museum’s Pegasus as the world’s oldest working stored-program computer.

Dik Leatherdale, who took over in 2008 as editor of Resurrection, has continued to give the CCS the benefit of his enthusiasm and expertise. Under Dik’s leadership, Resurrection is appearing at a steady rate of four issues a year, and remains the CCS primary record of its activities as well as being an outlet for much historical material.

During the last year, we have reviewed whether there was scope for a more scholarly outlet in our area of activity. To this end, we approached the Editor-in-Chief of the Computer Journal, the major and fast-growing BCS journal in computer science, with a view to exploring whether he would have an interest in publishing peer-reviewed papers on computer history. The suggestion was met with instant enthusiasm, and immediately three papers associated with the celebration in 2009 of the 60th anniversary of the Cambridge EDSAC were accepted. Although published online, they have yet to appear in print.

As part of the 50th birthday of the Science Museum Pegasus, Alan Thomson has masterminded the re-publication of the CCS booklet on Pegasus written and recently updated by Simon Lavington.

Alan Thomson has continued for a further year as website editor, being successful in ensuring that the site remains up to date. Alan is also working on achieving a degree of integration between the CCS and the BCS websites.

The 2009-10 London events programme, ably managed by Meetings Secretary Roger Johnson, continued to inform and entertain members and guests over a range of topics. Specific events worthy of special mention were an afternoon of Historic Computer Films, a celebration of 50 years of ALGOL (in a meeting jointly organised with the BCS Advanced Programming Group), and an event to mark the 50th birthday of the Pegasus in the Science Museum.

Events organised by the North-West Group included An Overview of Some CCS Projects by Chris Burton, and we arranged for this to be repeated at a joint meeting with the BCS Edinburgh Branch later in the year.

For many years, the North-West meetings have been organised by the long-serving Ben Gunn. Ben decided to retire in 2010, his duties being taken over by Gordon Adshead. The Society is grateful to Ben for 17 years of service to the Society.

We continue to be grateful to our two associated museums in London and Manchester for acting as hosts to CCS meeting programmes.

Contact details: Readers wishing to contact the Editor may do so by email to


Pegasus Project - Len Hewitt

The Museum decided to restore Pegasus to working order again which was a great relief. There were a number of conditions. The Pegasus had to be deemed safe to use by an external consultant. The Pegasus had to be cleaned using external volunteers. The Slydloc fuses in the PSU which contained small amounts of asbestos had to be replaced. The burnt wiring had to be replaced. A consultant was chosen and a meeting was held on 7th July at which we discussed the way forward. I supplied a CD with copies of all the Pegasus Manuals for the consultant’s use. We were allowed access to the machine on 10th August and we met the volunteer to discuss cleaning methods. Fortunately the conservator in charge of the cleaning, Ian Miles, listened to our suggestions as to how the cleaning should be done. In fact cooperation with Ian has been excellent.

I cleaned the PSU which was the dirtiest part of the machine before starting to replace burnt wiring.

I have attended six sessions aided by Peter Holland. After a number of visits we have progressed but not as far as I would have liked. Some problems with identifying wiring remain. The motor-driven margin rheostats were a later addition to Pegasus and are not documented at all. Progress is being made. We have not had any further discussions with the selected consultant.

I am hoping we can have power on the machine by early November.

Software Conservation - David Holdsworth

Bill Findlay is hoping to release an alpha version of his KDF9 emulator soon for use on a Mac with Snow Leopard. I am working with him on producing a GNU/Linux version. We hope to have a release which includes binary programs for both systems. We believe that there are some people in the world using a system called Windows, and we intend eventually to produce a binary program for it.

The release will also include source code. It is written in Ada 2005.

A reminder of the link for CCS KDF9 stuff: sw.ccs.bcs.org/KDF9. A link to the emulator release will appear there in due course.

Elliott 803 Project - Peter Onion

Not much to report from a hardware perspective as the machine has been running well all summer. On a few of the very hottest Saturdays the machine was only run for a few hours during the cooler conditions of the morning. In the last few weeks of August the temperature has fallen and one core store amplifier has consequently required an adjustment. The only other hardware problem has been a fault in the floating point logic which was caused by yet another dead OC 84 transistor.

The “803 programming challenge” run over the weekend of the Vintage Computer Festival only attracted a small number of entries, but they all functioned correctly.

TNMOC has received an offer of a second Calcomp 565 drum plotter, complete with pen assembly and even some original pens! This plotter was originally connected to an Elliott 503 so it carries “ELLIOTT OEM” markings. Contrary to an earlier report, the logic to decode the 72 instruction used to control the plotter is present in our 803. This means an interface can be constructed that connects to the 803 CPU in exactly the same way as the original plotter interface. The first step has been to recover some tapered pins for insertion into the peripheral connection blocks in the 803 cabinets. The interface will also provide an RS-232 output to allow plotter movements to be captured.

Harwell Dekatron Computer Project - Tony Frazer

I gave in to temptation and started work on the arithmetic rack starting with the pulse generator unit. First of all I vacuumed and wiped down the unit. All valves were removed, tested (where practical) and re-seated in their holders. Several showed a low mutual conductance and were replaced with known good examples. A loose wire (for V1 anode supply) was soldered back in place. Some in-circuit testing of passive components and diodes was also carried out with no problems found.

Dick Barnes, one of the designers, visited us. Together with Eddie and Ros, orders were successfully entered using the manual keys on the control panel. The trick was to enter a space character before keying the order. It was noticed that the indicator lamps and relays of the sending and receiving stores were operating, more or less as expected.

Using the above technique, Eddie and Johan were able to observe the Send and Receive relay groups and the store select relays operating. We also identified numerous poor connections to the 6v lamps which were rectified by further cleaning.

The Hartree Differential Analyser - Charles Lindsey

Funding for this project has been provided by the BCS (£1000 to enable us to get started) together with two organisations that the Museum approached. The Idlewild Trust and the Goldsmiths’ Company Charity came up with £2000 each. This should facilitate the interpretation of the machine to the public, and maybe even some enhancements beyond our initial goals.

Much time was spent in negotiating for a three-phase supply (now working) and in establishing the location of missing parts. We now believe we know where they all are, but cannot get at them because the aisles of the off-site storage site are clogged with bits of aircraft that were supposed to be stored elsewhere.

Work completed so far:

Further work needed to complete Phase I (which is to demonstrate that the machine is potentially viable) -

As to Phase II (which is to make the machine safe and practical to use), that is another story for now.


Dik Leatherdale adds -

The photo of the Differential Analyser in Resurrection 51 drew responses from three readers all of whom drew on Froese Fisher’s biography of Hartree. First up was the ever-resourceful Martin Campbell-Kelly who identified the people as (left, front to back) Jack Howlett, Nicholas Eyres, and J.G.L. Michel. On the right are Hartree and Phyllis Lockett Nicolson, who is known for the Crank-Nicolson method for the numerical integration of partial differential equations. John Deane and Mary Croarken both came to the same view, with Mary estimating a date between 1941 and 1943 for the photo. She should know. She also worked on the machine under Hartree.

Not Klaus Fuchs after all then. But Fuchs does cross our path later, for it was he who recruited Jack Howlett to Harwell in 1948.

ICT 1301 Project - Rod Brown

Ongoing work at the 1301 resurrection project has allowed us to prepare and deliver another public open day on 11th July. We had about 300 visitors and made lots of new contacts. After the event we invited several visitors back for a more technical day on 4th August. If I include a visit by Erik Baiger from Germany and a planned visit from another group later this year, we will have completed another four open days by the end of 2010. We will also be delivering a presentation to the BCS (Kent Branch) AGM meeting about the project in September.

Now work is focused on finalising the extra Drums attached to the machine. There is a practical side to this exercise for, if ever our original Primary Drum fails, we would need a proven working drum to press into service to supply the initial bootstrap code and give the machine a source of clock signals to drive the CPU. Actual working 1301 machine code on the drum is minimal at present and is probably less than 2,000 words in total. This represents the original initial orders (bootstrap code) and the demo program for the system as well as some of the simple test and data link programs to load and exercise the CPU.

Reliability of the CPU is continuing to increase with time, as the system reawakens after its long slumber. The owner has remarked several times this year that he is able to just turn the system on and load and run development coding with no apparent faults during the warmer summer period. This is a far cry from the early days of the project where it was not even possible to execute more than three instructions in sequence from the command console hand keys.

2012 is not only the year of the UK Olympics but also our machine’s 50th anniversary, so we are planning a form of celebration in that year. For news of this and ongoing project work visit the CCS website or the dedicated project website at ict1301.co.uk

Our Computer Heritage - Simon Lavington

Technical data has been uploaded for the EMIDEC 2400. A date has been resolved for an EMIDEC 1100 delivery. There is clearly much more work to be done on EMI computers. Failing the appearance of a more knowledgeable expert willing to help, I intend to visit Wroughton when time permits, to browse the EMI technical manuals held in the ICL Archives there. It is believed that Alan Thomson and others also have (copies of) original EMI source documents.

It has been realised for some years that the whole of the OCH website needs (at the very least) a spring clean, a new front door and see-through windows. The larger OCH project, as envisaged in 2003, is clearly not going to happen. (It may be remembered that our Museum partners had agreed to take over the task of applying for funding - so far with no noticeable success.)

The Pilot Study has become, by default, the only useful part of the exercise. This part needs to be transformed into an attractive, easy-to-use website. Whether, and how, it should be extended to embrace the computers of other UK manufacturers is a matter for debate. As it stands, the Pilot Study sets out to describe the great majority of commercially-available, pre-1970, British computers. This embraces the output of six companies/groups, namely: Elliott/Elliott-Automation, EMI, English Electric, Ferranti, Leo, BTM/ICT/ICL. A further six UK companies, namely AEI, Computer Technology Ltd., Digico, GEC, Marconi and STC, have not yet been considered within the chosen time-frame. Knowing the difficulty of attracting knowledgeable volunteers who are willing to research and compile technical data, it is recommended that we should concentrate on filling the gaps in the existing material for the six larger companies rather than attempt to add more companies. In any case, the six larger companies account for at least 95% by number of British-designed production computers installed up to 1970, and probably a larger percentage of these installations by value.

The Pilot Study (or similar) might in due course be extended to include computers of the 1970s and 1980s but, given the priorities imposed by fading personal memories and scarcity of source documents, it is recommended that extension be postponed until the original task is properly finished.

Bombe Rebuild Project - John Harper

Throughout this summer we have been able to put on public Bombe demonstrations almost every Sunday and many Saturdays plus Bank Holidays. During the demonstrations the machine is fully working and stopping on genuine stops. To do this everything has to be 100% with nearly 4,000 drum to commutator contacts having to be correct at every test and all these changing up to around 17,000 times during a run. Early demonstrations were given with the machine just going around and no sensing active, on the basis that few visitors know the difference. However the operators knew. To run live demonstrations gives them more confidence and a more tangible operation to describe.

We are about to produce a new schedule to provide demonstrations at weekends up to the end of the year. We have just about enough people trained to do this so further training is not planned until towards the end of this calendar year.

In my last report I said that we were starting on the process of handing over demonstration scheduling to Bletchley Park (BP) staff. Well this turned out to be a dismal failure. With hindsight perhaps we were a little premature in attempting this. We don’t have any plans to try involving BP staff again in the short to medium term.

There is a general problem that BP management does not have enough people with the necessary skills to take over any of the tasks involved in looking after our machine.

There are still no visible plans as to how the museum and site might develop. Meanwhile our rebuild team continues to disband. It has not completely collapsed because there are special technical investigations going on into how the machine was used during WWII. However, whereas we regularly met on a Tuesday with about five rebuild team members present we are now down to two or three and we no longer meet every Tuesday. Document tidying continues off-line for a possible handover to somebody in the future, but being in limbo regarding the future at BP there is little incentive to do this with any enthusiasm.

One thing that might be a small light on the horizon is that I have been invited to join a technical advisory committee. However this consists of technical experts, not all living close to BP, so it is hard to see how this will operate. It is intended to cover the whole area of technical activities during WWII and not specifically planning the future of the BP museum. There is no date as yet for the first meeting.

Our website is still at https://www.bombe.org.uk/

The Society has its own Web site, which is now located at www.computerconservationsociety.org. It contains news items, details of forthcoming events, and also electronic copies of all past issues of Resurrection, in both HTML and PDF formats, which can be downloaded for printing. We also have an FTP site at ftp.cs.man.ac.uk/pub/CCS-Archive, where there is other material for downloading including simulators for historic machines. Please note that the latter URL is case-sensitive.


CCS founder Doron Swade was interviewed for BBC Radio 4’s new series Fry’s English Delight in which Stephen Fry investigated the mysteries of the QWERTY keyboard layout. The programme was broadcast on 4th August. Look out for the repeat.

-101010101-

Sad to report the demise of two contrasting figures from the history of computing. Arthur Porter, who passed away a few months short of his 100th birthday, began building the well-known Meccano Differential Analyser as a third year undergraduate at Manchester, continued during his MSc and, for his PhD, helped design, commission and use the full-size machine (now split between MOSI and the Science Museum). In a two year spell at MIT he assisted in the design of Vannevar Bush’s Mk II DA. He saw the piece at MOSI in 2004 but sadly missed hearing of the more recent CCS project to restore it.

Nancy Foy was perhaps best known for her 1975 book The Sun Never Sets on IBM. She was also editor of Dataweek from 1969.

-101010101-

A permanent record of the 1998 celebrations of the 50th anniversary of the Manchester Baby computer can be found at www.computer50.org. The late Brian Napper was the creator of most of the material there which was substantially added to over the years until his death in 2009. Toby Howard of Manchester University’s School of Computer Science has recently taken over responsibility for this valuable and fascinating resource and can be contacted at toby.howard@manchester.ac.uk.

-101010101-

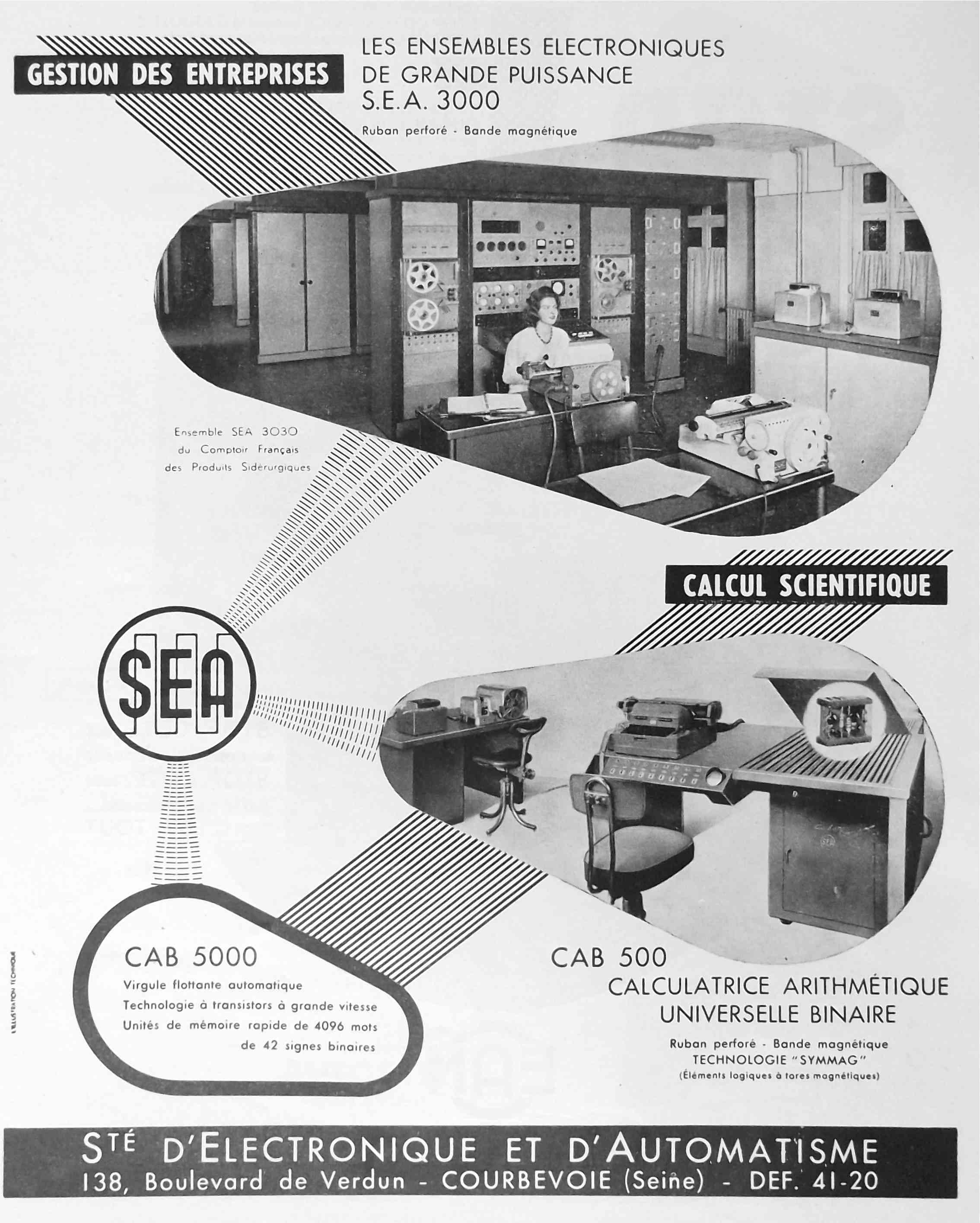

Paris in September saw a short exhibition celebrating the 60th anniversary of the first calculators produced in France by the Société d’Électronique et d’Automatisme (SEA). See sea.museeinformatique.fr.

Pierre Mounier-Kuhn (mounier@msh-paris.fr) of the Sorbonne is our contact.

SEA produced a number of innovative machines in the 1950s and early 1960s but merged with CII in 1966 and then with Bull in 1975. Further information about SEA was published in a paper entitled “Une aventure qui se termine mal” by F.H. Raymond, the founder of SEA. It can be found at jacques-andre.fr/chi/chi88/raymond-sea.html. Google will translate for you, if you ask it nicely.

A 1959 advertisement for SEA products


Readers will notice a certain theme running through this edition of Resurrection. All three lead articles concern work done at the University of London in the 1960s and a few years spanning either side. Difficult to believe, I know, but I didn’t plan it that way, it just happened. But I must plead guilty to compounding the problem by adding a third article to the two I already had.

Most of the work described in this edition took place in Gordon Square in Bloomsbury or within a few moments’ walk of it. Here in an attractive Georgian terrace was housed the Institute of Computer Science and its companion service bureau Atlas Computing Services Ltd., both based around the London University Atlas. The terrace had been home to Virginia and Leonard Woolf, Clive and Vanessa Bell, John Maynard Keynes, and Lytton Strachey. More famously from our point of view, Christopher Strachey (who was Lytton’s nephew) grew up there.

When I first went to work in Gordon Square the London Atlas was in full swing with the early difficulties sorted out and a huge throughput of work going on every day, seven days a week, 24 hours a day. Planning for the replacement CDC 6600 half a mile away was well in hand. That machine and its successors would serve the university community for many years as one of the three regional centres proposed in the Flowers Report of 1966.

But London University was and is an unusual organisation. Provision of a central computing facility was all very well but, such was the autonomy of the member colleges, they soon started to declare independence in a riot of incompatibility. Just across the Square, University College ran a large IBM 360, Imperial acquired a CDC 6400 and QMC an ICL 1905F later replaced by a 2980 with a DAP. Somehow, it all seemed to work. Goodness knows how.

No Pioneer Profile in this edition. Blown off course. Hope you enjoy it anyway.


In the early 1970s, many of the software techniques we now take for granted were in their infancy. Writing compilers in high level languages, sometimes in themselves, usually in a machine independent manner, all were regarded as cutting edge technologies. David Hendry’s team, sprung from London University, combined these methods and more. Tim Denvir, remembered by your editor as seriously clever but friendly, tells the tale.

Reading Resurrection 50 brought back many pleasurable reminiscences. I joined Elliott Brothers in 1962 as a new graduate, when the Elliott ALGOL 60 compiler was being developed. I was not a member of the ALGOL 60 compiler team, and I felt slightly envious of the exciting activities going on in the room next door. But the Scientific Computing Division had a tradition of internal talks, along the lines of academic seminars, in which members of a team would explain the latest ideas they were using in their work. This tradition was most valuable in raising the morale of all and in maintaining a sense of being in the technical avant garde.

I later migrated first to the University of London Atlas Computing Service and then to RADICS, Research and Development in Computer Systems, a company started by David Hendry from London University’s Institute of Computer Science. RADICS specialised in writing compilers, using a front end-back end technology which, while fairly commonplace now, was new at the time. By using a standard intermediate language, one could port the front end for a high level language to a new target machine by writing a back end which converted the intermediate code into the machine code of that target machine. This produced an economy of scale: for n high level languages and m machines one had n + m pieces of work to do rather than n × m. Furthermore, all the front and back ends were written in a customised language, BCL, that David had designed for the purpose. BCL was itself developed using the same technique, so that all front ends could also be ported onto a new host as soon as a new back end was written.
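The n + m economy can be sketched in a few lines of Python. Everything named here is invented for illustration: the two toy source languages, the tuple-based intermediate code and the two imaginary target machines bear no relation to BCL or to the actual RADICS intermediate language.

```python
# Intermediate code (hypothetical): a list of (opcode, operand) pairs.

def front_end_infix(source):
    """Toy front end for sums written as '1 + 2 + 3'."""
    terms = [int(t) for t in source.split("+")]
    code = [("PUSH", terms[0])]
    for t in terms[1:]:
        code += [("PUSH", t), ("ADD", None)]
    return code


def front_end_rpn(source):
    """Toy front end for reverse Polish text such as '1 2 + 3 +'."""
    return [("ADD", None) if tok == "+" else ("PUSH", int(tok))
            for tok in source.split()]


def back_end_stack(code):
    """Toy back end for an imaginary stack machine."""
    return [op if arg is None else f"{op} {arg}" for op, arg in code]


def back_end_accumulator(code):
    """Toy back end for an imaginary accumulator machine with a stack in store."""
    out = []
    for op, arg in code:
        if op == "PUSH":
            out.append(f"LOADI {arg}; STORE -(SP)")
        else:
            out.append("LOAD (SP)+; ADDM (SP)+; STORE -(SP)")
    return out


FRONT_ENDS = {"infix": front_end_infix, "rpn": front_end_rpn}
BACK_ENDS = {"stack": back_end_stack, "accumulator": back_end_accumulator}


def compile_for(language, machine, source):
    """Any front end can be paired with any back end via the intermediate code."""
    return BACK_ENDS[machine](FRONT_ENDS[language](source))


def effort(n_languages, m_machines):
    """Pieces of work with and without a shared intermediate language."""
    return n_languages + m_machines, n_languages * m_machines


print(compile_for("infix", "stack", "1 + 2"))
print(effort(3, 4))
```

Adding a third language to this sketch means writing one more front end, after which it runs on every machine that already has a back end.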

So it was that in 1970 I found myself producing some ALGOL 60 front ends, continuing some initial work that David had done. I say some front ends, plural, because by then ECMA had defined a number of levels of ALGOL 60, and we produced front ends for level 0 and 2, if I remember correctly. Honeywell and ICL Dataskil, a software house subsidiary of ICL, both required a compiler for the higher level of ALGOL 60, and we began to work on that one first. This required all the awkward features of recursion, call by name, block structure and so on.

Digico Micro-16

I had an able assistant, Pat Whalley, who always managed to grasp and implement my ideas with quiet efficiency despite my frequent lack of lucidity in explaining them. RADICS had an in-house minicomputer, a Digico Micro-16, with all of 8k of main store, and it was for this machine that the ECMA level 0 compiler was required. My initial idea was to build the more complex compiler front end first, and then so to speak produce a stripped down version for level 0. But level 0 of ALGOL 60 was so stripped of the more sophisticated features that it was essentially a different language, despite having the same syntax. It was better and easier to start pretty much from scratch and knock off the level 0 front end as a separate exercise. Another team was producing the back ends for four machines, including the London Atlas. Yet other teams produced the front ends for BCL and Fortran. Porting the BCL and the back ends from Atlas to the Digico machine seemed to go without difficulty. The RADICS compiler technology included a format for communication between the front and back ends; paper tape was still the most frequent hard data medium at the time, but formats for paper tape were only just being standardised. Developing software that was intended to be easily ported between different machines was still unusual, and machine-independent media and formats were not the order of the day. Fortunately all the different paper tape formats at least agreed on the representation of decimal digits, so “decimal coded tape” was used as a carrier for the intermediate language etc.

I perused Randell and Russell’s ALGOL 60 Implementation and other texts, and then used them as I do a recipe: as a source of principles rather than a strict rule book. I also borrowed one technique that I recalled from the Elliott ALGOL 60 team. Within an ALGOL 60 program there is great opportunity for forward references: one may encounter an identifier and not know to what it refers, even if a declaration of it has already occurred, until much later in the source script. So most ALGOL 60 compilers did their work in two, or possibly more, passes. A rather inefficient method was to read the actual source twice. For a long program on paper tape, this would have required rewinding the tape (often with the danger of generating static electricity and causing problems in the tape reader), with the consequent delay. With the Elliott technique, the first pass would output intermediate information in a format private to the compiler onto paper tape, and read it back in reverse order in the second pass. In that way no rewinding was required. Furthermore, it was actually easier to use the most recently produced information first in resolving forward referencing ambiguities. I used the same technique, using main store to hold the inter-pass information until it ran out, then sending it out to paper tape. In this way small but significant programs could be compiled in an apparent single pass, even on the 8k Micro-16.
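Why reading the inter-pass information backwards helps can be shown with a much simplified sketch. It is not the Elliott or RADICS algorithm. It assumes a flat program consisting only of uniquely named labels and goto statements, with no block structure, and a Python list stands in for the paper tape. The first pass resolves any reference whose label it has already met. The second pass reads the records last-first, so each later label is met before the earlier goto that needs it.

```python
def first_pass(source):
    """Scan forwards, emitting one record per item (the list stands in for the tape)."""
    known = {}      # label name -> position, for labels met so far
    records = []
    for position, (kind, name) in enumerate(source):
        if kind == "label":
            known[name] = position
            records.append(("label", name, position, position))
        else:
            # A backward reference is resolved at once; a forward one is left as None.
            records.append(("goto", name, position, known.get(name)))
    return records


def second_pass(records):
    """Read the records in reverse, filling in the targets the first pass left open."""
    seen = {}       # label name -> position, for labels met while reading backwards
    resolved = []
    for kind, name, position, target in reversed(records):
        if kind == "label":
            seen[name] = target
        else:
            if target is None:
                target = seen[name]   # a KeyError here would mean an undeclared label
            resolved.append((position, name, target))
    resolved.reverse()                # report the jumps in source order
    return resolved


program = [
    ("goto", "end"),     # forward reference
    ("label", "loop"),
    ("goto", "loop"),    # backward reference
    ("goto", "end"),     # forward reference
    ("label", "end"),
]
jumps = second_pass(first_pass(program))
print(jumps)
```

Neither pass revisits the source text, and the record list is traversed once in each direction, which is the property that made rewinding unnecessary.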

I was very interested to read in Brian Randell’s article in Resurrection 50 that Dijkstra’s X1 ALGOL 60 compiler signalled the first error that it found and then refused to carry on any further. I can confirm that recovering from an error in the source text so as to continue accurately processing the remainder was particularly awkward, but I believe we achieved a reasonable compromise in doing so. But at least half the text of our front end was concerned with error reporting and recovery. ALGOL allows labels and names of the somewhat notorious switch blocks as values that can be passed as parameters to procedures. Compiling goto statements in the context of recursive procedures was particularly tricky. Without goto statements the size of the front end would have been halved again.

We delivered the Digico Micro-16 compiler successfully. As the work for the level 2 front end and the two back ends for Honeywell and Dataskil was coming to a conclusion, RADICS was forced to close. There was a recession in 1971 and computer service firms were the first to go. We were seeking a take-over, but at the last moment the would-be buyer declined and offered jobs to the six members of staff they liked the look of most and redundancy terms to the rest. Those involved in the ALGOL 60 work were keen to complete the project. Together we approached Honeywell to finish the job as an independent group of four individuals. Honeywell were not all that enthusiastic and offered us terms that amounted to about 50% of our accustomed salaries. I suspect that the provision of an ALGOL 60 compiler was a matter of disputed internal policy for them, hence the limited terms. After a dispirited discussion amongst ourselves, we agreed not to continue. I went to the Honeywell management to convey the news, and they seemed in no way disappointed.

The attitude from ICL Dataskil could not have been more different. Dataskil’s John Chilvers was enthusiastic that we should continue, and he arranged premises for us in a room in Imperial College, organised a link to the Cambridge Atlas to host our development work, and generally secured our continuation for a reasonable fixed price. Once again, as a result of David Hendry’s underlying architectural ideas for compiler portability, porting all the work onto the Cambridge Atlas was seamless. We finished the job, delivered the compiler and prepared for acceptance tests. There were many sample ALGOL 60 programs published which were intended to test a compiler’s conformance to the more abstruse aspects of the language’s semantics. I had checked out a lot of these already. The ICL acceptance test consisted of running a suite of them and comparing the results with those from an existing ICL ALGOL 60 compiler. There was only one discrepancy, and I was rather pleased to point out that it was the ICL compiler that was producing the wrong result.

I still have notes and design papers relating to that work. It feels decidedly strange to realise that we did it all 40 years ago. I would like to acknowledge the parts played by those who worked on the associated back-ends, Clive Jenkins, Mary Lanch and Mary Lee; Pat Whalley who added programmer-power in the implementation of the front-end; and David Hendry whose ideas for the architecture of portable compilers customisable to languages, host and target machines underpinned the RADICS ALGOL 60 compilers as well as the other several compilers that RADICS produced.

Editor’s note: Tim Denvir started his career in electronics at Texas Instruments but, after reading Mathematics at Cambridge, migrated to systems programming at Elliott Bros. in Borehamwood. A position as Chief Systems Programmer at ULACS preceded the ALGOL 60 work at RADICS described above. Then, after a brief spell at ICL Bracknell, Tim joined STL/ITT Europe, first managing the OS for the ITT 3200 and later concentrating on Formal Methods. Tim finally spent some 20 years in consultancy, first at Praxis and then in his own company, Translimina. He can be contacted at timdenvir@bcs.org.


From 1960 to 1965 I was a Lecturer at the University of London Computer Unit. We had a Ferranti Mercury computer which supplied the bulk of the computing needs of London University. This is an account of the development of the Multi Variate Counter, a Survey Analysis suite I wrote during this period. The system went through two generations: first for the Mercury, and later for the Atlas.

Before the advent of computers, the chief mechanical aid for survey analysts was the punched card sorter.

Punched cards, as a means of recording data, were introduced by Hermann Hollerith to help analyse the 1890 census in the USA. The standard punched card had 80 columns, each capable of holding a hole at one or more of 12 positions. For numerical work only the lower 10 holes were used; they were called 0 to 9.

Survey records and questionnaires of the time usually included card punching instructions so that the answer to any question would always be located in the same column, in the same format. Part of a questionnaire about prisoners might read

| Type of Offence (col 12) | | Educational Attainment (col 13) | |
| Burglary | (0) | Illiterate | (0) |
| Assault | (1) | Elementary | (1) |
| Drunk in charge | (2) | Advanced | (2) |
| Fraud | (3) | First degree | (3) |
| Armed robbery | (4) | Higher degree | (4) |
| Bigamy | (5) | | |
| Drug dealing | (6) | | |
| Dangerous driving | (7) | | |
| Manslaughter | (8) | | |
| Murder | (9) | | |

The questionnaires were initially filled in with crosses or numbers in the printed boxes, and then transferred to punched cards according to the layout instructions.

The information on a set of cards punched to such a format could be extracted with a card sorter. A pile of cards was loaded into a source hopper, a selector was set to a chosen column, and the machine started. The machine would rapidly sort the cards into 12 destination hoppers (one for each row) depending on the hole punched in the selected column. To watch, it looked like magic. Each hopper kept count of the number of cards it received. Given (say) 1000 criminals, you could quickly produce a table like

| Type of offence | |
| Burglary | 340 |
| Assault | 77 |
| Drunk in charge | 107 |
| Fraud | 41 |
| Armed robbery | 34 |
| Bigamy | 5 |
| Drug dealing | 117 |
| Dangerous driving | 212 |
| Manslaughter | 58 |
| Murder | 9 |

The production of a table which involved a relationship between two (or more) questions was much more laborious. If you wanted to investigate the correlation (if any) between the type of offence and the prisoner’s educational attainment, you would first have to sort all the cards by column 12, and then the contents of each hopper, separately, by column 13. This required much patience, and accurate recording of numbers that appeared on mechanical counters. Handling numbers of several digits was not practicable, and when raw totals were recorded, the investigator still had to calculate statistics by hand. A further problem was that complex surveys required the cards to be passed through the sorter hundreds of times. Sooner or later, the cards would sustain damage, lose data and clog up the mechanism.

In spite of their drawbacks, cards and card sorters remained in widespread use for 80 years. Until around 1975 computers even used standard cards as an input medium. Much of the earliest crystallographic work was done with their aid, and card sorting was one of the services offered to the University by the Computer Unit.

Early computers, even with their limited storage facilities, had obvious advantages over card sorters. You could easily check for omitted answers, handle multi-digit numbers, and extract the data needed for relationships between several questions. The data could still be handled one record at a time. When all the data had been read the machine could calculate and display the statistics. The cards would only need to be read once.
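The contrast with the sorter can be made concrete. The following is a minimal sketch in present-day Python, not MVC itself, and the record layout and sample values are invented for illustration. Each record is read exactly once, and both the one-way table and the two-way table are accumulated in that single pass.

```python
from collections import Counter

# Hypothetical records: (offence_code, education_code), standing in for
# columns 12 and 13 of the prisoner questionnaire. The values are invented.
records = [(0, 1), (0, 0), (3, 2), (0, 1), (7, 1), (3, 3)]

def tabulate(records):
    """Read every record exactly once, filling two tables as we go."""
    by_offence = Counter()  # one-way table: what one sorter pass would give
    cross = Counter()       # two-way table: offence against education
    for offence, education in records:
        by_offence[offence] += 1
        cross[(offence, education)] += 1
    return by_offence, cross

by_offence, cross = tabulate(records)
print(by_offence[0])   # number of burglaries in the sample
print(cross[(0, 1)])   # burglars with elementary education
```

On a sorter the second table would need one further pass per hopper; here it costs one extra counter update per record.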

People have sometimes asked me, “why MVC?” 1960 was also the year I got married, and people acquainted with my wife may recognise the initials.

MVC was defined as a simple specification language.

The first section of an MVC program described the layout of the data on the card.

The format of each field on the card could be specified as one of

- Numeric (spanning one or more columns)
- Yes/no (what we would now call Boolean)
- Polylog (this specified a field of several hole positions, of which just one was selected)

The second part of the program defined the statistics needed. These included simple counts, averages, standard deviations, contingency tables with chi-squared values, and averages tabulated by different values of polylog variables. For example, you could generate a table that gave the average ages of Labour, Liberal and Conservative voters.

The data was still supplied on cards, but the program and output both used 5-hole paper tape.

Both versions of MVC were written entirely in assembly code. When I started work on the project our Mercury was booked up for 24 hours every day. I managed to secure a daily slot of 15 minutes to develop and test the program, but my regular time was 2:15 to 2:30 a.m. For a couple of months I slept at the Computer Unit, being woken at 2:00 o’clock for my run. This eventually ended when the machine was taken out of service for a week for the engineers to “pull up the waveforms” (which is what engineers did in those days). They only worked during the day and let me use the computer all night. By the end of the week the system was ready.

I wrote and circulated a User’s Manual, but most of my clients didn’t look at it until they had already punched their data on to cards. The data was often ambiguous and unusable. I remember an interview with a lady of formidable appearance doing research on students’ success rate at University. She explained that each card held data for a single student. Column 22 held a 1, 2, 3 or 4 depending on the class of degree awarded.

“What about students who don’t complete the course?” I asked.

“Oh that’s easy,” she replied. “I use column 22: I punch 1 if they leave after one year, 2 if they leave after two years, and so on.”

“But” I said diffidently. “How do you expect the computer to tell apart a student with First-class honours from one who is sent down during the first year?”

The lady looked at me with scorn. “That’s a silly question. I use green cards for students who fail, and white for the others.”

MVC received a lot of use for sociological and medical research. I helped the users, and generally ran the programs myself, since the policy was not to let unqualified users anywhere near the precious machine.

One application influenced my life. It was a time when the link between smoking and lung cancer was just emerging, and I ran a survey which investigated the connection. As the tables were revealed by the clanking teleprinter, the cigarette fell out of my mouth and I have never smoked again.

In 1963 the Computer Unit decided to upgrade to a Ferranti Atlas computer. This was the super-computer of the day, completely transistorised, with 32K of RAM, a virtual memory system, magnetic tape drives, an operating system that could run many applications at the same time, and a generous order code that included a huge range of data handling operations such as string manipulation and mathematical functions. Data could be stored on magnetic tape. At the time of our decision the prototype Atlas was under development at Manchester University.

MVC was a standard utility offered by the Computer Unit, and it was clear that we should transfer it to the Atlas. We also had the opportunity to improve the system, making full use of the better facilities on the new machine.

At the time, the preferred language in UK academic circles was ALGOL 60. The MVC Mark 5 specification language took over the lexical rules of ALGOL. In the data description section, users could now supply identifiers both for the variables and for the sets of possible answers to polylogs. Raw data from cards was defined in statements like:

| raw numerical; | |
| income,3,5; | (i.e., 5 digits starting in column 3) |
| raw binary; | |
| degree, 11/0; | (column 11, row 0) |
| raw polylog; | |
| politics,14/1,6, tory,liberal, labour,other, dont_know, wont_say; | (column 14 rows 1-6) |
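To show what these three kinds of declaration imply, here is a rough Python sketch of decoding them from a card. It is an illustration only: the card representation (a list of 80 sets of punched row numbers), the function names, and the choice to return `None` for a malformed or unpunched field are all assumptions, not a description of how MVC was implemented.

```python
def numeric(card, start, width):
    """Read `width` digits starting at 1-based column `start`.
    Returns None unless every column holds exactly one punched digit."""
    digits = []
    for col in range(start, start + width):
        holes = card[col - 1]
        if len(holes) != 1:
            return None
        digits.append(str(next(iter(holes))))
    return int("".join(digits))

def binary(card, col, row):
    """True if the given row is punched in the given 1-based column."""
    return row in card[col - 1]

def polylog(card, col, first_row, last_row, names):
    """Name of the single punched row in first_row..last_row, else None."""
    punched = [r for r in range(first_row, last_row + 1) if r in card[col - 1]]
    if len(punched) != 1:
        return None
    return names[punched[0] - first_row]

# An invented card: income 12500 in columns 3-7, column 11 row 0 punched,
# column 14 row 3 punched.
card = [set() for _ in range(80)]
for col, digit in zip(range(3, 8), [1, 2, 5, 0, 0]):
    card[col - 1] = {digit}
card[11 - 1] = {0}
card[14 - 1] = {3}

POLITICS = ["tory", "liberal", "labour", "other", "dont_know", "wont_say"]
income = numeric(card, 3, 5)
degree = binary(card, 11, 0)
politics = polylog(card, 14, 1, 6, POLITICS)
print(income, degree, politics)
```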

Sets of related data items could be grouped into vectors.

An important new feature was the ability to form derived variables. An ALGOL-like expression syntax let the user define variables as arbitrary functions of raw variables. The syntax included a wide range of mathematical functions such as logs, and logic functions with multiple boolean arguments, including all, some and none. This example is a coding of the Du Bois formula¹:

skin_area := 71.84*exp(0.425*log(weight)+0.725*log(height));
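The expression uses exp and log to raise weight and height to fractional powers, since exp(a·log(x)) equals x to the power a. A short Python transcription makes the equivalence visible. The function names are invented, and the units (weight in kilograms, height in centimetres, area in square centimetres) are the usual convention for this formula rather than something stated in the MVC example.

```python
import math

def skin_area_exp_log(weight_kg, height_cm):
    """The MVC expression transcribed term by term."""
    return 71.84 * math.exp(0.425 * math.log(weight_kg)
                            + 0.725 * math.log(height_cm))

def skin_area_power(weight_kg, height_cm):
    """The same quantity written with explicit powers."""
    return 71.84 * weight_kg ** 0.425 * height_cm ** 0.725

area = skin_area_exp_log(70.0, 170.0)
print(round(area / 10_000, 2), "square metres")  # roughly 1.8 for this subject
```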

The data format was fixed, but allowed for many data items to be omitted if they were not relevant. If a subject did not own a car, there was no point in asking about its year of manufacture. On the other hand, certain omissions, or certain combinations of values could render the record invalid, and cause its rejection, with a suitable report. The boolean existence function E was true if its argument was defined.

MVC provided ‘checking statements’. Examples are

accept (AGE > 20);

reject (time_in_present_job > age - 13);

Data might be rejected because of punching errors, or incorrect or frivolous data supplied by the subject.

Table definition followed a few simple rules:

For example, the definition

title Family size of graduates and non-graduates;

include (married or widowed);

tabulate by has_degree;

mean number_of_children;

stdev number_of_children;

might generate

FAMILY SIZE OF GRADUATES AND NON-GRADUATES

| | DEGREE | NO DEGREE | TOTAL |
| TOTAL | 117 | 1389 | 1506 |
| MEAN NUMBER_OF_CHILDREN | 3.72 | 3.51 | 3.53 |
| STDEV NUMBER_OF_CHILDREN | 1.73 | 2.50 | 2.44 |

MVC also handled measures of association. For example, the command

chisquare age_group, marital_status;

would display the chi-square statistic for the contingency table between age_group and marital_status, and a significance marker that indexed a built-in table for the correct number of degrees of freedom. Product-moment correlations could be specified by a similar mechanism.
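For readers who want the arithmetic behind such a command, the following Python sketch computes the Pearson chi-square statistic and the degrees of freedom for a contingency table. It is a textbook calculation under stated assumptions, not a reconstruction of the MVC code: the function name and sample counts are invented, and every row and column total is assumed to be non-zero.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a
    contingency table given as a list of rows of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns were independent
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof

# Invented 2 x 2 table, e.g. two age groups against two marital statuses
stat, dof = chi_square([[10, 20], [20, 10]])
print(stat, dof)
```

The degrees of freedom are what would select the row of a significance table, which is the role the built-in table played in MVC.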

The same commands (chisquare and correlation) could be used on lists or vectors of variables, producing triangular matrices of results that could spill over several pages of lineprinter paper.

The magnetic tape drives allowed MVC users to keep their data on the machine permanently. They could add more data at any time, fix reported errors, and ask for additional tables, all without having to read in the entire data set each time.

The implementation of MVC Mark 5 turned out to be a traumatic experience. The group working on the prototype machine in Manchester agreed to run our paper tape programs “when they had time”. I set to work, wrote a few thousand lines of assembly code, and posted the tape to Manchester. After a few days it was returned, with, of course, a list of syntax errors. This continued for some time to my increased frustration. On the worst occasion, my tape was returned after a fortnight. It clearly had been dropped and trampled on, and it came back with a note saying “Won’t go through reader”.

In desperation, I decided to go to Manchester for a few days. My wife accompanied me with our two small children. We stayed in a cheap hotel, and I spent all my days hanging about the Manchester University Computer Laboratory hoping to get another run and generally getting in the way, while my wife had to wash clothes in a small washbasin and wander about the park with the children when the weather allowed. During the week I was there, I made no progress whatever.

Eventually our own Atlas was delivered, and progress was greatly speeded up. A working version of the system was available in a couple of months.

Here I have to report a regret and a blunder.

There were many instances where the data for a problem took several formats. You might be dealing with records of families, with given incomes, parents’ ages and number of children, and a separate record for each child. I called this the “family problem”, and spent many hours wondering how best to handle it, without arriving at a solution. I might, indeed ought to, have invented the relational database, but I didn’t. Perhaps my thinking was constrained by having access only to linear storage media. High-capacity disks were not generally available at that time.

My blunder was to code MVC entirely in assembly code. At the time computing was so expensive, and in such short supply, that efficiency was of prime importance, and everyone knew that assembly code was several times more efficient than a high-level language. Besides, my colleagues and I all accepted that just as real men don’t eat quiche, real professionals don’t like effeminate high-level languages and prefer to live on a diet of pure machine code. The outcome was that when the Atlas finally died, MVC died with it. I wish now that I had written it in C, but at the time that language had not yet been invented.

Finally, it is a sobering thought that MVC was firmly a product of its own time. There is nothing MVC could do that cannot be easily duplicated with Microsoft Access, perhaps helped by a few lines of Visual Basic.

¹ The Du Bois formula is used to estimate total skin area in patients who have received severe burns.

Editor’s note: Following an Oxford Engineering degree, Andrew Colin has held computing positions at Birkbeck College (see Resurrection 5), the University of London Computer Unit and Lancaster University (Resurrection 40) before taking the Computer Science chair at Strathclyde. From 1986 he shared his time between the University and the private sector before retiring in 2007. Latterly he has started all over again with an Open University Maths degree and is now researching quantum physics at Strathclyde. He can be contacted at Andrew@crm.scotnet.co.uk.


The London University Atlas was unusual in that much of the cost of the machine was provided by a parallel computer bureau operation - Atlas Computing Service. ACS had its own staff and, under the chairmanship of Sir (as he then wasn’t) Peter Parker, we plugged away at earning enough money to pay for Atlas. We were, however, greatly assisted by the academics who provided Atlas with software which we put to revenue-earning use: Fortran V from a team led by Jules Zell, Atlas Commercial Language (ACL), a COBOL-influenced programming language by David Hendry, Tim Denvir and others and, not least, Andrew Colin’s MVC. This is the tale of how MVC didn’t disappear when Atlas slipped beneath the waves, but rose from the dead.

In 1970, armed with some theoretical knowledge of compilers gained from Tony Brooker and Richard DeMorgan at Essex, all of, ooh, two weeks’ experience of MVC and the overconfident arrogance of youth, I took on the job of implementing MVC for the intended successor machine to Atlas, a CDC 6500. Marketing demanded a change of name. Multi Variate Survey Language (MVSL) was the grudgingly agreed compromise. My partner in crime was Bertie Coopersmith, my then manager, who had been a member of the Fortran V team. Bertie and I put our heads together and agreed an approach. We were both fully committed to the principles of MVC and both seriously impressed by the novelty of the ideas it embodied. The use of a “proper” programming language with if, for and goto statements etc. to drive what was essentially a package enabled the programmer to create a close analogue of the way in which the paper questionnaires were designed. Best of all, the use of variable names in the output tables seemed to be inspired.

We decided that full compatibility was not required. MVC programs tended to be run once, perhaps a few times, and then thrown away. It was the nature of the task. The 6500 threw up several challenges and opportunities. The most obvious was that paper tape handling on CDC equipment was awful, so source programs had to be on cards. This forced the compatibility issue. Keywords being identified by underlining wasn’t going to be a runner. Reserved words it had to be. But with spaces allowed in both reserved words and variable names, it was not as straightforward as it might have been.

The 6500 had discs, something the London Atlas never had. This raised all sorts of possibilities for passing information around. And whereas the Atlas Supervisor had just supported a compile and go mode of operation, the SCOPE operating system had a proper command language allowing the possibility of escape from the monolithic approach of a single program which could do everything to a structure in which various specialised software elements could be called upon as the occasion demanded.

We discovered a compiler for Martin Richards’ BCPL language which I used to write the compilers - there were four altogether. So Andrew Colin’s wish that he had written MVC in C almost came true, for BCPL is C’s grandfather. Bertie suggested that, instead of producing object code, I should compile into assembly language (COMPASS) - “it’ll be easier to debug” he said. He was right. And I found I could implement the compilers as a single pass; COMPASS would tie up all the forward references for me. Actually that’s cheating. There were still three passes, but I only had to write one of them. Always cheat if you can. Only later did we discover that the CDC Fortran compiler, FTN, did the same.

In an echo of Andrew Colin’s experience, initial progress was slow. Lacking our own CDC machine, twice a week we had two hours machine time on a nearby minimalist 6400. But most of this time was used by the operating system support team who, quite unreasonably in my view, wanted to be able to provide a service on our own machine from the day of delivery.

Although BCPL was a stack-based language, unlike C it had no facilities for heap storage and some form of heap storage is essential for compilers. The ICL 1900-style datum and limit store on the 6500 didn’t help. I implemented a heap area at the base of the stack and, every time it needed to be expanded, arranged for a BCPL function to be called which, having recursed several times, returned, not back down the recursion stack, but back to the original point of call, the return address on the stack having been nobbled on the way up. Thus was provided the required extra heap space. Not kosher perhaps, but effective. Always cheat if you can.

By the time our own machine was delivered all the list processing primitives, the dictionary (including the reserved words and operators as well as variables and values), the syntax analyser and the greater part of the expression compiler were complete. With hindsight, the early frustrations were beneficial, for the most difficult parts of the compilers, those which required much thought and contemplation, were completed before the mad rush between the 6500’s delivery and Atlas’s demise.

One of the most intriguing features of MVC, at which Andrew Colin has hinted, was that at the start of processing each questionnaire, all the variables were set to “unknown”. Only if the program passed through a statement which set the value were proper values assigned. Any derived numeric expression involving an unknown value was itself unknown. Boolean expressions were a little more complex as (for example) TRUE OR UNKNOWN is TRUE, whereas TRUE AND UNKNOWN is UNKNOWN. But what was UNKNOWN ≡ UNKNOWN? I spent a happy afternoon deciding that it was UNKNOWN. The UNKNOWN feature is one which, I have always considered, might profitably be adopted by other programming languages to locate possible errors resulting from uninitialized variables, but I have never seen it replicated.
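The three results quoted above agree with what is now usually called Kleene's strong three-valued logic. The Python sketch below fills in the rest of the truth tables on that basis. Only the three quoted cases come from the account above; the remaining cases (such as FALSE AND UNKNOWN giving FALSE), the function names, and the use of `None` to stand for UNKNOWN are assumptions for illustration.

```python
UNKNOWN = None  # stand-in for MVC's "unknown"; True and False are the known values

def and3(a, b):
    if a is False or b is False:
        return False            # a known FALSE decides the result
    if a is UNKNOWN or b is UNKNOWN:
        return UNKNOWN
    return True

def or3(a, b):
    if a is True or b is True:
        return True             # a known TRUE decides the result
    if a is UNKNOWN or b is UNKNOWN:
        return UNKNOWN
    return False

def not3(a):
    return UNKNOWN if a is UNKNOWN else (not a)

def equiv3(a, b):
    if a is UNKNOWN or b is UNKNOWN:
        return UNKNOWN          # includes UNKNOWN compared with UNKNOWN
    return a == b

print(or3(True, UNKNOWN), and3(True, UNKNOWN), equiv3(UNKNOWN, UNKNOWN))
```

With only nine input pairs per operator, such functions can equally be stored as small lookup tables.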

If handling of UNKNOWN in Boolean logic was a mathematical minefield, it was at least easy to implement, many of the logic operations being handled by lookup. Not so numeric variable processing where, I assumed, I would have to store both the value and a KNOWN/UNKNOWN marker, until I discovered CDC’s floating point value INDEFINITE. INDEFINITE was an exponent bit pattern which, it could be arranged, propagated itself in calculations in precisely the same manner as MVC’s UNKNOWN. It could also be arranged that INDEFINITE could not arise by accident. Job done!
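A present-day analogue of this trick, offered only as a comparison and not as a description of the CDC hardware, is the IEEE 754 NaN ("not a number") value, which also propagates through arithmetic without a separate known/unknown marker. The variable and function names below are invented.

```python
import math

UNKNOWN = float("nan")  # modern stand-in for an "unknown" numeric value

def monthly_income_plus_age(income, age):
    """Any arithmetic involving an unknown operand yields unknown."""
    return income / 12 + age

known = monthly_income_plus_age(12000.0, 42.0)
derived = monthly_income_plus_age(UNKNOWN, 42.0)

print(known)                 # an ordinary number
print(math.isnan(derived))   # the unknown has propagated
print(UNKNOWN == UNKNOWN)    # NaN compares unequal even to itself
```

One difference is worth noting: comparing NaN with NaN gives a definite False, not a third "unknown" truth value, so the Boolean side of the scheme would still need separate handling.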

What else changed? Well, there were two major changes already implemented on Atlas by a person or persons unknown. Polybin variables were multiple-choice like polylogs, but the respondent could choose any number of the possible answers. Think “What newspapers have you read this week?” rather than “What is your favourite newspaper?”

And for smaller surveys, reading cards and accumulating tables could be accomplished in a single pass, without an intermediate data tape. Both were duly replicated in MVSL.

I added ALGOL 60-style compound statements but not block structure which would have been unhelpful in a largely declarative language. It improved readability no end. I decided to allow the use of general expressions rather than just constants in various places. That wasn’t sufficiently useful to justify the effort involved.

The computer industry seemed unable to agree on the naming of the two top rows of an 80-column card: + and -, 10 and 11, X and Y all had their adherents. Andrew Colin had called them u and l (upper and lower). I added to the confusion by going for -2 and -1 which enabled me to identify them numerically. I still think -2 and -1 the most logical choice. Nobody else does.

Our customers tended not to use sophisticated mathematics. Market research rather than scientific discovery was their interest. I omitted all of MVC’s built-in maths functions, but included an interface for calling user-written Fortran subroutines. This proved most useful, but not for its anticipated purpose.

I implemented the run-time library in assembler and the program for generating the tabular output in Fortran with help from the Atlas Computer Lab at Chilton. Sometime in 1972, we started our first customer application by re-hiring an experienced MVC programmer, Alan Joy. Alan’s programming style was not the same as mine and he found problems I had missed. He tossed error reports across the office with alarming frequency. I tossed back clearances as rapidly as I could. Soon the system started to look respectable and the reports slowed to a trickle. Business started coming in. We stopped using MVC on Atlas before the switchoff date. One memorable but dramatic week 80% of the CDC machine billings were earned by MVSL.

There was one important matter of which both Bertie and I were in complete ignorance. Just down the road in BARIC’s Newman Street offices, we had a business rival. Indeed an Atlas-based business rival. For Ferranti/ICT/ICL had implemented a competitor package - Atlas General Survey Program (AGSP). AGSP had some similarities to MVC, but was rather different in detail. It was run on the Manchester Atlas. When that machine closed we were approached by BARIC in the person of Arthur Sank whose baby AGSP was. “Would we” Arthur asked “provide BARIC with machine time on our Atlas?” We would. And we continued to do so until our Atlas shut. At which point Arthur took himself off to Chilton until that machine also met its maker. But Bertie managed to convince Arthur that MVSL could be a viable option for BARIC when the time came. Arthur requested some extra facilities to make MVSL more AGSP-like before he would agree. One of these looked difficult. Bertie and I concluded that the requirement could be met by a source-code pre-processor in the form of a rather eccentric macro generator. Arthur agreed that it might fit the bill.

All that remained was to actually write it. I was up to my neck in development. But we had a macro-generator tool which was put to use. Test programs were run which took between 50 and 100 times longer to pre-process than they did to compile. Nothing for it but to start again. Bertie proposed that he should draw a flowchart, I should code it in BCPL and he would debug it. Two weeks later he handed me a dozen sheets of lineprinter paper sellotaped together. “Sorry it’s a bit scrappy” he said, “I was doing it in the bath last night.” I duly coded it and handed it over. A week later it was starting to work. Bertie handed it back to me for review. Readers will recall that Bertie’s background was in Fortran. He’d peppered the code with GOTO statements. Horror! Mindful of Dijkstra’s famous paper, I removed all the GOTOs. We went on like this for a couple of happy weeks before handing it over to Arthur who pronounced himself more or less satisfied.

Arthur and I worked closely together for some time. Since Andrew Colin has brought his lady wife into the story, so will I. When Arthur was out of the office, his phone was usually answered by a young lady who worked in his team. When she and I fetched up at ICL’s Hartree House in 1975, she knew who I was. I’d not the foggiest who she was. She had the advantage of me. In so many ways, she still has.

The first thing which will strike the reader as odd is the assertion that a Hollerith punched card is not only a medium for the conveyance of 80 characters of information, but can also be seen as a carrier of 960 bits. MVSL used cards in both modes but, as so much survey information is in the form of Boolean or multiple choice questions, it was the binary form which was most employed. A single questionnaire was transcribed onto one or more punched cards.
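The figure of 960 is simply 80 columns times 12 rows. As a small illustration, the Python sketch below treats a card as a 960-bit integer, with rows numbered -2, -1, 0 to 9 as in the MVSL convention described earlier. The ordering of bits within the integer and the function names are assumptions made for this example, not the layout MVSL used internally.

```python
COLUMNS, ROWS = 80, 12   # 80 x 12 = 960 hole positions per card

def bit_index(column, row):
    """Map a 1-based column and a row in -2..9 to a bit position 0..959."""
    if not (1 <= column <= COLUMNS and -2 <= row <= 9):
        raise ValueError("no such hole position")
    return (column - 1) * ROWS + (row + 2)

def punch(card_bits, column, row):
    """Return the card with one more hole punched."""
    return card_bits | (1 << bit_index(column, row))

def is_punched(card_bits, column, row):
    return bool((card_bits >> bit_index(column, row)) & 1)

card = punch(0, 12, 3)        # blank card, then column 12 row 3
card = punch(card, 14, -1)    # one of the two top rows in column 14
print(is_punched(card, 12, 3), is_punched(card, 12, 4))
```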

The input language defined a procedure for reading all the data for a single respondent. For the most part that was a matter of naming the individual data elements and mapping them onto locations on the input card(s). However as we have seen, MVSL allowed data not present on the input cards to be derived from data which was. And just as some questions on a questionnaire might be omitted, so the program might omit the reading of some data elements using the usual repertoire of imperative statements found in conventional programming languages.

The input language was (eventually) compiled to object code by its compiler which also output the dictionary to a file. The object code was run reading all the cards and writing the resulting data as fixed-length, tightly-packed records to a file of accepted data.

Any rejected questionnaires would be reported upon and the user had the option of correcting and recycling the data, adding to the accepted data file.

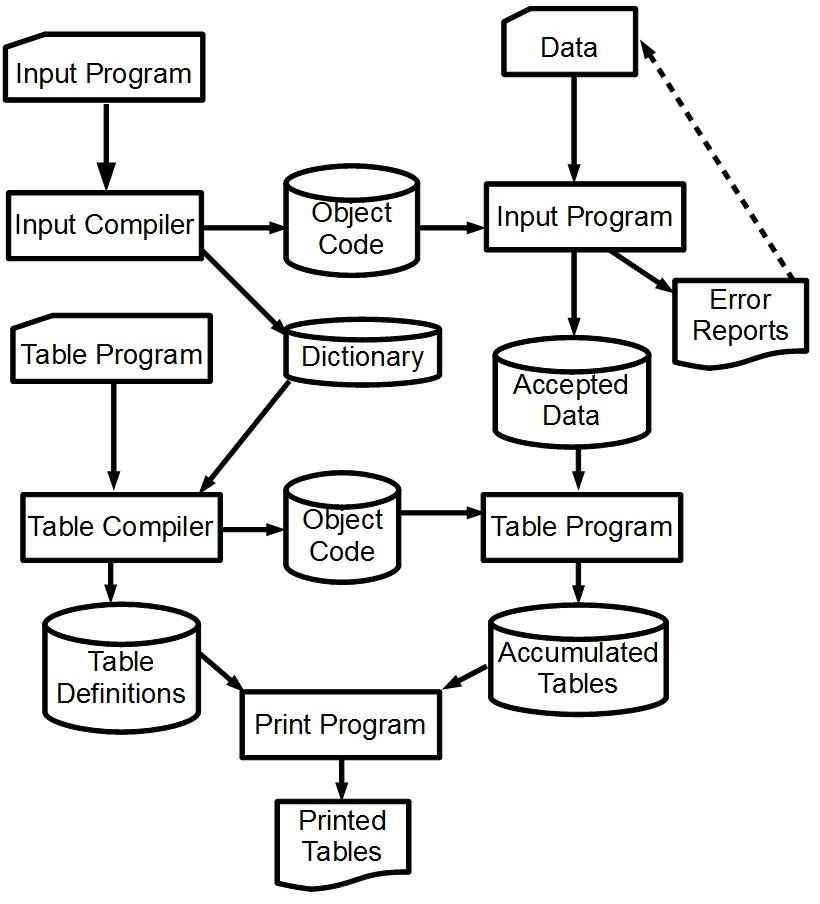


The table compiler needed not only a set of user-written table specifications, but also the dictionary saved from the input compilation. Information about table definitions was saved to a file and object code produced. The latter was run, reading the accepted data file case by case and adding values (usually one) to elements of an initially zeroised area set aside for the accumulation of table data. At the end of the run, the table data was output to a file. Finally the print program married the tables and the table definition file and created the printed tables.

Alternatively both object codes could be run together, in which case the accepted data file was omitted and data recycling could not take place.

Despite our best efforts, London University Computing Services (as ACS had become) failed to prosper. The days of easy money being made in the bureau business had passed. In late 1974 we were in deep trouble. With huge regret I decided that I couldn’t afford the insecurity and left for pastures new. Shortly afterwards the company fell into the hands of its creditors and thence through various ownerships. I’m given to understand that MVSL carried on earning money for a few years. But it was locked into punched card technology in a world which increasingly wasn’t. I assume it really did die at last. Perhaps it’s still out there somewhere in some twilight world of software. If not, I still have the manual, all the source listings, a source tape and a pack of cards. You have been warned!

Editor’s note: Dik Leatherdale was an early computer science undergraduate at both Manchester and Essex Universities before setting out on the adventure here described. 20 years as a licensed troublemaker in ICL followed. In 2008 he took on the position of Hon. Editor of Resurrection. This article is, therefore, the work of a single hand. The author is unable to blame the editor for its many shortcomings. Nor indeed, vice-versa. Both can be contacted at dik@leatherdale.net.


The book review in Resurrection 51 for the historical monograph on Computing in Canada mentions a section on Ferranti Canada and the FP 6000 computer. Although the FP 6000 is covered in only two pages, more is available elsewhere.

The FP 6000 is significant for UK computer history because it became the basis for the successful ICT 1900 range. It was developed by a small team in Toronto and took advantage of the knowledge and technology in the UK parent company.

The hardware used circuit design and technology developed at Wythenshawe for the Argus computer. The FP 6000 had a multiprogramming executive which was an advanced feature for a medium sized computer at that time and was similar to what had been developed earlier in London for the larger Ferranti ORION computer. For more see the Wikipedia entry for Ferranti-Packard 6000.

For a comprehensive history of computer development by Ferranti in Canada there is an article in the IEEE Annals of the History of Computing (Vol. 16, No. 2, 1994) by John Vardalas titled “From DATAR to the FP 6000”. This can be found from the Wikipedia entry above.

Ferranti gave its Canadian subsidiary considerable freedom and support, as well as a culture of engineering innovation. John Wilson sets this out from a UK perspective in his book “Ferranti: a History - Building a Family Business 1882-1975” (ISBN 1-85936-080-7).

The FP 6000 development leapfrogged UK developments on medium size commercial computers. The FP 6000 gave a proven system design which was extended into a fully compatible range of small and large computer products by further product development by ICT using teams at Manchester, Stevenage and Putney. These teams had different backgrounds - Ferranti, ICT, EMI, and it is unlikely that they would have come up with such a range of compatible systems without the FP 6000 as a starting point. Two FP 6000 machines were shipped to ICT in the UK, one for the hardware and executive development at Manchester, and one for software development at Putney.

The FP 6000 enabled ICT to quickly introduce and deliver its new 1900 range of UK manufactured computers. The 1900 range was very successful and was the mainstay of ICT’s business in the late 1960s.


John Aris was director of the National Computing Centre (NCC) from 1985 to 1990, where he championed the importance and the skills of computer users as distinct from computer manufacturers.

In 1958 he joined LEO Computers, which had built and put to productive use the world’s first business computer.

After a few years of programming, he moved into systems design and the uneasy borderland between business computing and business proper. When a series of mergers brought about the formation of ICL (Aris had by then worked for five British computer companies without changing jobs) he had the unusual experience of explaining to the board of the new company what, in the eyes of its customers, its products were for.

He left ICL in 1975 to join Imperial Group as head of computer development. Computing was then still thought of as a suppliers’ world, with expertise primarily in the hands of IBM and the seven dwarfs. However, on moving from a supplier to a user company, Aris discovered that what he had thought he knew about users was not wrong, but was seriously incomplete.

As a result, he became devoted to developing and promulgating the experience and knowledge of users, both within Imperial and more widely. In the process, he recognised early, in the late 1970s, that the future of business computing lay more with smaller, cheaper machines than with mainframes, and with widely available pre-programmed applications rather than custom-built software. He was one of the first computing managers in the world to oust a mainframe in favour of end-user-managed minis and to encourage the advent of personal computers.

In 1985 he was invited to become the NCC’s full-time director and CEO.

John Aris was one of a talented group headed by David Caminer, the extraordinary Lyons manager who created business systems engineering from scratch. Together they wrote a book on the LEO (Lyons Electronic Office) story, which ran to American and Chinese editions. In 2001 the group organised a two-day celebration in the London Guildhall to mark the 50th anniversary of the world’s first business computer system.

He retained his interest in LEO to the end as an active trustee of the LEO Foundation. In CCS we knew him as an erudite contributor to many meetings whose input was often sought and always valued.


| 20 Oct 2010 | Tales of the Unexpected: an alternative history of computing | John Naughton & Bill Thomson |

| 18 Nov 2010 | Konrad Zuse and the Origins of the Computer | Horst Zuse |

| 16 Dec 2010 | Historic Computer Films (note the venue is the DANA Centre, see below) | Various |

| 20 Jan 2011 | Cognitive Fluidity and the Web | George Mallen |

| 17 Mar 2011 | Blacknest | Alan Douglas & John Young |

London meetings take place in the Director’s Suite of the Science Museum, starting at 14:30. The Director’s Suite entrance is in Exhibition Road, next to the exit from the tunnel from South Kensington Station, on the left as you come up the steps. The Science Museum’s DANA Centre is in Queen’s Gate on the corner of Imperial College Road.

Some of the 2011 events have not been finalised. Please reserve the following Thursdays in your diaries: 17 Feb, 21 Apr and 19 May.

Queries about London meetings should be addressed to Roger Johnson at r.johnson@bcs.org.uk, or by post to Roger at Birkbeck College, Malet Street, London WC1E 7HX.

| 26 Oct 2010 | Ferranti Argus: Bloodhound on my Trail: Open Innovation in a Closed World (18:30 - 21:00) | Jonathan Aylen |

| 16 Nov 2010 (note corrected date) | The LEO Story | Gordon Foulger |

| 18 Jan 2011 | The Harwell Dekatron Computer | Kevin Murrell & Tony Frazer |

| 15 Feb 2011 | Is it 0 or 1? A Look at Past and Future Methods for Physical Data Storage | Steve Hill |

| 15 Mar 2011 | The Evolution of Radar Systems, Computers and Software | Frank Barker |

North West Group meetings take place in the Conference Room at MOSI - the Museum of Science and Industry in Manchester - usually starting at 17:30; tea is served from 17:00. Queries about Manchester meetings should go to Gordon Adshead at gordon@adshead.com.

Details are subject to change. Members wishing to attend any meeting are advised to check the events page on the Society website at www.computerconservationsociety.org for final details which will be published in advance of each event. Details will also be published on the BCS website (in the BCS events calendar) and in the Events Diary columns of Computing and Computer Weekly.

MOSI: Demonstrations of the replica Small-Scale Experimental Machine at the Museum of Science and Industry in Manchester are expected to resume from mid-December 2010 (but check MOSI’s website).

Bletchley Park: daily. Guided tours and exhibitions, price £10.00, or £8.00 for concessions (children under 12, free). Exhibition of wartime code-breaking equipment and procedures, including the replica Bombe and replica Colossus, plus tours of the wartime buildings. Go to www.bletchleypark.org.uk to check details of times and special events.

The National Museum of Computing: Thursdays and Saturdays from 13:00. Entry to the Museum is included in the admission price for Bletchley Park. The Museum covers the development of computing from the wartime Colossus computer to the present day and from ICL mainframes to hand-held computers. See www.tnmoc.org for more details.

Science Museum: Pegasus “in steam” days have been suspended for the time being. Please refer to the Society website for updates.

North West Group contact details: Chairman Tom Hinchliffe, Tel: 01663 765040.


| Chairman Dr David Hartley FBCS CEng | david.hartley@clare.cam.ac.uk |

| Secretary Kevin Murrell | kevin.murrell@tnmoc.org |

| Treasurer Dan Hayton | daniel@newcomen.demon.co.uk |

| Chairman, North West Group Tom Hinchliffe | tom.h@dsl.pipex.com |

| Secretary, North West Group Gordon Adshead | gordon@adshead.com |

| Editor, Resurrection Dik Leatherdale MBCS | dik@leatherdale.net |

| Web Site Editor Alan Thomson | alan.thomson@bcs.org |

| Archivist Hamish Carmichael FBCS | hamishc@globalnet.co.uk |

| Meetings Secretary Dr Roger Johnson FBCS | r.johnson@bcs.org.uk |

| Digital Archivist Professor Simon Lavington FBCS FIEE CEng | lavis@essex.ac.uk |

Museum Representatives | |

| Science Museum Dr Tilly Blyth | tilly.blyth@nmsi.ac.uk |

| MOSI Catherine Rushmore | c.rushmore@mosi.org.uk |

| Codes and Ciphers Heritage Trust Pete Chilvers | pete@pchilvers.plus.com |

Project Leaders | |

| Colossus Tony Sale Hon FBCS | tsale@qufaro.demon.co.uk |

| Elliott 401 & SSEM Chris Burton CEng FIEE FBCS | cpb@envex.demon.co.uk |

| Bombe John Harper Hon FBCS CEng MIEE | bombe@jharper.demon.co.uk |

| Elliott 803 Peter Onion | peter.onion@btinternet.com |

| Ferranti Pegasus Len Hewitt MBCS | leonard.hewitt@ntlworld.com |

| Software Conservation Dr Dave Holdsworth CEng Hon FBCS | ecldh@leeds.ac.uk |

| ICT 1301 Rod Brown | sayhi-torod@shedlandz.co.uk |

| Harwell Dekatron Computer Tony Frazer | tony.frazer@tnmoc.org |

| Our Computer Heritage Simon Lavington FBCS FIEE CEng | lavis@essex.ac.uk |

| DEC Kevin Murrell | kevin.murrell@tnmoc.org |

| Differential Analyser Dr Charles Lindsey | chl@clerew.man.ac.uk |

Others | |

| Professor Martin Campbell-Kelly | m.campbell-kelly@warwick.ac.uk |

| Peter Holland | p.holland@talktalk.net |

| Dr Doron Swade CEng FBCS MBE | doron.swade@blueyonder.co.uk |

Readers who have general queries to put to the Society should address them to the Secretary: contact details are given elsewhere. Members who move house should notify Kevin Murrell of their new address to ensure that they continue to receive copies of Resurrection. Those who are also members of the BCS should note that the CCS membership is different from the BCS list and is therefore maintained separately.


The Computer Conservation Society (CCS) is a co-operative venture between the British Computer Society, the Science Museum of London and the Museum of Science and Industry (MOSI) in Manchester.

The CCS was constituted in September 1989 as a Specialist Group of the British Computer Society (BCS). It is thus covered by the Royal Charter and charitable status of the BCS.

The aims of the CCS are to

Membership is open to anyone interested in computer conservation and the history of computing.

The CCS is funded and supported by voluntary subscriptions from members, a grant from the BCS, fees from corporate membership, donations, and by the free use of the facilities of both museums. Some charges may be made for publications and attendance at seminars and conferences.

There are a number of active Projects on specific computer restorations and early computer technologies and software. Younger people are especially encouraged to take part in order to achieve skills transfer.
