

Computer

RESURRECTION

The Bulletin of the Computer Conservation Society

ISSN 0958-7403

Number 62

Summer 2013

| Society Activity | |

| News Round-Up | |

| Designing and Building Atlas | Dai Edwards |

| The Compiler Compiler - Reflections of a User 50 Years On | George Coulouris |

| Adapting & Innovating: The Development of IT Law | Rachel Burnett |

| 40 Years Ago .... From the Pages of Computer Weekly | Brian Aldous |

| What Did that Box Do? | Dave Wade |

| Forthcoming Events | |

| Committee of the Society | |

| Aims and Objectives |

ICT 1301 - Rod Brown

As anticipated, “Flossie” our ICT 1301 became homeless around the turn of the year. That’s the bad news. The good news is that she has found (or at least been promised) a new home at TNMoC.

The 1301 project volunteers managed to save, dismantle, prepare and pack the machine prior to shipment away from the farm into storage on the 25th February, beating the deadline of losing the machine forever on the 29th.

The volunteer team achieved this in the face of the weather and incredibly adverse conditions, but despite those conditions are already voting to join in the assembly of the machine when it has a secured place at TNMoC.

The Project has therefore now entered its “winter” rest period, which will last for an indeterminate time. However the 50th year of the system has sparked interest in various facets of the system and in its emulators.

So work continues to write software to decode the captured images of the magnetic tapes taken to date and further to develop hardware to capture the rest of the magnetic tape images from an ex-LEO ½” magnetic tape transport. This was donated back in the 1980s and was rediscovered in a dusty corner at the 1301 project when we tidied up before the open day in 2012.

This is also a good time to review the project’s current supporter base and those who have collected around the supporters’ website. So whatever happens now, the website for the project will remain and the published detail will continue to be added to.

On a happier note, the shutdown event in December resulted in a request to provide a tour within the Science Museum during March to corporate clients of the museum, a company called R-cubed. This event went well with a donation to the Society promised from a satisfied client.

We have received a request for a presentation on the 1301 including new information about the early London University Senate House team and the work to deliver the early GCE marking system.

As always, please pop back and check at ict1301.co.uk and the CCS site to see coverage of the above rescue event and the latest news on the software recovery and our soon to be working LEO deck.

EDSAC Replica - Andrew Herbert

The EDSAC Project team is now deeply engaged in constructing prototype chassis for several areas of the machine, working to circuit design principles and component layout principles established in our earlier investigations and documented by Chris Burton as project “Hardware Notes”. CCS members interested in having access to them are invited to contact the project manager.

A progress meeting of volunteers was held at TNMoC on Monday 18th March 2013.

Uniselector Test Rig

Five “chassis-in-progress” were displayed: clock pulse generator, digit pulse generator, final tank decoder, tank decoder logic and storage regeneration units. (Thanks to Chris Burton, John Pratt, Peter Lawrence and John Sanderson).

Nigel Bennee’s design for the half adder is almost complete and construction of a prototype will begin shortly.

Peter Linington has demonstrated a short delay line (using nickel wire rather than mercury) with an observed error rate of 1 in 10^11 (about 1 bit per day). An experimental rig for a half size long delay line is in development.

Andrew Brown has a test rig working to investigate how to download the initial orders from a bank of uniselectors.

A first rack has been produced by Teversham Engineering and erected in Andrew Herbert’s workshop.

Thanks to help from Mike Barker of the British Vintage Wireless Society production problems with lumped delay lines have been overcome. Mike and his BVWS members have been helping Alan Clarke source modern suppliers of vintage appearance passive components (e.g., axial capacitors).

Finally another EDSAC video is in production, this time by Google as part of their series on computer pioneers.

On the funding side of things the project has now spent about 25% of the estimated budget, broadly on track. The Trustees will need to begin fund raising to secure the second half of the budget from the end of this calendar year.

ICL 2966 - Delwyn Holroyd

We’ve suffered power outages on two recent Saturdays at the museum. In both cases one phase was lost at the substation due to blowing the 100A fuse. Following the discovery that our recently installed electricity meter has current measuring capability, we have found that a power survey we undertook last year with clamp meters somewhat underestimated the true load and so we were closer to the limit than we had thought. We’ve been able to re-balance the load somewhat by moving 2966 cabinets between phases - the OCP alone draws 25A and that doesn’t include the Store and DCU cabinets! Even so, on a typical Saturday with most exhibits powered on we’re right at the limit on all three phases. This is something we need to address as more working machines come online, not to mention the power requirements of the EDSAC replica still to come.

Unfortunately the unexpected reliability improvement mentioned in the previous report following a board replacement has not lasted. However evidence seems to be mounting that the trouble may be heat related - certainly operation with the OCP cabinet door open is more reliable than with it closed. A pattern in the “random” crashes is slowly beginning to emerge, which with further analysis may give a hint where to start looking for the problem.

Meanwhile work has continued on the card reader, and it is now able to feed cards using the runout control, which actually exercises most of the mechanism control board logic - it would not continue to feed cards unless appropriate light/dark signals from the photocells were being received at the right time. The next step is to hook it up to a PC via the interface box which I’ve already proven with the lineprinter.

Bombe - John Harper

Shortly we will be carrying out our third GCHQ challenge. This time it is with staff at the “Big Bang” event at the ExCeL Arena.

Shortly we will be having our first planning meeting concerning the relocation of the Bombe to the large accommodation in Hut 11a.

I have now started transferring our Bombe Rebuild website to a better host. It is five years out of date in some areas and needs a major overhaul. Updates to more recent HTML standards have added to the overall effort. I am approaching the half way mark but having started with around 175 pages and 350 images there is still a long way to go.

Harwell Dekatron - Delwyn Holroyd

The machine is now often in operation seven days a week and is being actively used by Chris Monk as part of the TNMoC Learning program. It is noticeable that many visitors to the museum have now heard of the machine prior to arrival and seek it out. It’s proving to be a very popular exhibit with the public.

A fault developed several weeks ago that was causing the machine to either stop or get stuck during an arithmetic operation. This was traced to there being very little margin left between pulses and noise in the circuit that triggers the pulse generator to begin an operation, which had made it impossible to adjust to a position where it would work reliably. The characteristics of the trigger tubes seem to vary quite widely depending on temperature and recent triggering, probably more so than would have been the case when new, which was further compounding the problem.

We’ve never been all that happy with the circuit in question and, following a day of investigation and measurement we decided to replace several leaky diodes and change the ratio of a voltage divider to boost the input pulses to the circuit. These changes have had a dramatic effect on operating margin for the circuit and consequent reliability - now two full weeks of problem free operation with no further adjustments being required.

The “whole store” spinning mod I referred to in the last report has now been completed and is helping to keep the Dekatrons in good condition.

TNMoC - David Hartley

A decision has been taken to substantially expand the times when the main TNMoC galleries are open. Recognising that much greater value is obtained from pre-booked tours, and the majority of free-format visitors are concentrated at weekends, the plan is to operate in this manner. This will enable us to adopt a positive “open every day” style. No detail as yet, but we hope to be able to announce in due course.

The Learning Programme, providing educational visits and tours for schools, colleges and universities has completed its first year. With an initial target of 2,000 students, the project has attracted over 3,300 students from over 140 educational institutions in 12 months.

Hartree Differential Analyser - Charles Lindsey

A box of pieces that I removed from the machine during my survey conducted in 1994 has now been found in one of the more obscure storage rooms in the Museum.

So we now have the missing clutch (which still needs investigation of the rough ball bearing which was the cause of its removal from the machine in 1994), plus an adding unit (which I have now cleaned and regreased, but which cannot be installed for lack of gears etc. to connect it) and a “frontlash” unit which we have now installed and which has enabled our circle demonstrations to join up correctly (almost).

The latest problem is a half-nut which is jumping over the threads on its leadscrew in the plotting table. This will necessitate a fairly major dismantling operation to gain access to it.

But it is probably more important to press ahead with installing the speed control for the main motor for which an inverter is being obtained.

Chris Burton

Resurrection - Dik Leatherdale

Ever since the online version of Resurrection first became available on the Web, Chris Burton has been quietly toiling away creating beautiful, hand-crafted HTML of each edition instead of using the usual packages which notoriously make such a pig’s ear of the job. With his increasing workload, he’s reluctantly handed over the job to your editor. At the March CCS committee meeting a small presentation was made to thank him for his dedication and hard work. Thank you Chris.

But now please welcome Brian Aldous who has bravely agreed to contribute a regular column of news items culled from the pages of 40-year-old editions of Computer Weekly. TNMoC holds the Computer Weekly archive which is being digitised bit by bit (or should that be byte by byte?).

IBM Hursley Museum

On Friday 27th September our new friends at the IBM Hursley Museum have generously volunteered to host the first CCS meeting of the autumn season at IBM’s “stately home”, Hursley House near Winchester in Hampshire. The event promises to be something rather special. As we go to press, details are still being finalised, but there will be two lectures - 100 Years of IBM and IBM Hursley. In addition there are to be guided tours of Hursley House and Park and the icing on the cake will be a visit to the museum. Attendance is limited to 50 souls, or rather, 49 as your editor will certainly be at the head of the queue. First come, first served.

We expect the event to run from 10:30 to 16:00. Lunch is included and, as a consequence, there will be a modest charge.

Because Hursley House is a working site, CCS has to provide IBM with a list of attendees before the event so we are organising a booking system hosted on the BCS website. With luck, by the time you read this, everything will be properly organised. As soon as we can, we’ll post full details on the CCS website at computerconservationsociety.org. An opportunity not to be missed!


Once more to the National Museum of Computing in February to attend a lecture given by Brian Randell in the Colossus room. The subject? You might expect The Work of Bletchley Park During WW2 but not quite.

In 2013, we know a great deal about Bletchley Park. In the late 1960s, it was still veiled in deepest obscurity to an extent which is now difficult to comprehend. Brian told the story, not of the Bombe and Colossus as such but the story of how they were rediscovered in the 1970s. A simple question “What did Alan Turing do between 1939 and 1945?” led Professor Randell down a dimly lit path and allowed him to shine a light which, bit by bit, revealed the story with which we are now all so familiar. A magnificent tale, elegantly told, involving an unlikely cast of characters - Tommy Flowers, Donald Michie, Max Newman, Edward Heath, the Duke of Edinburgh, MI5, the Cabinet Office, Konrad Zuse, John Mauchly and many others.

The lecture is available at tinyurl.com/discoveringBP. Emphatically worth an hour of your time even if you have only a passing interest in the work of Bletchley Park.

-101010101-

John Barrett writes all the way from Sydney to tell us that he has moved the contents of his website dedicated to the English Electric Deuce and its successors to a new home at Staffordshire University’s Computing Futures Museum. A useful resource at (dead hyperlink - http://www.fcet.staffs.ac.uk/jdw1/sucfm/eedeuce.htm).

-101010101-

In 2008, the first computer to exceed one petaflop (1,000,000,000,000,000 floating point operations per second) was launched. Roadrunner was built by IBM and installed at Los Alamos at a cost of $100,000,000. It consumed an outrageous 2.35MW of power.

So why are we telling you this? At the end of March 2013 it was decommissioned, thus becoming history. Replaced by a machine only two years its junior. en.wikipedia.org/wiki/IBM_Roadrunner for more details. How are the mighty fallen!

-101010101-

Following on from Simon Lavington’s article on the politics of Atlas development in Resurrection 61, we consider here the technical challenges in the design of such an advanced computer and consider how they were overcome.

The Manchester Atlas operated from its inauguration day, 7th December 1962, to 30th September 1971 when it was closed down.

Introduction

The two prime movers in establishing the Atlas project were first, Sebastian de Ferranti, who supplied the money and took the risk in line with the Ferranti policy of being “First into the Future”. A Ferranti leaflet dated November 1961 listed Ferranti products and this statement was printed on its front page. It certainly applied to computing and they had a good record in this respect for a number of other products dating from their start up as a company in 1882. The other was Professor Tom Kilburn who spearheaded the design and implementation. His belief in the project and his determination to see it through to a successful conclusion against considerable odds was remarkable. The decision to start the project was taken in late 1958 but the concepts originated from ideas and work already being undertaken by a small team at the University under Kilburn’s direction and identified then as “Muse”. Atlas started to run a computing service from the beginning of January 1963. There is little doubt that without the Ferranti collaboration Atlas would not have been built. Some senior people at the time commented that the whole of the UK would only need one Atlas, therefore there was no need to design and build it and the idea of buying American could be financially more attractive.

From the current situation in digital techniques with small mobile smart telephones and very capable tablets and laptop computers it is very difficult to appreciate the technical position as it was 50 years ago! Then there was a scarcity of storage, the equipment was physically large, constructional problems were difficult involving a lot of work by hand and there was only a limited interest because of cost and the difficulties of providing a satisfactory architecture with adequate performance.

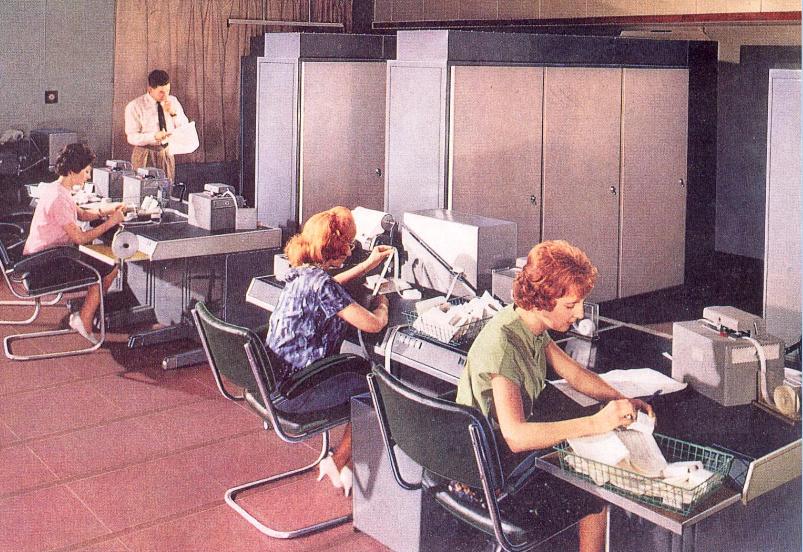


The physical size of Atlas is immediately obvious from the photograph here which shows part of the installation at the University. In the centre background two large cabinets can be seen and there were three more in this room. Additional cabinets for the magnetic drum memory, the power supply switching system and eight magnetic tape decks occupied an adjacent room. Yet another room housed peripheral equipment. The large physical size made it difficult to consider the use of a clock so the timing was implemented on an asynchronous basis. This too had problems, in particular selecting an event to activate when other requests can actually occur during the period used to determine the actual selection thus causing smaller pulse widths which may not be adequate to set flip-flops properly for example. This issue was dealt with by designing the mean time between failures for this situation to be the order of several months. The problem is described in more detail and in modern terms by David Kinniment 1. Note also the three computer operators (replacing users) seated at desks with their own peripheral equipment linked into the machine. Atlas was able to multi-task in contrast to previous computers where one user only was in charge of the computer for a specified period. This meant that users now handed their problems over to computer service operators so that the users were no longer there when their problems ran. The job scheduling was determined by Atlas to balance its workload and minimise hold ups rather than a job being carried out at a specific time, though there may have been occasions when it was a requirement for users to be present.


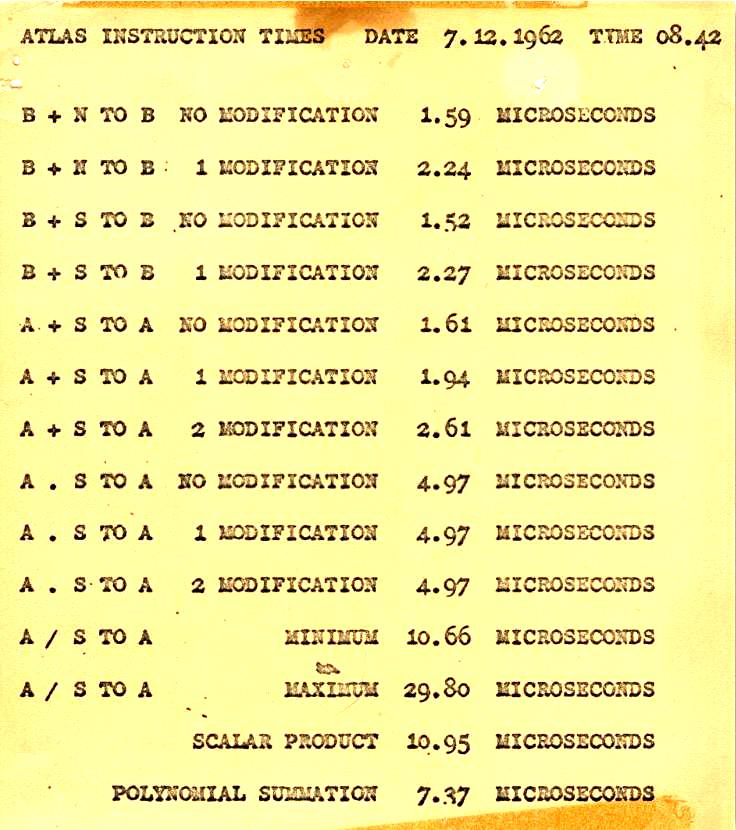

On the left we list the instruction times for a variety of different types of instruction. It is a copy of those printed out at 08:42 on the morning of inauguration day. The first four instructions are those associated with the B-arithmetic unit which operated on half-words (24 bits) and worked in a fixed point way. As in earlier machines an important function of the B-unit was also to provide the option of modifying any instruction address as that instruction is obeyed. There were 128 B-registers some of which had special organisational functions. The other eight instructions worked with the A-arithmetic unit which was a full-word unit with a double word length accumulator register to provide floating point arithmetic including multiplication and division facilities. As each of these units was distinct it was possible to obey B-instructions during the time that the A-unit was still obeying longer instructions such as multiplication or division. This process is termed overlap and in essence, since it also involves extracting instructions to obey before the previous instruction is complete, it is really the start of pipelining but that technique was not pursued very far in Atlas.

Features

The aim of the Atlas design was to provide a step function increase in throughput for user jobs, for example equivalent to 60 to 70 Mercury computers. Note that a single Mercury provided the University of Manchester computing service from February 1958 to January 1963 and was also used by others. The full potential of Atlas took about a year to achieve since for example, the peripheral equipment only arrived during a period of three months after the inauguration. Also there was the Supervisor software to develop fully from its initial state and there were language compilers to provide. Atlas was also running a computing service based on Mercury Autocode to simplify the transfer of users’ earlier work to the Atlas computer.

To get the magnitude of performance required the computer had to be completely parallel in operation in contrast to previous machines designed at Manchester which were mainly serial in operation. This meant a significant increase in equipment and therefore cost too. With Mercury costing say £150K and Atlas £3,000K this was a 20 times increase in cost. Note however that Atlas provided a factor of 60 times improvement in throughput so that cost per smaller job done is reduced by a factor approaching three and of course Atlas made feasible the execution of much larger jobs in an acceptable time.
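The cost argument above can be checked with a few lines of arithmetic. This is a minimal sketch using only the approximate figures quoted in the text; the variable names are illustrative.

```python
# Approximate figures quoted in the text (in thousands of pounds).
mercury_cost_k = 150
atlas_cost_k = 3000
throughput_ratio = 60  # Atlas throughput relative to a single Mercury

# How many times more expensive Atlas was than Mercury.
cost_ratio = atlas_cost_k / mercury_cost_k

# Reduction in cost per job: throughput gain divided by cost increase.
cost_per_job_reduction = throughput_ratio / cost_ratio

print(f"Cost ratio: {cost_ratio:.0f} times")
print(f"Cost per job reduced by a factor of about {cost_per_job_reduction:.0f}")
```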

Multi-tasking also helped to improve job throughput and this is explained simply as follows. On Mercury a user would book a period of use, first spend some time inputting data, followed by computing time and then a time for getting the requisite output. In practice these three time slots, of input, compute, then output would differ from problem to problem but since the peripheral equipments were relatively slow I am going to assume all three times were identical for simplicity. The processor time the computer needed to either control the acceptance of data from or provide data to the peripherals was quite small so that it was mainly idle during the input and output time slot times which were defined by the amount of data involved and the peripheral speed of operation. If during the computation time the computer could be arranged to input the data for the next job to be run and also output the data for the previous job run then the computation time for that user would increase slightly but now computation could be carried out for most of the time available and thus throughput improved. Externally it appeared that the tasks of input, computation and output were all being carried out in parallel but the computer was essentially time sharing its operation for the initiation and control of these activities in sequence.
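The throughput argument above can be illustrated with a small model. This is a sketch only: it assumes, as the text does for simplicity, that input, computation and output take the same time, it ignores the small processor overhead of controlling the peripherals, and the function names are hypothetical.

```python
def serial_time(jobs, t_in, t_compute, t_out):
    """Mercury-style use: each job does input, compute and output in turn."""
    return jobs * (t_in + t_compute + t_out)


def overlapped_time(jobs, t_in, t_compute, t_out):
    """Overlapped use: while one job computes, the next job is being input
    and the previous job is being output. Jobs advance in lock-step slots,
    each as long as the slowest of the three activities."""
    slot = max(t_in, t_compute, t_out)
    # The first job needs two extra slots to fill and drain the sequence.
    return (jobs + 2) * slot


jobs = 100
serial = serial_time(jobs, 1, 1, 1)          # 300 time units
overlapped = overlapped_time(jobs, 1, 1, 1)  # 102 time units
print(f"Throughput gain: {serial / overlapped:.2f} times")
```

With equal time slots the gain approaches a factor of three as the number of jobs grows, which is the improvement available from overlapping alone.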

Ideally in a computer one would like the main memory to be large and provide random access but certainly 50 years ago this was not technically feasible and systems with the best performance were small and expensive. Here it was intended to use ferrite cores for the two microsecond cycle RAM and partially switch the cores for the faster B-store but considerable work was needed to provide this capability. In previous machines such as the Mk1 and Mercury there was a compromise with the available RAM being backed up by a slower access time magnetic drum of larger capacity which was cheaper to provide. The difficulty for the user was that they had to arrange transfers of information between the two levels of storage in such a way that a significant amount of time was not wasted. In Atlas such transfers were arranged efficiently by the computer with the provision of extra hardware and software providing autonomous block/page transfers of 512 words via DMA. To create some space in the RAM a suitable block had to be transferred back to the drum, i.e. the one least likely to be needed for some time. Statistics about the use of all the blocks in the RAM had to be maintained to enable the computer to make this selection and this was done via a piece of software which was called the “learning program”. This system arrangement at the time was known as One Level Storage and essentially it converted user addresses within a large range to be available from a real physical address in the RAM. It also enabled protection to be provided which prevented one program interfering with another. This was the start of the Virtual Memory concept now available in modern computers.
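The idea of translating a user address into a physical RAM address, with blocks moved to and from the drum on demand, can be sketched as follows. This is a modern illustration and not the Atlas mechanism: the class and method names are hypothetical, and a simple least-recently-used rule stands in for the “learning program”, whose actual selection method is not described here. Only the 512-word block size comes from the text.

```python
from collections import OrderedDict

PAGE_WORDS = 512  # block/page size given in the text


class OneLevelStore:
    """Toy model of a small RAM backed by a drum (illustrative only)."""

    def __init__(self, core_frames):
        self.resident = OrderedDict()        # page -> frame, least recent first
        self.free_frames = list(range(core_frames))
        self.drum_transfers = 0

    def translate(self, address):
        """Map a user address to a physical RAM address, loading if needed."""
        page, offset = divmod(address, PAGE_WORDS)
        if page in self.resident:
            self.resident.move_to_end(page)  # record the recent use
        else:
            self._load(page)
        return self.resident[page] * PAGE_WORDS + offset

    def _load(self, page):
        if self.free_frames:
            frame = self.free_frames.pop(0)
        else:
            # Stand-in policy (assumption): evict the least recently used page.
            _victim, frame = self.resident.popitem(last=False)
            self.drum_transfers += 1         # victim written back to the drum
        self.drum_transfers += 1             # wanted page read from the drum
        self.resident[page] = frame


store = OneLevelStore(core_frames=2)
for user_address in (0, 517, 1024, 3):
    print(user_address, "->", store.translate(user_address))
```

The user program sees one large address range; which block happens to be in RAM at any moment is hidden behind `translate`.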

The organisational software for the one level store was provided in a fixed store, i.e. a ROM, which was also linked to a small RAM providing some working space for this software, and the combination was termed the Private Store in Atlas. This private store was not available to normal users and so provided added security to the organisational software. It also contained other software, such as test programs, peripheral drivers, and extensions to the basic instruction set which were called “Extracodes”. Extracodes were essentially subroutines to carry out more complex operations but the entry to the subroutine and the return from it could occur automatically with the help of B-register 121. To facilitate the operation there were two Control registers: the MAIN control provided by B-register 127 for normal instructions and EXTRACODE control in B-register 126 to step through the subroutine instructions for Extracode operation. There was also a third Control register in B-register 125 called INTERRUPT control which was used to help determine the source of any interrupt signal in the machine. Such signals would mainly arise from peripheral equipment requiring attention but also included various error conditions. They were given a priority of response depending on their ability to cope with time delay and in some cases peripheral equipment could be temporarily halted in operation. It was possible to determine which one of up to 512 interrupt signals required attention with the help of a special facility in B-register 123 in relatively few instructions. Switching between these control registers could be done rapidly and Interrupt control had priority of action over the other two but relatively little time was spent there since it only had to identify the source of interrupt and then hand back an action request to deal with it to the other control registers.
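As a loose modern analogy for picking out one pending interrupt among 512 in very few steps, the following sketch finds the lowest-numbered set bit in a bitmask. It does not describe how B-register 123 worked; the function name, the bitmask representation and the choice of line 0 as most urgent are all assumptions made for illustration.

```python
INTERRUPT_LINES = 512  # figure given in the text


def highest_priority_line(pending):
    """Return the lowest-numbered pending line, or None if none is pending.

    `pending` is an integer used as a bitmask: bit n set means line n is
    requesting attention. Treating line 0 as most urgent is an assumption.
    """
    pending &= (1 << INTERRUPT_LINES) - 1  # ignore bits beyond line 511
    if pending == 0:
        return None
    lowest_set_bit = pending & -pending    # isolates the lowest set bit
    return lowest_set_bit.bit_length() - 1


# Lines 7 and 300 are both pending; line 7 is selected first.
print(highest_priority_line((1 << 300) | (1 << 7)))
```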

Hardware

At this time semiconductor technology utilising germanium was replacing thermionic devices with advantages in size and reliability. The search was on to get sufficiently fast, reliable discrete transistors to assemble with other components e.g. diodes, resistors, capacitors etc. to make computer logic gates.

Basically there were two types of transistor. One was called a graded base device. It was relatively cheap to make but to operate quickly it always had to have at least three volts between its emitter and collector even in the on condition. This was the type of transistor used to provide the bulk of the gating transistors in Atlas and was the Mullard OC170.

The other type of transistor was called the surface barrier transistor which could operate quickly both into and out of saturation which is a condition with only very low voltage between emitter and collector e.g. 0.1 volts. These devices were made by Philco in the USA and later by Plessey in the UK. However the manufacturing process was quite involved and as a result these transistors were very expensive. However there was the idea that when switched on with such a small voltage drop across them they might be used to transmit a signal in a similar way to a relay mechanical contact provided that the switching condition remained unaltered during the transmission. In a parallel adder there is a condition when a carry signal might have to be transmitted from the least significant digit to the most significant. If this is 40 stages for example then through a conventional transistor gate the transmission time is 40 gate delays, i.e. quite some time. However there was a known logic design for a relay parallel adder, where certainly there is a long time to switch the relays all of which occur in parallel, but then when the contacts are all set the transmission time of the carry signal is only along the length of wire connecting the relays and through their metallic contacts, i.e. very fast. Could the surface barrier transistor provide the fast switching speed required and also fast carry transmission and could the small change in signal level at each stage be accommodated? The answer was yes and this was the type of fast adder/subtractor designed for use in Atlas and, at the time, was the fastest addition available. The transistors used were the SB240 and SN501, the latter having a higher power rating.
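The worst case described above, a carry travelling from the least significant digit to the most significant, can be shown with a short sketch. It only counts how many stages a carry passes through for a given pair of operands; it makes no claim about actual Atlas circuit timings, and the function name is hypothetical.

```python
def longest_carry_chain(a, b, width=40):
    """Return the largest number of consecutive stages one carry passes
    through when adding a and b in a parallel adder of the given width."""
    longest = chain = 0
    for i in range(width):
        x = (a >> i) & 1
        y = (b >> i) & 1
        if x & y:
            chain = 1          # this stage generates a carry itself
        elif (x ^ y) and chain:
            chain += 1         # this stage passes an incoming carry on
        else:
            chain = 0          # no carry leaves this stage
        longest = max(longest, chain)
    return longest


# Adding 1 to a word of forty 1s sends the carry through every stage.
print(longest_carry_chain((1 << 40) - 1, 1))
```

With conventional gates the delay grows with the chain length, one gate delay per stage; in the relay-style arrangement all the switches are set in parallel and the carry then only has to travel along the chain of closed switches.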

Other interesting circuit contributions were made in Atlas, in particular to driving and receiving signals with 50 ohm coaxial cable where it was possible to provide an output signal of 2.5 volts from a 9 mA drive signal. This was important since there were many bundles of 25 or 50 coaxial cables to transfer signals between separate cabinets and also within cabinets. There was also a peak sense circuit using an electrical delay line which was used to identify the signals received from the magnetic tape and also the magnetic drum systems.

Progress and Problems in Producing Atlas

Professor Lavington in his recent article in Resurrection 61 entitled Atlas in Context has referred to the national interest in developing a high performance computer from early 1957 which arose from the interest of NRDC and the perceived needs of the UKAEA and provides more detail about that situation. However some brief comments follow on the action which took place. A working party was set up and on the 17th March 1958 they visited the Computer Science Department at the University of Manchester to see the independent work there called Muse under the direction of Professor Tom Kilburn. In May they concluded that though the design seemed sufficiently fast they were unhappy with the main storage facilities and the lack of consideration about handling peripheral equipment. They also felt considerably more design effort was needed and expressed concern about the size of the team to do the job even with the help of Ferranti. These comments indicating lack of support were disappointing for the University team but later that year Ferranti decided to develop a computer based on the Muse design and it was then called Atlas. For the Muse team it was fantastic news.


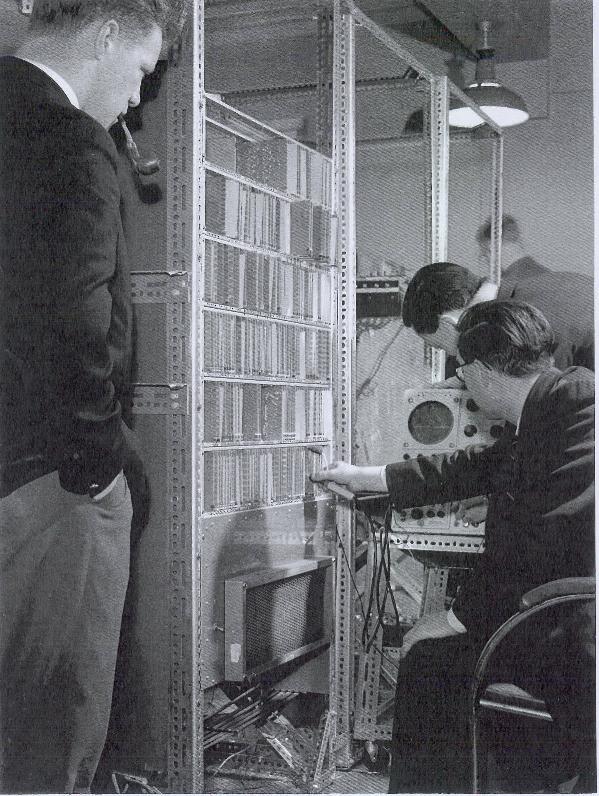

A section of the Pilot Atlas, with boxes attached to a Dexion frame and Gordon Haley, Ianto Warburton and Yao Chen working on the central processor

The first formal progress meeting on Atlas took place at the West Gorton factory on the 9th November 1959 and it was chaired by Peter Hall the Ferranti Computer manager accompanied by senior staff, Gordon Scarrott and Keith Lonsdale. Tom Kilburn and Dai Edwards attended on behalf of the University and these meetings occurred thereafter at monthly intervals on the second Monday every month until the project was complete. Kilburn outlined the work going on for Muse and Lonsdale described activity on peripherals for the Orion computer and what of this work could be common or at least adaptable for use on Atlas. It was agreed that Muse should now be called the Pilot Atlas and that work should continue to develop it including the connection of magnetic drums and magnetic tape. This development activity continued until February 1961 when the Pilot Atlas was moved to the factory for training purposes and this allowed the University computer room to be refurbished in time for the delivery of the first part of the commercial Atlas in June 1961.

Now going back a bit in time, in early 1960 effort on the project was increased by using the Plessey Company to provide the random access storage (RAM) and the B-store, the Lancashire Dynamo company to provide the power supply and power switching facility and Ferranti Edinburgh to explore the provision of suitable magnetic tape decks from external suppliers. These tape decks were to be common to both the Atlas and Orion computers. All this work was leading edge activity, not straightforward production and some members of the Atlas team were closely involved in these activities; Mike Lanigan with storage, Gordon Haley with the power supply, David Aspinall with the magnetic tape and me with involvement in all three. Naturally there were problems with all these activities but all were solved in an acceptable time.

However there was a problem with the design of the magnetic drums and also the magnetic reading/writing heads which were the responsibility of Ferranti at West Gorton. The heads were to be identical for Atlas and Orion drums but the Atlas drum was to be a development on the Orion drum to run at higher speed - about 4750 rpm, and to have read write facilities from/to 24 tracks in parallel. Eric Dunstan of the Atlas team was responsible for the electronic design together with me and there was liaison with West Gorton to discuss the problems experienced which were essentially mechanical in nature but also involved adequate cooling too. Correction of these was a Ferranti responsibility and a number of improvements were made but the drums still did not operate to the order of reliability required, e.g. there were only two drums operational at the inauguration and not the four specified. These original drums were supplied to both the Manchester and London Atlas but were then replaced by Bryant drums to achieve satisfactory performance for both computers by late 1963. With hindsight this change should have been made much earlier.

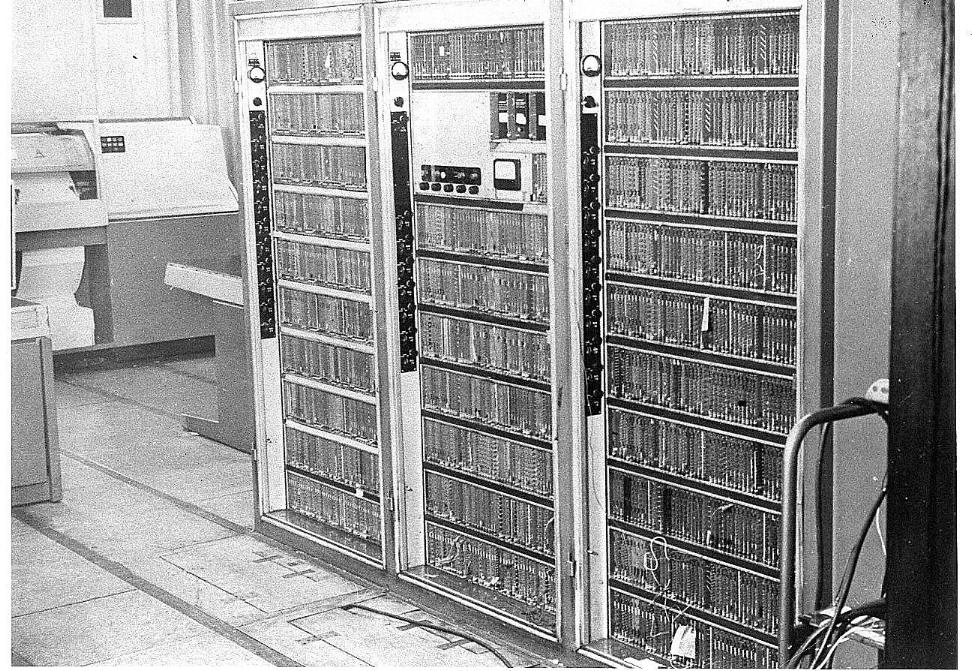

The backs of the racks are visible in the photograph above, showing the interconnection wiring, and it is worthwhile here identifying the scale of the constructional problem. In each rack there were 10 boxes to house printed circuit boards, with a maximum of 48 boards per box, and each board plugged in to a 32 pin socket. Some of these pins, say five, were for power connections and were linked together on the box via bus bars, so they caused few problems. Also not all pins were required, so assume that 25 pins remained to be wired, i.e. 25 times 48 per box equals 1200. With 10 boxes per rack and three racks per bay there were now 36,000 pins to be connected per bay, and Atlas comprised some six bays. All these connections had to be carried out by hand, needed to be done accurately and the joints made properly, so there was a clear need for checking the activity, which was made more difficult since the pin spacing was quite small. The work of connection and checking generally proceeded in successive stages until the job was complete. Unfortunately, all this work had to be repeated for each computer that was made. Compare and contrast this work with the current situation of making a complete computer including memory on a physically small chip, where automation plays a large part and takes place in ultra clean conditions.
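The wiring estimate in the paragraph above can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted in the text; the 25 pins per board is the text's own working assumption, "some six bays" is taken as exactly six, and the variable names are purely illustrative.

```python
# Wiring estimate, using only the figures quoted in the text.
PINS_TO_WIRE_PER_BOARD = 25  # assumed in the text: 32-pin socket less power and unused pins
BOARDS_PER_BOX = 48          # maximum number of boards per box
BOXES_PER_RACK = 10
RACKS_PER_BAY = 3
BAYS = 6                     # "some six bays", taken here as exactly six

pins_per_box = PINS_TO_WIRE_PER_BOARD * BOARDS_PER_BOX
pins_per_bay = pins_per_box * BOXES_PER_RACK * RACKS_PER_BAY
pins_total = pins_per_bay * BAYS

print(pins_per_box)  # 1200
print(pins_per_bay)  # 36000
print(pins_total)    # 216000
```

On these assumptions the whole machine needed of the order of 200,000 hand-made connections, and the same again for every further Atlas built.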


From June 1961 to August 1962 various pieces of Atlas were delivered and commissioned: the random access store was the last. From September 1962 Atlas started to become usable for significant periods of time. Most of the equipment was there for the inauguration in December except for some drums and peripheral equipment, for example the lineprinter which was held up by fog in Idlewild Airport.

After the inauguration various hardware developments occurred - offline data links to Edinburgh and Nottingham Universities and also to Ferranti in London, online data links through privately contracted wires, the provision of a large capacity disc (< 100 Mbytes), a speech converter system and finally an instrument for use by X-ray crystallographers to collect data and then calculate the structure. However the critical effort to make Atlas really usable was the development of the Supervisor, the computer operating system in modern terminology. This took the best part of a full year to achieve its full potential but then the subsequent work done by the Harwell Atlas which was the biggest Atlas was immense. However that is another complete story in itself. 2 3


The University of Manchester Atlas was shut down on the 30th September 1971 having provided a good computing service to the University, some other Universities too and also to Ferranti who utilised 50% of the total time available. This last photograph shows a representative group of people involved in the Atlas development and use, in front of the Atlas console. From the left Bill Talbot, then ICL manager at West Gorton, Gordon Black, user and also new head of the Manchester Regional Computer Centre, Tom Kilburn seated, Gordon Haley, ICL engineer on Atlas, and Ken Midmer who was representing Sebastian de Ferranti on this occasion.

This is a text version of the presentation made by Emeritus Professor Dai Edwards at the 50th Anniversary of the Atlas Computer at the University of Manchester on 5th December 2012. Contact at dbgedwards@sky.com.

1 Synchronization and Arbitration in Digital Systems. D. Kinniment. John Wiley & Sons Ltd 2007

3 Evaluation of Operating Systems. Brinch Hansen 2000. CiteSeerX, pages 7 & 8

The Compiler Compiler - Reflections of a User 50 Years On

The Ferranti Atlas is chiefly remembered today for its many innovations in hardware and operating systems. But it should not be forgotten that it also boasted a rich repertoire of programming languages and compilers - due in large part to the availability of the Brooker-Morris Compiler Compiler. Here we consider one such which, despite seeing little use at the time, was immensely influential in the longer term.

Background

In 1962 I joined the University of London Institute of Computer Science as an enthusiastic young research assistant. My appointment was linked to the impending arrival of the London Atlas and the perceived need by the senior staff, especially Dick Buckingham and Mike Bernal, for a suitable high-level language to support the development of a wider range of applications. Two teams were already working on language designs at the Institute and I chose to join the one working with a larger Cambridge team led by Christopher Strachey on the design of the CPL programming language. A preliminary specification for the language had emerged in the form of several notes and it was suggested that I look into the construction of a compiler. The story of the CPL project and its eventual demise has recently been documented in David Hartley’s excellent retrospective article 4.

I came to the project with some industrial programming experience but absolutely no knowledge of compiler construction. None of my colleagues was better-placed for the task of constructing a CPL compiler, so I scanned the horizon and the Compiler Compiler popped up - a revelation that was to have a major impact on my early professional life. I have a recollection of attending a talk given in London by Tony Brooker at about that time, but cannot say whether that was the source of my first knowledge of the Compiler Compiler (henceforth abbreviated as CC). Publications by Brooker and his colleagues on the CC were yet to appear.

Textbooks on compiler construction - and indeed on most topics in computing - were non-existent at that time. (Don Knuth is reported to have begun to write a book on compiler construction in 1962, only to realise that it would leave too many related important topics undiscussed - so he transformed the project into his epic Art of Computer Programming.) But with the help of Tony Brooker and Derrick Morris’s concise but instructive documentation and especially their examples, I was able to infer most of the relevant principles and get started on a project that was to occupy me for about two years.

The Compiler Compiler was an early but outstanding example of a domain-specific language. At their best, domain-specific languages give users a clear model of the application domain and a framework enabling them to get started with exploring implementation approaches, converging to a fully working implementation.

The construction of a top-down syntax-driven compiler is now well understood, but for me and probably quite a few others in those early years the CC provided the necessary understanding. One of those was Saul Rosen of Purdue University, who later wrote an excellent tutorial survey of the CC 5. That was well after my need to understand and use the compiler so I was unable to benefit from his efforts. But I have used his survey to refresh my memory for the brief summary of selected aspects below.

Some Interesting Aspects of the CC

To build a compiler for a new language, one began by defining the syntax as a set of format class definitions in a notation that would now be recognised as a homologue of the Extended Backus-Naur notation. The notation was very well-conceived; it was easy to learn and apply and the CC included sufficient low-level primitives (called basic formats) to enable such things as numbers, variable names and so on to be efficiently recognised.

An analysis routine built in to the CC would process a source program according to the given class definitions and generate an analysis record denoting the syntax tree for the source program in terms of the syntax supplied. The remaining and more difficult task of the compiler writer was to write a set of format routines that would generate executable code corresponding to the statements recognised in the source program. In the case of our CPL compiler we were aiming to generate Atlas assembly code - the use of byte code virtual machines as compiler targets was some years away.
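The division of labour described above (class definitions, a built-in analysis routine, and user-written format routines) can be illustrated with a minimal modern sketch in Python. This is not CC notation: the grammar, the tuple layout of the analysis record and the instruction mnemonics (`LOAD`, `LOADC`, `PUSH`, `ADDSTACK`, `STORE`) are all invented for illustration and bear no relation to real Atlas assembly code.

```python
import re

# Hypothetical class definitions: each class lists alternative formats;
# a format is a sequence of literal tokens and [CLASS] references.
CLASSES = {
    "STATEMENT": [["[NAME]", "=", "[EXPR]"]],
    "EXPR": [["[TERM]", "+", "[EXPR]"], ["[TERM]"]],
    "TERM": [["[NAME]"], ["[NUMBER]"]],
}

# Stand-ins for "basic formats": low-level recognisers for names and numbers.
BASIC = {"NAME": re.compile(r"[a-z]\w*"), "NUMBER": re.compile(r"\d+")}


def analyse(cls, tokens, pos):
    """Top-down analysis: return (analysis_record, next_pos), or None on failure."""
    if cls in BASIC:
        if pos < len(tokens) and BASIC[cls].fullmatch(tokens[pos]):
            return (cls, tokens[pos]), pos + 1
        return None
    for alt_no, fmt in enumerate(CLASSES[cls]):
        children, p = [], pos
        for item in fmt:
            if item.startswith("["):  # reference to another class
                result = analyse(item[1:-1], tokens, p)
                if result is None:
                    break
                child, p = result
                children.append(child)
            elif p < len(tokens) and tokens[p] == item:  # literal token
                p += 1
            else:
                break
        else:  # every item of this alternative matched
            return (cls, alt_no, children), p
    return None


def generate(record, out):
    """Format routines: walk the analysis record, emitting made-up assembly."""
    cls = record[0]
    if cls == "NAME":
        out.append(f"LOAD {record[1]}")
    elif cls == "NUMBER":
        out.append(f"LOADC {record[1]}")
    elif cls == "TERM":
        generate(record[2][0], out)
    elif cls == "EXPR":
        alt_no, children = record[1], record[2]
        if alt_no == 0:  # TERM + EXPR
            generate(children[1], out)  # right operand into the accumulator
            out.append("PUSH")
            generate(children[0], out)  # left operand into the accumulator
            out.append("ADDSTACK")      # add the pushed value to the accumulator
        else:
            generate(children[0], out)
    elif cls == "STATEMENT":
        generate(record[2][1], out)
        out.append(f"STORE {record[2][0][1]}")
    return out


record, end = analyse("STATEMENT", "x = y + 2".split(), 0)
print(generate(record, []))
```

The point of the sketch is the separation: `analyse` knows nothing about any particular language and is driven entirely by the class definitions, while all language-specific and machine-specific knowledge sits in the format routines.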

The construction of format routines to perform the code generation was a classic system programming task requiring a deep and detailed understanding of the target machine’s architecture and several key data structures for use as symbol tables, memory maps and so on. The most surprising thing about the Compiler Compiler was that Tony Brooker and Derrick Morris had already encountered the requirements in the process of building the CC (and presumably in their earlier efforts to build compilers for other machines), and they had formulated good generalised representations for them.

Thus the notation used for writing format routines was essentially a system programming language of the same class as BCPL, C and so on. The CC was perhaps the first machine-independent language to explicitly include the notions of memory address, memory word, address arithmetic and indirection. Format routines were in fact executable analysis records. When a CC format routine was processed by the analysis routine, the result was an executable tree structure containing references to other routines and markers denoting the parameters remaining to be inserted. To execute a routine, a copy of its analysis record was made in a working area of memory similar to an execution stack, and when values for all of the parameters had been substituted it was executed. This is strongly analogous to modern interpretive language execution mechanisms. But Brooker and Morris were not content to leave all of the routines to be executed in the interpretive mode. They included a mechanism to translate format routines that did not contain parameters into assembly code for direct execution, and this much improved the system's performance.
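The copy-substitute-execute cycle described above can be sketched in a few lines of Python. This is only an analogy under stated assumptions: the `Param` marker, the tuple representation of the tree and the two operations are hypothetical and are not taken from the CC itself.

```python
class Param:
    """Marker for a parameter still to be inserted into a routine's record."""

    def __init__(self, name):
        self.name = name


# A stored routine held as a tree of (operation, operand, operand) nodes.
# Hypothetical example: compute a * b + 1.
ROUTINE = ("add", ("mul", Param("a"), Param("b")), 1)

OPS = {"add": lambda x, y: x + y, "mul": lambda x, y: x * y}


def substitute(node, args):
    """Build a working copy of the tree with parameter markers replaced."""
    if isinstance(node, Param):
        return args[node.name]
    if isinstance(node, tuple):
        return (node[0],) + tuple(substitute(n, args) for n in node[1:])
    return node


def execute(node):
    """Interpret a fully substituted tree."""
    if isinstance(node, tuple):
        return OPS[node[0]](*(execute(n) for n in node[1:]))
    return node


def call(routine, **args):
    working_copy = substitute(routine, args)  # the stored routine is left untouched
    return execute(working_copy)


print(call(ROUTINE, a=3, b=4))  # 13
```

A routine with no `Param` markers needs no substitution step at all, which is roughly why, in the account above, parameterless routines were the natural candidates for translation into directly executable code.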

The CPL1 Compiler

Work on a CPL compiler, dubbed the “London CPL1 Compiler”, began in mid-1963 and a working version was released in the autumn of 1964. Initial debugging of syntax class definitions and an understanding of the format routine language were achieved through several visits to Manchester in the autumn of 1963, where the first Atlas was already available for use, well before the London Atlas had been delivered and commissioned. Advice and tuition were freely and generously given by Derrick Morris - something I found essential to gaining an understanding of the workings of the CC.

The London CPL1 Compiler was restricted in two ways.

Program size was restricted to a couple of hundred lines.

The nested block structure of CPL necessitated the analysis and initial processing of an entire source program before any code could be generated. Two-pass compilation would of course have been possible but would probably have required operator intervention, since we were still in the era of serial input devices. In retrospect, we ought to have bitten that bullet.

It had some semantic restrictions compared to the full CPL language.

String variables and operations on them were implemented, but without the garbage collection that would have made them more effective and useful. Function closures were a pioneering feature of CPL that I fully appreciated only in the closing stages of the project (through informal interactions with Peter Landin at the legendary “Mervyn Pragnell Logic Study Group” that was running contemporaneously at the Institute). I failed to understand them sufficiently well to define an effective implementation.

Despite those restrictions the compiler enabled a small number of users to experience some of the benefits of CPL - a block structured procedural language with more extensive support for non-numeric data, functions and procedures than any other contemporary language. A paper on the London CPL1 compiler project, illustrated with some CC code fragments, was written and published in 1968 6.

Reflections

The Compiler Compiler was remarkable in many ways. I still find it a quite amazing achievement in terms of the innovations that it contained and the effectiveness of its design and implementation. It encompassed innovative contributions at so many levels, from the very concept of a compiler-compiler to the inclusion of a domain-specific language for applications in system programming and the combination of interpretation with code generation.

Its implementation was completed at a time when we had virtually no debugging tools, with only paper tape for inputting programs, no file storage and certainly no capability for interactive debugging or user interaction. What a tour de force!

George Coulouris joined the Institute of Computer Science, London University in 1962. In 1965, on completing the project described in this note, he became a lecturer at Imperial College and in 1971 joined a fledgling Department of Computer Science at Queen Mary College, where he remained until his retirement in 1998. Since retirement George has spent time at the Cambridge University Computer Laboratory working with a research group focussed on ubiquitous and sentient systems. He can be contacted at george@coulouris.net.

4 CPL - failed venture or noble ancestor?, IEEE Annals of the History of Computing

5 A Compiler Building System Developed by Brooker and Morris, Comm ACM Vol. 7, No. 7

6 The London CPL1 Compiler, Computer Journal Volume 11, Issue 1

Adapting & Innovating: The Development of IT Law

Technological change, along with social, cultural and political change, affects the content and application of law, the framework of formal rules for society. Although law has to be predictable and certain, it cannot stay static or immutable. It constantly adapts existing law to new situations or creates new law for new circumstances. For IT, traditional law, such as for contract and intellectual property, is adapted and extended. New law is created in such areas as e-commerce and computer misuse.

In English law this adaptation, extension and creation is erratic, by way of pragmatic response to novel events and activities. Contract, copyright, patent and database right, data protection, e-commerce and crime are illustrations of how IT law has developed in response to the growth of the information and technology industries which have transformed society. Significant landmarks include the introduction from the early 1950s of computers for commercial use, the development of the software industry, the PC, digital technology, the internet, the World Wide Web, and most recently, the various channels of social media.

Sources of English Law

Legal systems are based on territory - even for online activities which may be global, such as a sale of goods made from a website or by email. English law is made and changed by means of legislation - Acts of Parliament or statutes such as the Data Protection Act 1998 - and judicial precedent, cases decided by the courts. Many of our primary legal principles have been made and developed by judges interpreting the law in decisions on actual situations. Lower courts, like the High Court, are bound to follow principles established by higher courts, like the Court of Appeal.

The cases may be about interpreting legislation made in Parliament - for example, the courts have made clearer the meaning of “personal data”, the term used in the Data Protection Act - or they may refine decisions made in earlier cases where there is no legislation. Thus in the UK there is no Act of Parliament saying that certain information must be confidential - apart from legislation on personal data. “Confidential information” has been defined and refined over time by judges who decide, in the particular circumstances brought before them and taking previous decisions into account, whether the information under consideration should be specially protected.

As well as Parliament and the courts, in the United Kingdom, as in the other Member States of the European Union (“EU”), it is necessary to take into account the increasing effect of EU law. The overall aim of almost all EU legislation is to harmonise the varying laws within the member states, in order to promote economic and social progress. There is considerable harmonisation of laws relating to IT. To continue with the example of the Data Protection Act, this implemented the EU’s Data Protection Directive in the UK; indeed there is a new Data Protection Regulation currently proposed by the European Commission, which is likely to be implemented in member states in the next two or three years.

Beyond the EU, to facilitate international trade, countries have always made agreements with each other, known as Treaties or Conventions. The Berne Convention is the most widely observed international treaty relating to copyright, originating in 1886, and since adapted to deal with software.

Contracts

In 1969 IBM, then the predominant IT company, first started charging separately for systems engineering, training and software. A distinct software industry emerged with an ongoing need to protect its software assets and to provide software, systems and value added products and services, through contracts.

There is now a myriad of specialised IT contracts for specific purposes. Many contracts focus on transactions and relationships involving various combinations of services, value added components and systems. Hardware, and to some extent software, has become commoditised and can often be supplied on standard terms and conditions. Many IT contracts are for licensing, distribution, marketing, joint ventures and business continuity.

Legislation, often resulting from EU legislation, affects the content of commercial contracts, including those related to IT products and services. Some statutes and regulations, such as those for e-commerce, are especially applicable to IT contracts.

Developing case law has led to other changes to IT contracts. Several disputes have been litigated involving problematic IT projects, originally entered into with enthusiasm and optimism by both supplier and customer. Typical situations which lead to disputes are:

the contractual relationship has broken down because of a mis-match of expectations;

complete failure to supply a system, or a failure to deliver all parts of what had been agreed;

arguments over the extent of the liability by the supplier, and whether contractual limits were justified.

The courts have generally taken a practical approach and it seems as if the individual circumstances and the behaviour of the respective parties have weighed heavily in the interpretation of the legal principles applicable. The disputed agreements have often been made on the supplier’s standard terms and conditions which did not always reflect the actual transaction. But sometimes there has been nothing or very little in writing, or worse still, the dispute has been over whether there even was an enforceable contract in place.

Existing legal principles and laws on contract are adapted to apply them to the facts of an IT dispute, to reach a decision on liability - who was at fault, and what compensation has to be paid to the injured party.

Patent, Copyright and Database Right

Patents are monopoly rights granted in a particular territory to enable the owners of novel inventions, capable of industrial application, to benefit from the commercial exploitation of the products or processes. Patent law is applied to computing technologies in the same way as to other inventions in the UK and Europe. Certain kinds of inventions are excluded from patentability. Software “as such” and business methods are more readily patentable in the US but cannot be patented in the EU or the UK. Software with a technical effect, however, may be - and frequently is.

Copyright protects original literary, dramatic and musical works, including manuals, graphical content, sound recordings and other recorded performances, which may all be used in IT, including on the internet. It protects the expression of an idea. It is literally the “right to copy”. Therefore copying copyright material without authorisation from the copyright holder infringes copyright. The law, via EU legislation from 1991 and case law, has adapted to protect most software, certainly most application software, by copyright.

Copyright ownership belongs first to the creator of the original work, unless the creator is an employee in which case the employer owns the rights. Ownership must be transferred in writing. Software is normally licensed by its owner to one, or more often to many, users so that the owner retains its valuable asset in its business and can control the use of the software by imposing conditions as part of the licence. Software supplied as a service, remotely, via the cloud, still needs to be licensed to its users.


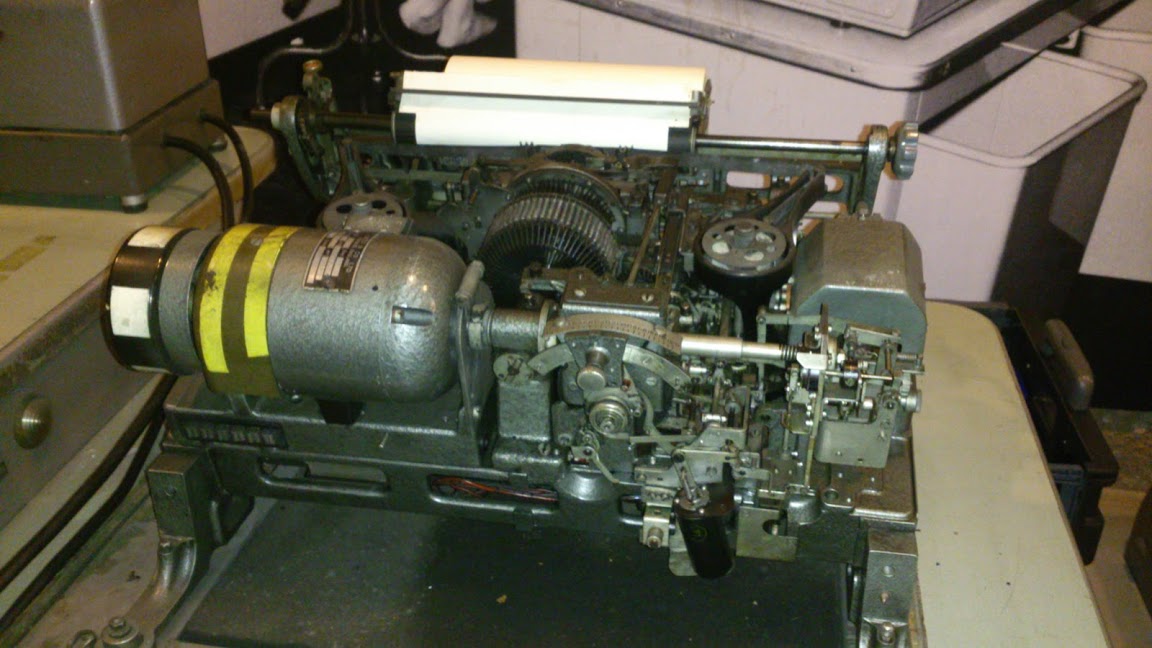

Bill Gates circa 1984

The importance of software licensing for profit is illustrated by the operating system for the first IBM PC. In 1980 IBM was in search of an operating system for the PC it was developing. Bill Gates had already bought the rights to an operating system for his company’s use. Microsoft developed the system further and renamed it MS-DOS. IBM became the first licensee, calling the system PC-DOS, for a one-time fee of about $80,000, giving IBM the royalty-free right to use the operating system for ever. This is one of the earliest examples in the software industry of promoting strategic value by means of a give-away bargain, and was the foundation of Microsoft’s success. IBM had not bought the rights. IBM did not have an exclusive licence. Subsequent makers of machines similar to the IBM PC, like Dell and Compaq, also needed MS-DOS. Again, Microsoft licensed it to them - on Microsoft’s terms. The story is related in The Road Ahead by Bill Gates, and in Accidental Empires by Robert X Cringely.

Not all software components are protected by copyright. The business functionality of a computer program and the programming language and format of data files do not constitute forms of expression of that program and so are not protected. Copying the individual command codes entered by a user to achieve particular results - defining commands and their syntax, defining a computer language - is not infringement.

Copyright works may easily be transmitted, downloaded and digitally manipulated over the internet and on social media channels. Copying, including peer-to-peer file sharing to distribute music or films over the internet without the permission of the copyright holder, is illegal. It is often referred to as digital piracy and has been of concern to copyright owners and governments for years.

Newspapers and other news media, as well as the author, are held liable for publishing copyright material or content which is defamatory or otherwise illegal. But the Royal Mail is not held liable for copyright infringement or defamation in the content of letters it delivers. Likewise, intermediary service providers - ISPs - are not generally held to be responsible for the actions of their users. This is a result of EU e-commerce legislation introduced 13 years ago. ISPs will not be liable for transmitting or temporarily storing illegal material, provided that they did not initiate or modify the transmissions, select the receivers, or select or modify the information. If they are made aware of the offending information or illegal activity, they must remove it promptly or disable access to it. For this passive role of ISPs in channelling information or providing access to a communications network, the technical term is that they are a “mere conduit”, that is, they are acting only as intermediaries.

Controversial UK legislation aims to tackle the problem of identifying online copyright infringers, in particular those involved in illegal file-sharing. The largest ISPs who run fixed internet access will be required to warn subscribers whose IP addresses are reported to them by copyright owners as infringing, that they are breaking the law and should stop. For a subscriber who has been warned on more than three occasions within any 12 months, the ISP is required to provide the copyright owner with details to enable legal action to be taken against the infringer. Ofcom has drawn up a Code with the details of how the procedure will work. This law is still evolving to decide where the balance should properly and practically lie; when intermediaries should face liability; and how far they should be forced to co-operate in the process of identifying anonymous users.

Meanwhile copyright owners have succeeded in obtaining court orders to require ISPs to block access to websites on grounds of copyright infringement. BT, one of the ISPs for whom court orders have been made, does not support or condone copyright infringement, but to avoid business exposure and potential liability it requires a court order in order to block a service. Rights holders can therefore prevent proven online infringement by this means and cause the infringing material to be cut off at source. BT prefers this approach, where the defendants have been found in breach of copyright rather than the more interventionist approach.

Over the last 50 years increasing capacities of inexpensive mass data storage and processing power have transformed the collection, collation, dissemination and use of information and turned it into a commodity which may be profitably traded, for example through online commercial databases. The importance of these has expanded since extensive commercial computerisation in the late 1960s and the 1970s, and the development of relational databases. Databases where the contents have been selected or arranged with some kind of intellectual creativity will be protected by copyright. But other databases, such as customer lists, football or other sporting fixture lists, will be protected by the database right. A database right holder can prevent others from extracting or re-using the database contents without permission. This is new law introduced by the EU in 1996, and applies to databases within the European Economic Area.

Data Protection

Increased capacities for computer data storage and processing led directly to laws on data protection. The increasing use of computers in the 1970s prompted concerns about the risks they posed to privacy. In 1981 the Council of Europe Convention established standards among member countries, to ensure the free flow of information among them without infringing personal privacy. This resulted in EU data protection legislation, granting rights to citizens on how their personal data should be processed, and regulating its obtaining, processing, use and storage at law. The UK’s first Data Protection Act, in 1984, applied only to computer-held personal data. The next Data Protection Act, fourteen years later, was not limited in this way, and had the explicit aim of protecting individuals’ rights and privacy. Amongst other things, it specified conditions for processing personal data, tightened restrictions on the use of particularly sensitive information, and set out controls for the transfer of personal data outside the European Economic Area.

Consumer awareness of rights in relation to data protection has increased, especially in relation to marketing emails, the disclosure of bank details, inaccurate personal data and the unauthorised disclosure of personal data, now through social media as well as by more traditional means of communication.

The increased use of the internet, technological advances, such as the growth of cloud computing, and the growth of global e-commerce have all brought fresh legal challenges for privacy. For instance, social networking sites like Facebook have become a major cause of privacy concerns. Changes to data protection law are therefore being proposed in the EU.

Compliance - Increased Regulation

The anarchic early days of the internet could be said to have been dominated by the creativity of technological innovation, people often acting primarily from independent personal curiosity and scientific experiment. Because the technology was so successful and pervasive, commercial and business interests moved in, leading to more and more regulation.

The EU introduced e-commerce legislation back in the year 2000, to provide a basic framework to facilitate the development of “information society services”, harmonising the different regimes across member states, encouraging e-trading and boosting consumer confidence. This is new law specifically to respond to technological innovation. It was this legislation that introduced the exemption from liability for ISPs as mere conduits, discussed earlier.

Providers of online services throughout the EU are regulated in the geographic location where they are “established”. This means the place where the supplier pursues an economic activity through a fixed establishment, irrespective of the location of its website or servers - the “country of origin” basis. The aim is to ensure that service providers need comply with only one set of laws, at least in respect of their status and registration, those with which they should be most familiar - not those of 27 member states.

Providers of information society services must make information about themselves and their activities available online. Information about rights, obligations and dispute resolution for e-commerce must be available, via organisations such as the Citizens’ Advice Bureau. These provisions are intended to meet transparency requirements for consumer confidence and fair trading, and to increase use of e-commerce. However, the extent to which they work in practice across jurisdictions is limited.

An example of legal regulation adapting to technology is in the Companies Act 2006, which superseded previous company legislation to create an up to date code of company law. It recognises that e-communications have become a normal way of doing business, and enables companies to communicate electronically with shareholders by email or via websites, to send out company documentation electronically, to file documents electronically at Companies House, and so on.

Crime

Various crimes relating to IT have been created through legislation.

The Computer Misuse Act 1990 illustrates how new law has been brought into force to criminalise certain activities in relation to computers. New criminal offences were created, including unauthorised access and unauthorised modification, hacking and deliberate corruption or destruction of data. It has since been amended to ensure that attacks against computer data, systems and networks, “denial of service” attacks, and supplying the software tools or data to launch an attack, are now also definitively criminal offences.

Further criminal offences have been created, or existing offences may be committed using IT. For example, the content of websites and e-communications and social media may be illegal by being racist - adapting existing law - or, by containing child pornography - new law.

It is a criminal offence to send any article which is indecent or grossly offensive, or which conveys a threat, or which is false with intent to cause distress or anxiety to the recipient. This offence, though of general application, may be committed by sending electronic messages as well as by more traditional hard copy writing or photographs, for example by “trolling”: emailing or posting online offensive communications in chat rooms and online forums.

Terrorism is an offence of violence for political purposes, including violence for the purpose of advancing any political, religious, ideological or racial cause. It is also an offence to encourage terrorism or disseminate terrorist publications. The offence may be committed by a blogger or other person making a social media posting which encourages terrorism or disseminates terrorist publications. There is a “notice and takedown” regime applicable to electronically published terrorist material. A notice may be served on an ISP or website hosting company requiring removal of the offending post within two days. Failure to remove the material without “reasonable excuse” means that the operator will be deemed to have endorsed the post. ISPs and other intermediaries acting as “mere conduits”, as discussed earlier, or who cache or host third party content, will be protected. If they host offending content, they must remove or block it immediately once they learn that the information is terrorist-related. This adapts the law on terrorism to encompass offences of violence by technological means.

Conclusion

These are a few illustrations to date of how IT law is continually developing in response to technological developments. It is not a smooth evolutionary process.

Change is endemic in the IT industry, and at the same time IT is itself a continuing vehicle for progress. Both IT and law are dynamic, constantly developing and consistently influencing each other. And laws always have to adjust to meet changing circumstances.

This article is an edited version of the lecture given on 20th September 2012 at the London Science Museum. Rachel Burnett is Chair of the Computer Conservation Society, a past President of BCS and is a practising lawyer specialising in IT law. Her email address is rb@burnett.uk.net.

Largely denied any part in the US space programme, the UK computer industry made a major breakthrough into the European space programme following a £300,000 order from the European Space and Research Organisation for two mobile Mod One computers to test systems incorporated in the GEOS satellite.

The last, and largest, of the three Atlas 1 computers was formally shut-down.

£1m+ installation of a 1906A at Liverpool University to replace existing KDF9, to provide a three-fold increase in computing power, approved by the Computer Board.

WITCH (aka Harwell Dekatron) put into semi-retirement by Wolverhampton Polytechnic.

The first CTL MiniMod, with 16K words of memory, delivered to NPL to assist with the experimental packet-switched network.

UK’s first complete in-store Point-of-Sale terminal installed at the new Bentall’s store in Bracknell.

NatWest Bank network between Kegworth and London the first UK system to use PCM data transmission, reducing the need for 200 non-PCM wire-pairs down to 14 PCM wire-pairs. It was also the first online real-time connection operating at 48 Kbps.

ICL 2903 small-business system launched at the Hanover Fair.

ICL R&D Dept. studying giant multi-processor arrays.

GEC-Elliott win order for a computer-based dynamic position control system for use in the offshore drilling vessel “Wimpey Sealab”.

World’s first computer-controlled run-up at a nuclear power station at Dungeness B, using two Argus 500s to control two reactor/turbo-generator sets.

The international banking message switching service achieves official status following agreement of 240 banks in 13 countries to participate in SWIFT.

Mirfac language developed by RARDE at Fort Halstead sets out to provide a means of natural expression for scientific programming.

Science Research Council places £1m order for two IBM 370/145s for work in connection with elementary particle physics at Birmingham and Glasgow Universities, where they will replace two existing IBM 360/44s.

ICL System 4/70 installed at Culham Laboratory to provide computing facilities and assistance for power generation from nuclear fusion project.

Announcement of an experiment in Parliament to provide MPs with computer facilities by ICL, with a view to installing an information retrieval system.

Virtually the whole length of the M4 motorway now equipped with traffic warning signals controlled by a dual GEC 905 system at Almondsbury, linked to police headquarters at Kidlington, Devizes, Almondsbury and Cwmbran.

Building equipment SGB Group’s ICT 1301 decommissioned and replaced with an ICL 1904E. Rumours that the 1301 will go to a museum.

Low cost, alphanumeric bar-code reader, the first of its kind in the world, introduced by SB Electronic Systems of London.

Argus 700 series, specifically designed for real-time information systems and data communications networks, announced by Ferranti.

First public demonstration, under the aegis of the Parliamentary and Scientific Committee, of online computer information services to provide MPs with access to contemporary and historical databanks.

UK Home Office evaluating an experimental fingerprint comparison system developed by Ferranti and based on an Argus 500 with 2Mbits of disc storage.

New system for Computer Numerical Control developed by the National Engineering Laboratory at East Kilbride using an 8K Minic computer from Micro Computer Systems Ltd.

First all-solid state, non-mechanical, alphanumeric keyboard claimed by Alphameric Keyboards Ltd. of West Molesey.

Ferranti seek Stock Exchange quote.

Memorex sells its 1,000,000th tape reel, to Shell-Mex and BP.

New software package for mapping by computer developed by Applied Research of Cambridge. Called Choropleth, it is available in Fortran and PL/1 and is said to be usable on most computers, with core size 12K 48-bit words.

Programmers and Analysts earn £2000 - £3500. Managers and Marketeers earn up to £7000.

In my role as a volunteer demonstrator for the Hartree Differential Analyser I often have to provide some information and amusement to the visitors while the Differential Analyser is being re-configured.

Usually this takes the form of a short demonstration of the Pegasus Emulator on a Windows/XP PC and a short chat about the other large items of equipment in the Computing and Calculations Gallery at MOSI. These include, in order of age:-

|

|

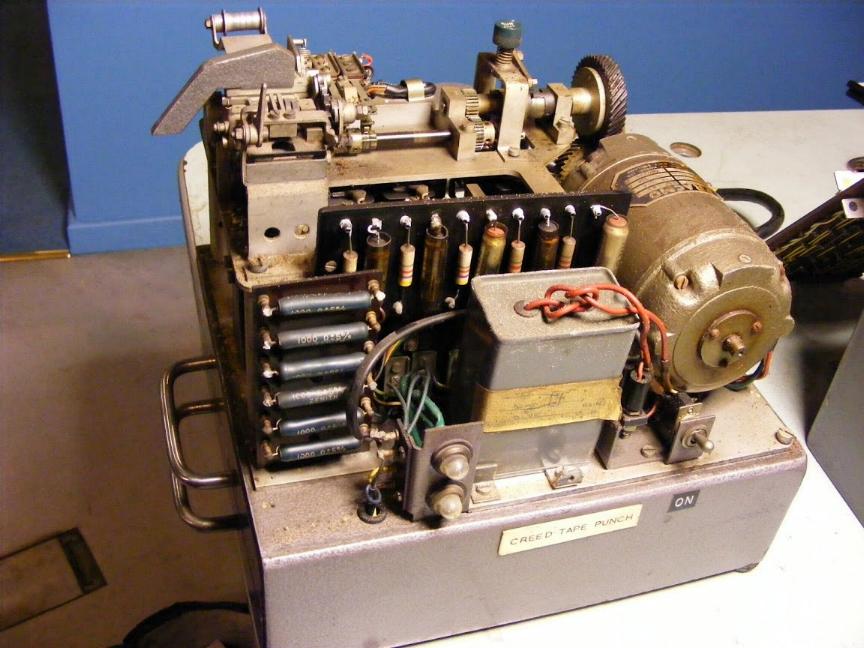

Creed Paper Tape Punch |

At this point I start to feel slightly inadequate as even to a hardened computing history enthusiast like myself, in their current state these things do little to stir the imagination, or to give an impression as to what was involved in computing before the ubiquitous Wintel or Lintel PC. Whilst to an enthusiast the Pegasus emulator on the PC is interesting, to me it does a poor job of conveying what computing is about. However, whilst the Pegasus is preserved as the “type sample”, if we could use the peripherals with the emulator to demonstrate input and output devices we might well be able to get a little more life and motion into the gallery, and the visitors may be able to come away with some pictures of data in motion.

Creed 54 Teleprinter

To this end I have started to assess the condition of the Creed equipment formerly attached to the Pegasus with a view to using it for demonstrating paper tape handling whilst using the Windows Pegasus emulator to provide the compute power.

An initial inspection of the equipment has not found any obvious faults with the punch, reader and teleprinter and the next step will be to obtain permission to apply power and carry out some off-line tests.

Dave Wade is a volunteer on the Hartree Differential Analyser at MOSI. Contact at dave.g4ugm@gmail.com.

North West Group contact details

Chairman Tom Hinchliffe: Tel: 01663 765040.

| 27 Sep 2013 | 100 Years of IBM | Terry Muldoon |

| | IBM Hursley | David Key |

| | + Visit to the IBM Museum at Hursley Park - see Society Activity | |

| 17 Oct 2013 | Annual General Meeting at 14:00 then | |

| | to be announced | |

| 21 Nov 2013 | to be announced | |

| 12 Dec 2013 | The Antikythera Device | Tony Freeth |

London meetings normally take place in the Fellows’ Library of the Science Museum, starting at 14:30. The entrance is in Exhibition Road, next to the exit from the tunnel from South Kensington Station, on the left as you come up the steps. For queries about London meetings please contact Roger Johnson at r.johnson@bcs.org.uk, or by post to Roger at Birkbeck College, Malet Street, London WC1E 7HX.

| 17 Sep 2013 | Early Use of PCs for Business Purposes | Lee Griffiths |

| 15 Oct 2013 | Enigma, the Untold Story: the Intelligence Chase for V1, V2 and V3 | Phil Judkins |

| 19 Nov 2013 | Misplaced Ingenuity; Some Dead Ends in Computer History | Hamish Carmichael |

North West Group meetings take place in the Conference Centre at MOSI - the Museum of Science and Industry in Manchester - usually starting at 17:30; tea is served from 17:00. For queries about Manchester meetings please contact Gordon Adshead at gordon@adshead.com.

Details are subject to change. Members wishing to attend any meeting are advised to check the events page on the Society website at www.computerconservationsociety.org/lecture.htm. Details are also published in the events calendar at www.bcs.org.

CCS Web Site Information

The Society has its own Web site, which is located at ccs.bcs.org. It contains news items, details of forthcoming events, and also electronic copies of all past issues of Resurrection, in both HTML and PDF formats, which can be downloaded for printing. We also have an FTP site at ftp.cs.man.ac.uk/pub/CCS-Archive, where there is other material for downloading including simulators for historic machines. Please note that the latter URL is case-sensitive.

Contact details

Readers wishing to contact the Editor may do so by email to

| Chair Rachel Burnett FBCS | rb@burnett.uk.net |

| Secretary Kevin Murrell MBCS | kevin.murrell@tnmoc.org |

| Treasurer Dan Hayton MBCS | daniel@newcomen.demon.co.uk |

| Chairman, North West Group Tom Hinchliffe | tah25@btinternet.com |

| Secretary, North West Group Gordon Adshead MBCS | gordon@adshead.com |

| Editor, Resurrection Dik Leatherdale MBCS | dik@leatherdale.net |

| Web Site Editor Dik Leatherdale MBCS | dik@leatherdale.net |

| Meetings Secretary Dr Roger Johnson FBCS | r.johnson@bcs.org.uk |

| Digital Archivist Prof. Simon Lavington FBCS FIEE CEng | lavis@essex.ac.uk |

Museum Representatives | |

| Science Museum Dr Tilly Blyth | tilly.blyth@nmsi.ac.uk |

| Bletchley Park Trust Kelsey Griffin | kgriffin@bletchleypark.org.uk |

| TNMoC Dr David Hartley FBCS CEng | david.hartley@clare.cam.ac.uk |

Project Leaders | |

| SSEM Chris Burton CEng FIEE FBCS | cpb@envex.demon.co.uk |

| Bombe John Harper Hon FBCS CEng MIEE | bombeebm@gmail.com |

| Elliott Terry Froggatt CEng MBCS | ccs@tjf.org.uk |

| Ferranti Pegasus Len Hewitt MBCS | leonard.hewitt@ntlworld.com |

| Software Conservation Dr Dave Holdsworth CEng Hon FBCS | ecldh@leeds.ac.uk |

| Elliott 401 & ICT 1301 Rod Brown | sayhi-torod@shedlandz.co.uk |

| Harwell Dekatron Computer Delwyn Holroyd | delwyn@dsl.pipex.com |

| Computer Heritage Prof. Simon Lavington FBCS FIEE CEng | lavis@essex.ac.uk |

| DEC Kevin Murrell MBCS | kevin.murrell@tnmoc.org |

| Differential Analyser Dr Charles Lindsey FBCS | chl@clerew.man.ac.uk |

| ICL 2966 Delwyn Holroyd | delwyn@dsl.pipex.com |

| Analytical Engine Dr Doron Swade MBE FBCS | doron.swade@blueyonder.co.uk |

| EDSAC Dr Andrew Herbert OBE FREng FBCS | andrew@herbertfamily.org.uk |

| Tony Sale Award Peta Walmisley | peta@pwcepis.demon.co.uk |

Others | |

| Prof. Martin Campbell-Kelly FBCS | m.campbell-kelly@warwick.ac.uk |

| Peter Holland MBCS | p.holland@talktalk.net |

| Pete Chilvers | pete@pchilvers.plus.com |

Readers who have general queries to put to the Society should address them to the Secretary: contact details are given elsewhere. Members who move house should notify Kevin Murrell of their new address to ensure that they continue to receive copies of Resurrection. Those who are also members of BCS, however, need only notify BCS of their change of address; separate notification to the CCS is unnecessary.

The Computer Conservation Society (CCS) is a co-operative venture between BCS, The Chartered Institute for IT, the Science Museum of London and the Museum of Science and Industry (MOSI) in Manchester.

The CCS was constituted in September 1989 as a Specialist Group of the British Computer Society (BCS). It is thus covered by the Royal Charter and charitable status of BCS.

The aims of the CCS are to

Membership is open to anyone interested in computer conservation and the history of computing.

The CCS is funded and supported by voluntary subscriptions from members, a grant from BCS, fees from corporate membership, donations, and by the free use of the facilities of both museums. Some charges may be made for publications and attendance at seminars and conferences.

There are a number of active Projects on specific computer restorations and early computer technologies and software. Younger people are especially encouraged to take part in order to achieve skills transfer.

Resurrection is the bulletin of the Computer Conservation Society.

Editor - Dik Leatherdale

Printed by - BCS, The Chartered Institute for IT

© Copyright Computer Conservation Society